San Jose, Calif. — At the IBM Almaden Research Center, a team of researchers is creating the artificial brains of the future. Within the walls of Almaden is the Cognitive Computing Lab, whose staff is working on one of the coolest projects in technology.

We got a tour of the lab from Dharmendra Modha (pictured), principal investigator on the brain chip project. You can joke about Skynet, the self-aware computer network from Arnold Schwarzenegger's Terminator films, or HAL 9000, the rogue computer from 2001: A Space Odyssey, but this lab isn't science fiction. IBM and its partners have already built a prototype brain-like chip, and Modha and his colleagues showed us how it works.

When commercialized, these brain chips could turn computing on its head, replacing current machines with something much more like a thinking artificial brain. The idea is to create computers that handle real-world sensory problems better than today's computers do, and to build applications such as pattern recognition for business, science and government.

The brain chips combine the basic building blocks of computing (processing, memory and communications) in an architecture that resembles the brain, rather than the serial von Neumann architecture, named for John von Neumann, that has dominated computing for 65 years. IBM calls the larger project Synapse (short for Systems of Neuromorphic Adaptive Plastic Scalable Electronics, or SyNAPSE).

As part of a huge effort with six labs at IBM, four universities and the Defense Advanced Research Projects Agency, the cognitive computing team that we’ll meet in our video is building a series of applications that show off what the chip can do.

Modha starts our video tour with an overview of the project. We wrote about the project when IBM announced it in November 2008 and again when it hit its first milestone in November 2009. Now the researchers have completed phase one: designing a fundamental computing unit that can be replicated over and over to form the building blocks of an actual brain-like computer.

“What you’re seeing is a dream slowly crystallizing into reality,” Modha said. “We’re all trying to bring together neuroscience, supercomputing, and nanotechnology so as to create the seeds of a new generation of computers: cognitive computers that are more like we behave.”

The brain-like processors with integrated memory don't operate fast at all, sending data at a mere 10 hertz, far slower than the 5-gigahertz processors of today. But the human brain does a great deal of work in parallel, sending signals out in all directions and keeping its neurons working simultaneously. Because the brain has more than 10 billion neurons and 10 trillion connections (synapses) between them, it is capable of an enormous amount of computation.
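As a rough, back-of-the-envelope illustration of why parallelism matters, assume (generously, and only for this estimate) that every one of the brain's 10 trillion synapses passed a signal at the 10-hertz rate mentioned above, and compare that with a single 5-gigahertz processor core completing one operation per clock cycle. Both assumptions are simplifications, not measurements:

$$
10^{13}\ \text{synapses} \times 10\ \text{Hz} = 10^{14}\ \text{synaptic events/s}
$$

$$
5 \times 10^{9}\ \text{cycles/s} \times 1\ \text{op/cycle} = 5 \times 10^{9}\ \text{ops/s}
$$

$$
\frac{10^{14}}{5 \times 10^{9}} = 2 \times 10^{4}
$$

So even at a rate 500 million times slower than the processor's clock, massive parallelism could in principle deliver about 20,000 times as many elementary events per second, although a synaptic event and a processor operation are not equivalent units of work.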

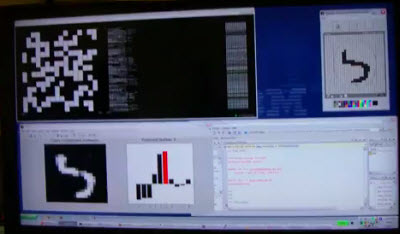

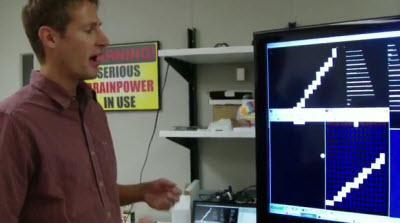

After Modha explains the project, he introduces us to Paul Merolla (pictured left), a research scientist at Almaden, who shows us a computer vision classification application in which the computer recognizes a number from one to ten based on a partial drawing. The chip can learn from experience, such as looking at images and deciding whether it recognizes them. Merolla can tell it when it has guessed correctly, and it uses that feedback in future recognition tests. The chip doesn't always guess right, since it has to interpret what you're drawing in real time.

Merolla said the chip extracts features from the drawing and tries to work out what he is drawing. It then shows how confident it is in its prediction and, once the answer is clear, returns that prediction.
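IBM didn't describe the chip's learning rule during the tour, so the following is only a loose, conventional-software analogy of the loop Merolla described: guess from extracted features, report a confidence, commit to an answer only once that confidence clears a threshold, and fold the user's feedback into future guesses. It uses a simple nearest-prototype classifier with softmax confidences, a swapped-in technique chosen for brevity; the class name `FeedbackClassifier`, the `threshold` parameter and the toy three-number feature vectors are all hypothetical.

```python
import math


class FeedbackClassifier:
    """Hypothetical sketch: keeps one prototype (running mean) per confirmed label."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold  # minimum confidence before committing to an answer
        self.sums = {}              # label -> element-wise sum of confirmed feature vectors
        self.counts = {}            # label -> number of confirmed examples

    def _prototype(self, label):
        n = self.counts[label]
        return [s / n for s in self.sums[label]]

    def confidences(self, features):
        """Softmax over negative squared distances to each label's prototype."""
        if not self.counts:
            return {}
        scores = {}
        for label in self.counts:
            proto = self._prototype(label)
            scores[label] = -sum((f - p) ** 2 for f, p in zip(features, proto))
        top = max(scores.values())  # subtract the max for numerical stability
        exps = {label: math.exp(s - top) for label, s in scores.items()}
        total = sum(exps.values())
        return {label: e / total for label, e in exps.items()}

    def predict(self, features):
        """Return (label, confidence), or (None, confidence) if the answer isn't clear yet."""
        conf = self.confidences(features)
        if not conf:
            return None, 0.0
        best = max(conf, key=conf.get)
        if conf[best] >= self.threshold:
            return best, conf[best]
        return None, conf[best]

    def feedback(self, features, true_label):
        """Fold a confirmed example into that label's running mean."""
        if true_label not in self.sums:
            self.sums[true_label] = [0.0] * len(features)
            self.counts[true_label] = 0
        self.sums[true_label] = [s + f for s, f in zip(self.sums[true_label], features)]
        self.counts[true_label] += 1
```

For example, after `clf.feedback([1.0, 0.0, 0.0], "1")` and `clf.feedback([0.0, 1.0, 1.0], "7")`, calling `clf.predict([0.9, 0.1, 0.0])` commits to `"1"`, while an input equidistant from both prototypes returns `None` because neither label clears the threshold.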

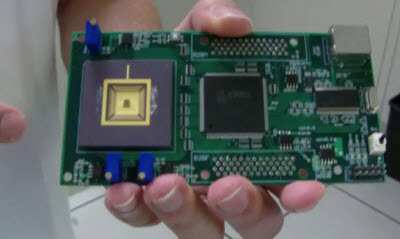

John Arthur (pictured below), an IBM research scientist and co-creator of the brain chip, showed us what the chip looks like mounted on a small circuit board. The chip is tiny, just 2 millimeters by 3 millimeters, and it is surrounded by packaging that gets data in and out of it. This second-generation prototype has the equivalent of 256 neurons and an array of 262,000 synapses, which makes it about as advanced as the brain of a worm.

The so-called “neurosynaptic computing chips” recreate a brain phenomenon known as “spiking,” the brief signals neurons send one another through synapses. Bill Risk, the IBM research scientist pictured below, has built a whole “brain wall” that visually represents what a collection of 1,000 chips with 250,000 neurons could do. Each dot on the wall represents a spiking event in a single neuron, and each square represents one chip.
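To make “spiking” concrete, here is a textbook leaky integrate-and-fire neuron, a standard simplified model in which a neuron accumulates input, slowly leaks charge, and emits a spike when it crosses a threshold. This is a generic sketch, not a description of the circuitry on IBM's chip; the function names and the `leak`, `threshold` and `reset` values are arbitrary assumptions. Each 1 in the output corresponds to the kind of event a single dot on Risk's wall represents, and the hypothetical `raster` helper prints several neurons' spikes as rows of dots.

```python
def simulate_lif(inputs, leak=0.9, threshold=1.0, reset=0.0):
    """Generic leaky integrate-and-fire neuron (textbook model, not IBM's circuit).

    inputs: input current for each time step.
    Returns a list with 1 where the neuron spiked and 0 elsewhere.
    """
    v = reset  # membrane potential
    spikes = []
    for current in inputs:
        v = leak * v + current  # leak some charge, then integrate the new input
        if v >= threshold:      # crossing the threshold emits a spike...
            spikes.append(1)
            v = reset           # ...and the potential resets afterward
        else:
            spikes.append(0)
    return spikes


def raster(spike_trains):
    """Render spike trains as text, one row per neuron ('#' = spike, '.' = quiet)."""
    return "\n".join("".join("#" if s else "." for s in train) for train in spike_trains)
```

With a steady input of 0.4 per step, `simulate_lif([0.4] * 6)` returns `[0, 0, 1, 0, 0, 1]`: charge builds for two steps, the neuron fires on the third, resets, and repeats. A weak input of 0.05 per step never fires, because the leak caps the potential at 0.5.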

The wall is useful for debugging brain chips. The pattern shown here is a series of random tests on simulated chips; when a neuron fires, it turns white. The whole wall is about as sophisticated as a smart ant or a dumb bee. If the wall were actually thinking, we'd see some regions firing more than others.

The system can handle complex tasks such as playing Pong, Atari's classic arcade game. Steven Esser (pictured at bottom), another research scientist, showed how the machine can spot a pattern after the ball bounces off a wall, predict where the ball is going, and move the paddle to the right position to hit it back. That is very different from how computer opponents play in ordinary Pong games. Those opponents are given unfair advantages, such as the exact coordinates and movement of the ball and equations for how it will move.
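For contrast, the following sketch shows the kind of explicit equation a conventional Pong opponent can be handed: given the ball's exact position and velocity, it folds the straight-line path back between the top and bottom walls to find where the ball will meet the paddle. The function name and the assumption of perfectly elastic bounces off walls at `y = 0` and `y = height` are hypothetical; IBM's chip, as described, has to learn this behavior from what it sees instead of being given the formula.

```python
def predict_intercept_y(x, y, vx, vy, paddle_x, height):
    """Closed-form prediction of where the ball crosses the paddle's column.

    Assumes elastic bounces off walls at y = 0 and y = height (hypothetical setup).
    """
    if vx == 0:
        raise ValueError("ball never reaches the paddle's column")
    t = (paddle_x - x) / vx  # time until the ball reaches the paddle's x position
    if t < 0:
        raise ValueError("ball is moving away from the paddle")
    raw_y = y + vy * t       # where the ball would be if there were no walls
    period = 2 * height      # one full bounce cycle: across the court and back
    folded = raw_y % period  # Python's % keeps this in [0, period) even for negative raw_y
    # The second half of each cycle is the ball travelling back after a bounce.
    return folded if folded <= height else period - folded
```

For a ball at `(0, 2)` moving with velocity `(1, 1)` toward a paddle at `x = 10` in a court 10 units tall, `predict_intercept_y(0, 2, 1, 1, 10, 10)` returns `8.0`: the ball reaches the top wall and travels back down 2 units before arriving at the paddle.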

Besides playing Pong, the IBM team has tested the chip on solving problems related to navigation, machine vision, pattern recognition, associative memory (where you remember one thing that goes with another thing) and classification.
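Associative memory can be illustrated with a classic linear associator trained by a Hebbian outer-product rule: store pairs of patterns, then present one pattern and recall its partner. This is a textbook technique chosen for illustration, not IBM's implementation; the function names and the ±1 example patterns are hypothetical, and exact recall here relies on the stored keys being orthogonal.

```python
def train_associator(pairs):
    """Build weights W where W[i][j] sums value[i] * key[j] over all stored (key, value) pairs."""
    n_in = len(pairs[0][0])
    n_out = len(pairs[0][1])
    weights = [[0] * n_in for _ in range(n_out)]
    for key, value in pairs:
        for i in range(n_out):
            for j in range(n_in):
                # Hebbian rule: strengthen the link between units that are active together.
                weights[i][j] += value[i] * key[j]
    return weights


def recall(weights, key):
    """Return the associated +/-1 pattern by thresholding W @ key at zero."""
    return [1 if sum(w * k for w, k in zip(row, key)) >= 0 else -1 for row in weights]
```

Storing the pairs `([1, 1, -1, -1], [1, -1])` and `([1, -1, 1, -1], [-1, -1])` and then calling `recall(W, [1, 1, -1, -1])` returns `[1, -1]`, the pattern that was stored with that key.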

As a hypothetical application, IBM said that a cognitive computer could monitor the world’s water supply via a network of sensors and tiny motors that constantly record and report data such as temperature, pressure, wave height, acoustics, and ocean tide. It could then issue tsunami warnings in case of an earthquake. Or, a grocer stocking shelves could use an instrumented glove that monitors sights, smells, texture and temperature to flag contaminated produce. Or a computer could absorb data and flag unsafe intersections that are prone to traffic accidents. Those tasks are too hard for traditional computers.

For phase 2, IBM is working with a team of researchers that includes Columbia University; Cornell University; University of California, Merced; and University of Wisconsin, Madison. While this project is new, IBM has been studying brain-like computing since 1956, when it created the world's first brain simulation (with 512 neurons). Hopefully, we'll see a lot more progress from this team in the near future.

Check out the video tour below.

VentureBeat's mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.