Today marks the 10th anniversary of the launch of the Xbox and Microsoft’s Halo video game, as we’ve chronicled in our coverage. But those launches would never have happened without today’s other anniversary: 40 years since the launch of the original microprocessor.

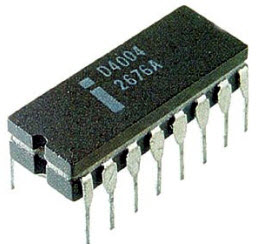

Intel introduced the 4004 microprocessor on Nov. 15, 1971, billing it as a computer on a chip. It was a central processing unit (CPU), and its progeny would become the brains of most things electronic, including PCs, game consoles, supercomputers, digital cameras, servers, smartphones, iPods, tablets, automobiles, microwave ovens and toys. Microprocessors have become an indispensable part of modern life, solving problems that range from displaying the time to calculating the effects of global warming.

“Today, there is no industry and no human endeavor that hasn’t been touched by microprocessors or microcontrollers,” said Federico Faggin, one of the trio of the microprocessor’s inventors, in a speech in 2009.

The first chip wasn’t very powerful; it was originally designed to perform math operations in a calculator made by Busicom. The 4-bit microprocessor ran at 740 kilohertz, compared with top speeds above 4 gigahertz today. If the speed of cars had increased at the same pace as chips, it would take about one second to drive from San Francisco to New York. Today’s fastest Intel CPUs for PCs run at clock speeds more than 5,000 times that of the 4004.
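The clock-speed comparison is simple arithmetic, and a minimal sketch makes it easy to check. The two frequencies come from the figures quoted above; the 4 gigahertz value is taken as a round stand-in for "above 4 gigahertz," and the function name is purely illustrative.

```python
# Clock-speed ratio implied by the figures quoted in the article.
CLOCK_4004_HZ = 740_000          # 740 kilohertz (the 4004)
CLOCK_MODERN_HZ = 4_000_000_000  # 4 gigahertz (assumed round figure for a current chip)


def clock_ratio(new_hz: float, old_hz: float) -> float:
    """Return how many times faster the new clock is than the old one."""
    return new_hz / old_hz


ratio = clock_ratio(CLOCK_MODERN_HZ, CLOCK_4004_HZ)
print(f"{ratio:,.0f}x")  # 5,405x
```

This only compares clock rates. It ignores wider data paths, multiple cores and more work done per clock cycle, so it understates the real gap in computing power.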

The microprocessor was a pioneering piece of work created by Faggin, Ted Hoff and Stan Mazor, all working for Intel. They created the chip at the behest of their Japanese customer, Masatoshi Shima, who worked for Busicom.

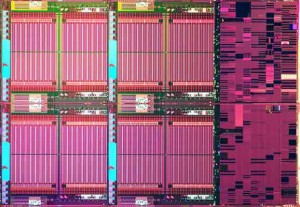

The chips got better and faster through a phenomenon known as Moore’s Law. First observed by Intel chairman emeritus Gordon Moore in 1965, the law holds that the number of transistors on a chip doubles about every two years. That’s possible because chip-making equipment can shrink the circuitry on a chip so the grid of components becomes finer and finer. As the circuits become smaller, the electrons travel shorter distances, so the chips become faster. They also consume less electricity and throw off less heat. And because the circuits take up less space, the chips can become smaller and cost less to make.

Now the circuits are less than 32 nanometers wide and soon will be at 22 nanometers. By 2014, Intel is planning for 14 nanometers. A nanometer is a billionth of a meter. Over time, the microprocessors could do more and more things.

Intel lucked out by getting its microprocessors in the first IBM PC in 1981. In 2010, about 1 million PCs were shipped every day. By 2015, Gartner predicts there will be 2.25 billion PCs across the world, compared to 1 billion in 2008. The industry is expected to grow 10.5 percent to 387.8 million units in 2011. Each month, about 12 billion videos are watched on YouTube.

“When I was chief executive at Intel I remember one of our young engineers coming in and describing how you could build a little computer for the home,” Moore said in 2005. “I said, ‘gee that’s fine. What would you use it for?’ And the only application he could think of was housewives putting their recipes on it.”

After about 20 cycles of Moore’s Law, Intel can put billions of transistors on a single chip, compared to 2,300 transistors for the 4004. That kind of exponential change over time is like comparing the population of a large village to the population of China.
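The jump from 2,300 transistors to billions can be checked against the two-year doubling rule. The sketch below assumes an idealized version of Moore’s Law, with exactly one doubling every two years starting from the 4004 in 1971; real chips only roughly follow that curve, and the function names are illustrative.

```python
import math

TRANSISTORS_4004 = 2_300   # transistor count of the 4004
LAUNCH_YEAR = 1971         # year the 4004 was introduced
DOUBLING_PERIOD_YEARS = 2  # idealized Moore's Law doubling period


def projected_transistors(year: int) -> float:
    """Transistor count in `year` if the count doubled every two years since 1971."""
    doublings = (year - LAUNCH_YEAR) / DOUBLING_PERIOD_YEARS
    return TRANSISTORS_4004 * 2 ** doublings


def doublings_needed(target: float) -> float:
    """Number of doublings required to grow from 2,300 transistors to `target`."""
    return math.log2(target / TRANSISTORS_4004)


print(f"{projected_transistors(2011):,.0f}")     # 2,411,724,800
print(f"{doublings_needed(1_000_000_000):.1f}")  # a little under 19 doublings
```

Forty years at one doubling every two years gives 20 doublings, which lands at roughly 2.4 billion transistors, in line with the "billions" figure.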

Today’s chips are built through a complex process involving hundreds of steps in clean rooms, or manufacturing environments where the air is 1,000 times cleaner than a hospital operating room. Each new factory now costs $5 billion to build.

The amusing thing about the microprocessor project was that everything seemed accidental and unorganized. Intel was founded in 1968 and work on the microprocessor began not long after, in 1969.

Hoff was the 12th employee hired at Intel by chief executive Robert Noyce. Back in 1969, it didn’t really make sense to talk about personal computers, Hoff once said. Shima wanted a calculator with a bunch of custom chips. But Hoff proposed to make a calculator using a general-purpose chip that could be programmed to do a number of different functions, according to the book, “The Microprocessor: A Biography,” by Michael S. Malone.

Shima didn’t like Hoff’s suggestion. But Intel founders Noyce and Moore encouraged Hoff to pursue the idea anyway. The Japanese would come around. Hoff and Mazor designed a solution with four chips: the 4-bit CPU, or the 4004; a read-only memory chip to store programs; a random access memory chip to hold data; and a shift register to provide connections to keyboards and other devices.

But they didn’t know how to translate this architecture into a working chip design. The project fell behind schedule. Then, in April 1970, Intel lured Faggin away from rival Fairchild Semiconductor. He was immediately assigned to work on the microprocessor.

Shima arrived at Intel from Japan to find that no work had been done on the project since his last visit.

“You bad! You bad!” Shima raged when he arrived.

Faggin said, “I just arrived here! I was hired yesterday!”

“You late,” Shima said.

Faggin went to work 12 to 16 hours a day, fixing the remaining architectural issues and then completing the logic and circuit design for the four chips. That was necessary to get them into manufacturing. Within three months, Faggin had done the work on translating the architecture into designs for four chips. In October 1970, the chip prototypes were run through the factory. But on the first run, the 4004 didn’t work. In early 1971, corrections were made and new prototypes were manufactured. By March, Intel sent the first chips to Busicom. But Busicom wasn’t that interested in the late chip.

Noyce saw the value of the microprocessor, though, and he secured the right to make the chip for other customers in May 1971. That turned out to be one of the best moves that Intel ever made.

Over time, the creators of the microprocessor received a series of accolades. In 1980, Hoff was named the first Intel Fellow and in 2010, President Barack Obama gave the trio the National Medal of Technology and Innovation.

In 2005, Moore observed, “What computers do well and what human brains do well are really completely different. I believe to get to real human intelligence we’ll have to go back and … understand how the brain works better than we do now and take a completely different approach.”

Intel’s current CEO, Paul Otellini, said, “The transformations in computing have unleashed wave after wave of business and personal productivity and have provided billions of people with their first real opportunity to participate in the global economy. Yet, today I would submit that we are still at the very early stages in the evolution of computing. We’ve just begun to see its impact on the course of history.”

He said that Intel is committed to making sure that Moore’s Law continues, even though Moore himself worries the industry will run into the fundamental limits of physics at some point in the future.

Now, researchers at IBM and elsewhere are trying to come up with chips that mimic the human brain. Justin Rattner, Intel’s chief technology officer, said in September, “The sheer number of advances in the next 40 years will equal or surpass all of the innovative activity that has taken place over the last 10,000 years of human history.”

He added, “At this point, it’s fair to ask, what lies ahead? What lies beyond multi-core and many-core computing? And the answer… is called extreme-scale computing. This is multi-core and many-core computing at the extremes. Our 10 year goal here is to achieve nothing modest: a 300x improvement in energy efficiency for computing at the extremes.”

Here’s a video that Intel created about the 4004.
