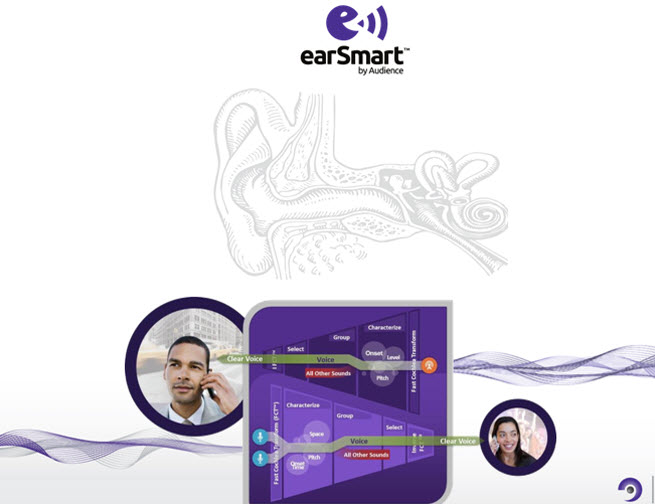

Since the year 2000, the sound scientists at audio chip firm Audience have tried to reproduce the way the human ear perceives sound. They turn that knowledge into computer models and create chips that process and reproduce sound so that you can hear it in your mobile phone calls.

Now the Mountain View, Calif.-based chip company is launching its first Smart Sound Processor that will deliver better voice quality on cell phone calls, improved speech recognition, and better audio playback in smart devices. The new chip is a codec, or audio encoder and decoder, dubbed the earSmart eS515 Smart Sound Processor, and it is debuting at the Consumer Electronics Show in Las Vegas today. The chip is the first in a family that focuses on high audio quality and low power consumption for mobile devices.

“This is the next generation in sound processing,” said Peter Santos (pictured right) in an interview with VentureBeat. “Our core technology is isolating voice or other sounds and then doing precise surgery on that data for the highest quality.”

Such chips are increasingly in demand for use inside mobile phones because they can make garbled voice calls clearer. That garbling is a problem as mobile networks become clogged and carry both voice and data at the same time, creating bottlenecks that put a lot of stress on mobile network providers such as AT&T.

Consumers want slimmer mobile devices with larger displays and more processing power. The Smart Sound Processor deals with this demand by tightly integrating technology that can reproduce and discern sounds for lower costs and less power consumption.

Santos said the Smart Sound Processor delivers simultaneous processing from three microphones, which enables more accurate sound detection and reproduction. It also handles automatic speech recognition, mobile audio zoom, and support for voice over the Internet.

Audience’s chips are already used in mobile devices like the Samsung Galaxy Note II, the Google Nexus 10 tablet, the Motorola Razr I, and the thinnest Android smartphone, the BBK Vivo X1. The company has shipped more than 200 million units and has more than 90 customers.

But the new chip’s features are better than what the company has produced to date. The three microphone inputs can gather more environmental information around a user and deliver vastly improved voice quality. The data can be used to discern the direction your voice is coming from as you speak into the phone. The chip can then filter out sounds that are coming from around you, like laughter at a party, the noise of cars on a street, or an airplane flying overhead. It can even remove echoes that a mobile phone listener would normally hear in spaces such as conference rooms and hallways.
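For readers curious how multiple microphones can reveal where a voice is coming from, here is a minimal sketch of one textbook approach: estimate how many samples later a sound reaches a second microphone, then turn that delay into an angle. This is not Audience's method, which the company has not detailed here. The function names, the 10-centimeter microphone spacing, and the synthetic signals are all assumptions made for illustration.

```python
import math

def estimate_delay(ref, other, max_lag):
    """Return the lag (in samples) at which `other` best matches `ref`.

    A positive result means the sound reached `other` later than `ref`.
    Uses a brute-force cross-correlation over lags in [-max_lag, max_lag].
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i, r in enumerate(ref):
            j = i + lag
            if 0 <= j < len(other):
                score += r * other[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def arrival_angle(delay_samples, sample_rate, mic_spacing_m, speed_of_sound=343.0):
    """Convert a delay into an angle in degrees off the broadside of a two-mic pair."""
    ratio = delay_samples / sample_rate * speed_of_sound / mic_spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # clamp so asin stays defined
    return math.degrees(math.asin(ratio))

# Synthetic example (assumed data): a pulse reaches mic B three samples after mic A.
pulse = [0.0, 1.0, 0.5, -0.5, -1.0, 0.0]
mic_a = [0.0] * 10 + pulse + [0.0] * 10
mic_b = [0.0] * 13 + pulse + [0.0] * 7

delay = estimate_delay(mic_a, mic_b, max_lag=8)
angle = arrival_angle(delay, sample_rate=48000, mic_spacing_m=0.1)
print(delay, round(angle, 1))
```

Once a direction is known, sounds arriving from other directions can in principle be attenuated. A third microphone, as on the eS515, would give additional pairs to compare, though how the chip actually uses them is not described in this article.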

The speech recognition helps on the other side of a phone call, recognizing spoken words and filtering out disruptive background noise. The chip uses custom hardware-accelerated mathematical algorithms to isolate a voice from surrounding environmental noise, dramatically improving speech recognition applications such as virtual assistants and voice search. In other words, Apple’s Siri might actually understand what you’re saying with this new technology built into an iPhone. (Audience does not have a chip in the newest Apple phones, and Apple has an internal group of audio chip designers.)

Santos said the new chip is also better at media recording and playback. It can capture audio with high-definition quality, with a technology called two-microphone 48-kilohertz noise suppression. And the Audio Zoom feature allows users to dynamically switch from narrate mode, with a single speaker, to interview mode, where the person holding the device can interview another person with crystal clear accuracy. In other words, it’s smart enough to discern two voices and make them sound clear, while filtering out background noise.

Audience was founded by Lloyd Watts, a researcher who worked with legendary brain and computer chip expert Carver Mead. Watts worked on audio technology at Paul Allen’s think tank, Interval Research, but that shut down in 2000. Allen’s Vulcan Ventures invested in Audience, and Mead joined Audience’s board for a time. The company got its first real capital in 2004 and started selling chips. Audience now has 290 employees, and it generated $40.3 million in its most recent quarter. The audio chips sell for about $1 each.

Audience competes in some respects with Jawbone, which makes a headset that takes additional information from the movement of your cheek muscles as you speak. But Santos said he believes his company’s solution is superior: “We believe we have a commercial and technical lead,” he said.

The chip is available in samples now.
