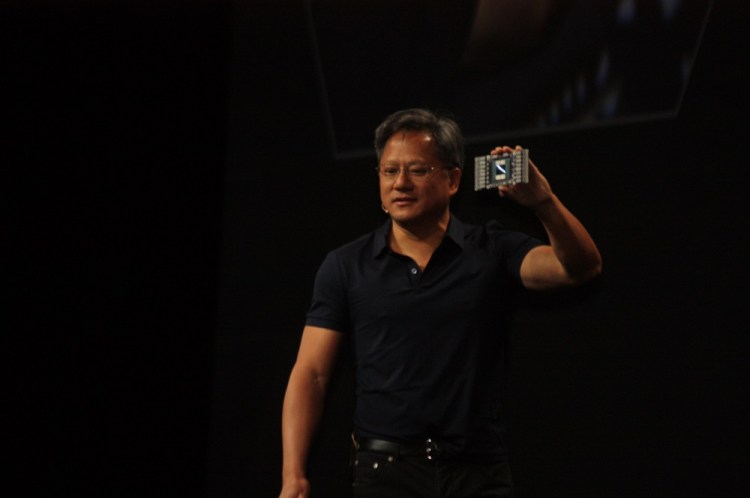

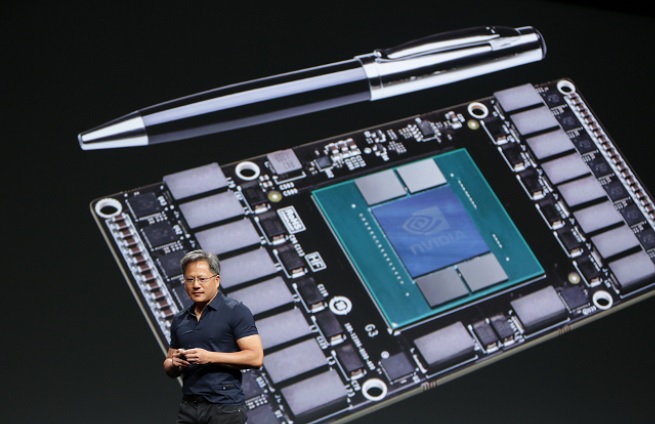

Nvidia chief executive Jen-Hsun Huang unveiled his company’s latest graphics-processor architecture, code-named Pascal, which he said will put a “supercomputer in the space of two credit cards.”

The new design is significant because Nvidia says it will enable a new generation of mobile devices, gaming PCs, and supercomputers.

The new chip architecture, which can be designed into many kinds of chips, will incorporate both a graphics processing unit (GPU) and a central processing unit (CPU) on the same chip. For the first time, it will also incorporate 3D memory: memory cells stacked in three dimensions rather than laid out in a single flat layer. Huang said that will give it 2.5 times more memory capacity and four times better energy efficiency. He made the announcement at the company’s GPUTech conference in San Jose on Tuesday.

The device will use Nvidia’s high-speed NVLink interconnect, which Nvidia says will move data five to 12 times faster than today’s PCI Express connections. The goal is to bring big-data computing to mobile and desktop devices. The architecture will debut as the successor to Maxwell, which is used in today’s graphics chips and mobile processors.

Together, the larger memory and NVLink enable a feature called Unified Memory, which lets the CPU and GPU share a single pool of memory and simplifies the programming of games and other applications.

The new chip architecture’s name, “Pascal,” comes from the 17th-century scientist Blaise Pascal, who built one of the first mechanical calculators. Nvidia names its chip architectures after famous scientists.

Huang said that the processing power of Pascal, which will arrive in 2016, will enable “machine learning,” or artificial intelligence that can recognize faces, patterns, and other objects.

“Machine learning experts call this object classification, and they program it using a neural net,” Huang said. “Programming this is an awfully large challenge.”

A human brain has an estimated 100 trillion connections, or synapses: about 100 billion neurons with roughly 1,000 connections each. It can store an estimated 500 million images.

Huang pointed to a computer system dubbed Google Brain, which cost $5 million. It consumes 600 kilowatts and has 1,000 CPU servers, 2,000 CPUs, and 16,000 processing cores. According to Huang, our brains would take 5 million times longer to train to recognize images than one Google Brain.

Nathan Brookwood, an analyst at Insight 64, said he was intrigued by Nvidia’s use of 3D memory for the chip. But he noted that 2016, when Nvidia plans to release the first chips based on Pascal, is still a while away, and plenty of competitors could make similar moves in the meantime.

