
PALO ALTO, Calif. — Richard Socher never set out to place himself on the bleeding edge of artificial intelligence. He merely wanted to blend language and math — two subjects he’d always liked.

But one thing led to another, and he ended up developing influential recursive neural network models for language. Now MetaMind, the startup he founded after leaving university, is launching with financial backing from some serious names.

Socher and his team at the four-month-old startup want to show that MetaMind can analyze images and text with deep learning better than any other available technology. To that end, in addition to announcing an $8 million initial funding round from Khosla Ventures and Salesforce.com chief executive Marc Benioff, MetaMind today is introducing several demonstrations of its technical capabilities on its website.

A type of artificial intelligence, deep learning involves training systems on lots of information derived from audio, images, and other inputs, and then presenting the systems with new information and receiving inferences about it in response. Technology companies like Google and Facebook have been making technical advances and acquisitions in the field, and a few deep-learning startups have appeared.

But Socher thinks that as people try out MetaMind’s technology, they’ll see the startup has advantages, drawing as it does on New York University professor and Facebook employee Yann LeCun’s breakthrough convolutional neural networks for mining pictures as well as Socher’s own recursive neural networks, which have achieved breakthroughs in text processing.

“When we say we’re at the state of the art, we actually mean we run it and compare it to publicly available methods,” Socher told VentureBeat in an interview at MetaMind’s office. “We try to be scientific about those statements.”

As easy as dragging and dropping …

Beyond that, the technology is drag-and-drop simple, meaning that pretty much anyone can do deep learning now.

“You don’t need to be a programmer anymore,” Socher said. As he showed in a demonstration, a person can simply give MetaMind some text to train on and then receive a couple of lines of code that can be plugged into an application. And it doesn’t require a company to build a data center or even start using a public cloud, like Amazon Web Services. MetaMind takes care of it all.

And that’s true for all the demos live on MetaMind’s site. One shows how semantically similar two sentences are. Another shows how positive or negative tweets are about any subject you search for. MetaMind also lets you train a classifier: You upload a spreadsheet full of text with labels, so the system can know what to look for, then you throw additional text at it to perform analysis on the fly.

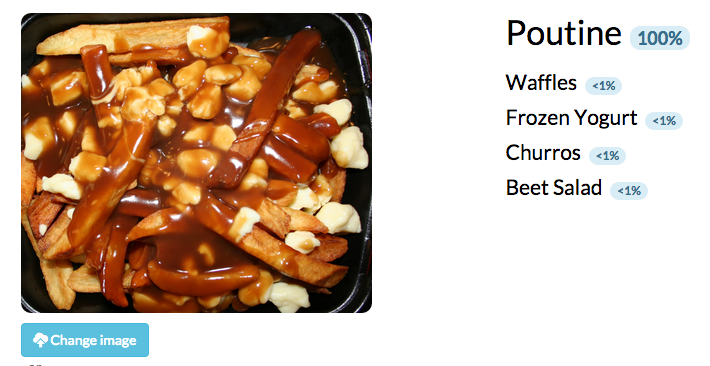

MetaMind can also classify images, as long as there's a relevant set of images to train on. So after it has digested pictures of food, you can drag and drop a picture of, say, a plate of fish and chips, and it can state with high confidence that the image shows fish and chips.

Socher also showed how he could bring up images that were relevant to words he typed in a text field.

… or typing in a few words

He typed the word “bird” on his laptop. In response, the system displayed images, each of which contained a bird. Then he changed the search term to “birds,” and the pictures changed; each one contained multiple birds.

“What’s cool about this is it actually has a sense of compositionality — how words compose the meaning of a longer phrase,” he said.

He typed in “birds on water,” and sure enough, he was shown pictures of birds flying over bodies of water.

Such work draws on more than one type of deep learning. Convolutional neural networks extract visual features after scanning many, many images, while recursive neural networks extract meaning from the words, and the two operate together. Google and Microsoft have each recently announced advances in processing text and images jointly, but Socher was already doing that last year, and a paper about it came out in February.

“We’ve had this technology for a couple of months,” Socher said of the demo.
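To make the bird-search demo a little more concrete, here is a minimal Python sketch of the retrieval step only. It assumes, hypothetically, that a phrase such as “birds on water” and each candidate image have already been mapped into one shared vector space (in the setup described above, the recursive network would produce the phrase vector and the convolutional network the image vectors). The vectors and file names are made up for illustration, cosine similarity is simply one common choice of matching score, and none of this is MetaMind's actual code.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def rank_images(phrase_vec, image_vecs):
    """Return image names sorted from most to least similar to the phrase vector."""
    return sorted(image_vecs,
                  key=lambda name: cosine(phrase_vec, image_vecs[name]),
                  reverse=True)

# Hypothetical 3-dimensional vectors standing in for learned embeddings.
phrase = [1.0, 0.0, 1.0]  # imagine this encodes "birds on water"
images = {
    "bird_on_lake.jpg": [0.9, 0.1, 0.8],
    "cat_on_sofa.jpg": [0.0, 1.0, 0.0],
    "bird_in_tree.jpg": [0.9, 0.2, 0.0],
}

print(rank_images(phrase, images))
# ['bird_on_lake.jpg', 'bird_in_tree.jpg', 'cat_on_sofa.jpg']
```

In a real system the hard part is learning embeddings in which matching phrases and pictures land close together; once they do, search reduces to a nearest-neighbor ranking like this one.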

Socher has come a long way. A native of Germany, he worked with natural-language processing (NLP) in college but felt that not enough math was involved. In graduate school, he did research in computer vision, where there was more math, but that wasn’t perfect, either — it was too specific, he said. Then, for his Ph.D., he came to the U.S. to focus on machine learning at Stanford University. That’s where he saw Prof. Andrew Ng give a talk about deep learning and its applications in computer vision.

“I felt like those were great ideas, but they didn’t quite fit for natural-language processing yet,” he said. “I invented a bunch of new models for deep learning applied to natural-language processing.”

His recursive neural network starts by examining how two adjacent words combine. It then examines how that pair combines with the word to its left, and so on, applying the same operation again and again (hence the term “recursive”) until it has captured all the linguistic components at work in the phrase or sentence. In his models, the order of these merges generally follows the sentence's grammatical structure.

Above: An illustration from Socher’s 2014 dissertation, “Recursive Deep Learning for Natural Language Processing and Computer Vision”
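The merging process described above can be sketched in a few lines of Python. This is a minimal illustration of the basic recursive-network idea, assuming a single shared weight matrix W, a tanh nonlinearity, and a word tree supplied by hand. The vector size, word vectors, and weights are hypothetical and untrained, so the resulting numbers carry no meaning. It is not Socher's or MetaMind's code, and his published models include more elaborate variants of this basic form.

```python
import math
import random

DIM = 4  # hypothetical vector size, kept tiny for illustration

def init_params(dim, seed=0):
    """Random, untrained composition weights W (dim x 2*dim) and bias b (dim)."""
    rng = random.Random(seed)
    W = [[rng.uniform(-0.5, 0.5) for _ in range(2 * dim)] for _ in range(dim)]
    b = [0.0] * dim
    return W, b

def compose(left, right, W, b):
    """Merge two child vectors into one parent vector: p = tanh(W [left; right] + b)."""
    children = left + right  # list concatenation stacks the two child vectors
    return [math.tanh(sum(w * x for w, x in zip(row, children)) + bias)
            for row, bias in zip(W, b)]

def encode(tree, embeddings, W, b):
    """Encode a binary tree of words bottom-up, reusing the same W at every merge."""
    if isinstance(tree, str):  # a leaf is a single word
        return embeddings[tree]
    left, right = tree
    return compose(encode(left, embeddings, W, b),
                   encode(right, embeddings, W, b), W, b)

# Hypothetical random word vectors.
rng = random.Random(1)
embeddings = {w: [rng.uniform(-1, 1) for _ in range(DIM)]
              for w in ["birds", "on", "water"]}
W, b = init_params(DIM)

# ("birds", ("on", "water")): merge "on" + "water" first, then merge "birds"
# (the word to the left) with that result.
phrase_vec = encode(("birds", ("on", "water")), embeddings, W, b)
print(len(phrase_vec))  # 4
```

Because the same W is applied at every merge, one small set of parameters can encode phrases of any length; training would adjust W, b, and the word vectors so that the resulting phrase vectors are useful for a task such as sentiment or image search.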

His models first came out in 2011, and they’ve generated interest among academics. He’s since put out several papers demonstrating the possibilities with recursive neural networks.

He had considered a career in academia, but earlier this year he realized he didn't want to go down that path.

As head teaching assistant for a machine-learning class at Stanford with more than 300 students, Socher saw how people wanted to use the approach on all sorts of data types.

“Every five minutes, you had a new project that was in a completely different space,” he said. “I was, like, wow, machine learning is really going to be huge, and it’s just going to grow in its importance.”

He didn’t want to take any of the job offers he had received from major companies over the years. Instead, he wanted to bring these sorts of technologies to a wide assortment of people and companies.

Starting up a startup

He would need funding in order to build a team that could do this. And he ended up going to Khosla Ventures, where he met Sven Strohband, the venture firm’s chief technology officer. Strohband has joined MetaMind as its chief executive.

Meanwhile, Vinod Khosla, the founder of the venture firm and a cofounder of Sun Microsystems, is serving as an advisor to the startup. So are Benioff and Prof. Yoshua Bengio of the Université de Montréal, one of the leading figures in the deep learning community.

The startup now has 10 employees, and it has already attracted paying customers, Socher said, ranging from small companies to Fortune 500 firms. MetaMind can sell licenses for its systems to run in customers' own data centers, and it can provide ongoing consulting for companies running MetaMind-powered systems.

Use cases could include extracting key signals hidden in financial analysts’ reports, or analyzing chat messages from people seeking customer support from a company. Richer applications could involve making predictions about patients based on radiology images — not unlike fellow deep-learning startup Enlitic.

While MetaMind does have in mind a number of such applications for its technology, the collection of demos suggests that the company is very much open to letting the world tell it what the best applications will be. And maybe that’s all right for such a young startup.

“We believe that this should be more available to lots of people, because we think that there’s lots of uses there,” Strohband said. “People use them for things we couldn’t have anticipated, really, quite frankly.”