
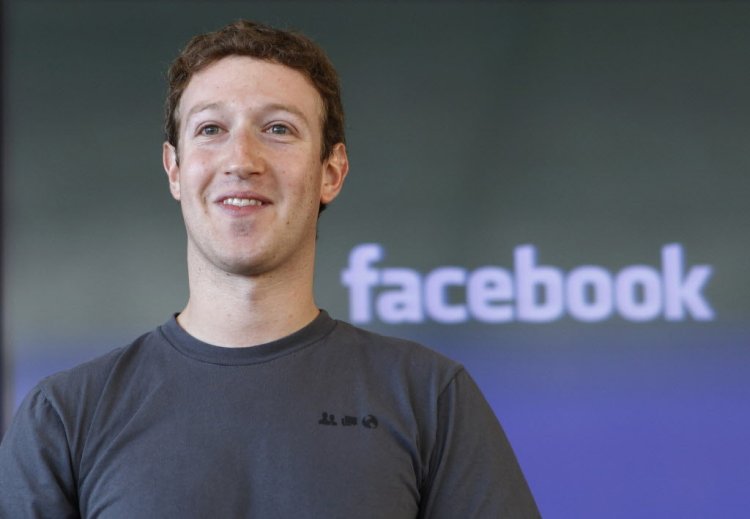

As events continue to unfold in the days following the horrific terrorist attack on the Paris-based satirical magazine Charlie Hebdo, Facebook founder and CEO Mark Zuckerberg has vowed to reject all attempts by extremists to censor content on the social network.

Zuckerberg posted a message to his Page earlier today saying he was once the target of a Pakistan-based extremist who "fought to have me sentenced to death" after Facebook declined to remove content about the Prophet Muhammad that was deemed offensive.

“We stood up for this because different voices — even if they’re sometimes offensive — can make the world a better and more interesting place,” Zuckerberg wrote.

Facebook has been criticized in the past for failing to remove other content deemed offensive, including decapitation videos, though it has since reversed course on some of these policies. But Zuckerberg is adamant that Facebook won’t let extremists influence what content is shared across the world. More from his post:

“Facebook has always been a place where people across the world share their views and ideas. We follow the laws in each country, but we never let one country or group of people dictate what people can share across the world.

Yet as I reflect on yesterday’s attack and my own experience with extremism, this is what we all need to reject — a group of extremists trying to silence the voices and opinions of everyone else around the world.

I won’t let that happen on Facebook. I’m committed to building a service where you can speak freely without fear of violence.”

Charlie Hebdo has been the target of terrorist campaigns dating back to 2011, when its offices were firebombed after it published a spoof edition “guest edited” by Muhammad, which featured a caricature of the Prophet. The magazine has continued to publish Muhammad-related spoofs in recent times, and these are believed to have been the motive behind the latest attack.

Rock and a hard place

Facebook often finds itself caught between a rock and a hard place when it comes to taking down content. On the one hand, if it removes a specific video or other supposedly “offensive” item, it may be accused of censorship. On the other hand, if it doesn’t remove it, it may be accused of facilitating the sharing of deeply sinister material.

In the past, Facebook has issued “enhanced” standards for its takedown policy, which distinguish between offensive content shared responsibly and content shared to glorify a particular cause. So, for example, if someone shared a graphic video that didn’t glorify terrorism and was accompanied by a warning message, it might be kept online. But if they shared content that celebrates violence, it might be removed. It’s all about the context.

Facebook has also had a longstanding battle with moms posting breastfeeding pictures, and it recently hit the headlines again when such a photo was removed from the social network following a complaint. In this instance, Facebook said it had removed the photo in error and updated its policy as a result of the case. Again, “context” is the key word here. The company said: “Facebook modified the way it reviews reports of nudity to better examine the context of the photo or image.”

When it comes to sharing images of the Prophet Muhammad on Facebook, Zuckerberg is adamant that he won’t bow to pressure from extremist groups. But it’s not clear whether such images would be removed if, for example, someone deliberately shared provocative pictures with the express purpose of offending people of the Islamic faith. If it’s all about the context, then under Facebook’s broad guidelines a case could still be made for removing such images in certain situations.