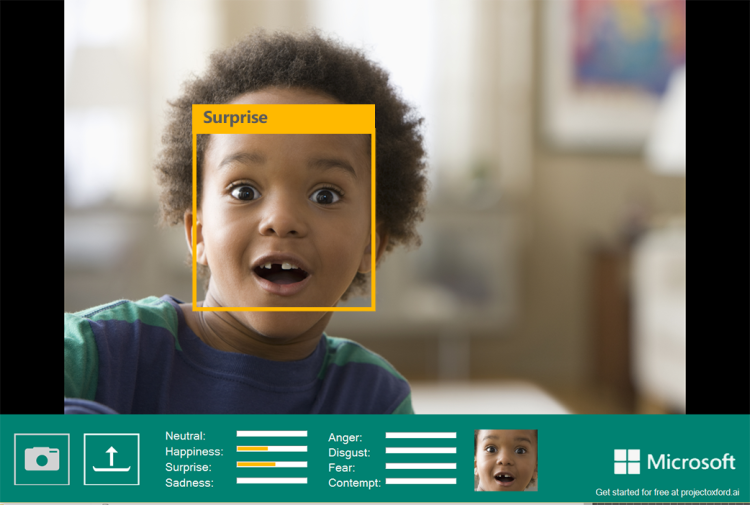

Microsoft today announced several updates to its Project Oxford portfolio of machine learning tools, which are aimed at developers. Microsoft has begun a public beta for a new application programming interface (API) that can identify emotions from expressions on a person’s face in a still image.

The new API can detect anger, contempt, disgust, fear, happiness, sadness, and surprise, as well as a neutral disposition, according to a statement.
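For developers wondering how those eight labels might be consumed, here is a minimal Python sketch. It assumes, purely for illustration, that the service returns one confidence score per label for each detected face; the dictionary shape, the `dominant_emotion` helper, and the numbers are hypothetical and not taken from Microsoft's documentation.

```python
# The eight labels come from the announcement; the per-label score format is an assumption.
EMOTIONS = (
    "anger", "contempt", "disgust", "fear",
    "happiness", "neutral", "sadness", "surprise",
)


def dominant_emotion(scores):
    """Return the (label, score) pair with the highest score for one face."""
    if not scores:
        raise ValueError("no emotion scores supplied")
    unknown = set(scores) - set(EMOTIONS)
    if unknown:
        raise ValueError(f"unexpected emotion labels: {sorted(unknown)}")
    label = max(scores, key=scores.get)
    return label, scores[label]


# Hypothetical scores for a single face (made-up values, not real API output).
example_scores = {
    "anger": 0.01, "contempt": 0.0, "disgust": 0.0, "fear": 0.0,
    "happiness": 0.9, "neutral": 0.05, "sadness": 0.01, "surprise": 0.03,
}

print(dominant_emotion(example_scores))
```

An app could use a helper like this to tag a photo with its strongest detected expression, though the real response format should be checked against the Project Oxford documentation.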

An API that can check spelling — a core function of Microsoft Word — and even accept modern slang and brand names is also available now in public beta.

Later this year, Microsoft will release other capabilities that developers can incorporate into their applications, including facial tracking and motion detection in videos, speech recognition for individuals, and smile prediction.

Microsoft debuted Project Oxford in April at the Build developer conference, drawing on its face APIs to release the How Old Do I Look? app. Last month, Microsoft began a public beta of the Project Oxford Language Understanding Intelligent Service (LUIS), which allows developers to train and deploy models that can figure out what people mean when they use certain words.

The updated face APIs will be able to detect facial hair; MyMoustache.net, the gimmicky website that Microsoft introduced earlier this week, relies on the expanded functionality.

And the Project Oxford computer vision APIs will now be able to classify images into 1,000 categories, a spokesman told VentureBeat in an email.

Find out more about the new APIs on the Project Oxford website.