To paraphrase William Gibson, “The future is already here and, thanks to intelligent assistants (IA), it is more evenly distributed than ever before.”

Responding to our spoken requests, mobile personal assistants (such as Siri and Google Now) answer questions, provide directions, and organize meetings. Their close cousin Alexa, running on the Amazon Echo, understands us when we order pizza, hail an Uber, or place an order with Amazon. Meanwhile, a large set of distant cousins (call them text bots) understand “plain English” requests and can recommend restaurants, movies, or even bridal fashions.

We humans are learning to take control of our digital lives using our own words and the digital devices of our choice. We are also finding that we are more comfortable than ever talking to our devices.

Intelligent assistance reaches its tipping point

According to the “User Adoption Survey” commissioned by predictive speech analytics specialist MindMeld, use of voice-based intelligent assistants has reached a tipping point and is heading toward mass acceptance. More people are talking to their smartphones and making spoken queries in their cars and at home, through TV remotes, wireless speakers (such as the Amazon Echo), and a variety of wearables, all of which is accelerating the adoption of IA.

More than 50 percent of respondents have tried voice assistants, and more than 30 percent are “regular users” (daily or weekly). Fifty percent of those who use the assistants are “satisfied” or “extremely satisfied” with their experience, compared with fewer than 20 percent who say they are dissatisfied. The technology has entered the mainstream, and acceptance is accelerating: 60 percent of respondents said they adopted IA in the past year (40 percent within the past six months). For context, home computers took nearly 30 years to reach 60 percent of U.S. households, and the Internet took 15 years to reach that level.

The Echo effect: Getting an IA ecosystem into homes

Amazon Echo, with its Super Bowl TV commercials, has made a conspicuous splash in the IA world. Using relatively inexpensive wireless speakers as a platform, Amazon turned its investment in speech recognition and lifelike speech synthesis into Alexa, a tour de force in speech-enabled device control, information retrieval, voice search, and ecommerce. After claiming the lion’s share of wireless speaker sales in 2015, Amazon doubled down on the home by introducing two new form factors for the voice-activated Alexa. The $130 Tap is a portable Bluetooth speaker that can also connect over Wi-Fi and offer Alexa’s intelligent voice activation (IVA) services throughout the home. The $90 Dot is a pared-down Echo designed to serve primarily as a “remote outpost” for Alexa, meaning you can place several of them around the house as “voice command” centers.

Amazon is further conditioning the market by adding commerce partners to its Alexa Voice Service, including Uber, Domino’s Pizza, and the music-streaming service Spotify. Amazon is also entering financial services, a promising sign that IA can offer robust security and privacy controls: Capital One credit card holders can ask Alexa to check their balance, pay a bill, or track spending. With close to 65 million cards in circulation, Capital One offers a healthy potential customer base. Ford Motor Company is another partner. The automaker has enabled its Sync service to access and control devices in the so-called Smart Home, so Sync customers can arm or disarm their security systems or turn lights on or off, all under Alexa’s control.

Smaller firms benefit from the Echo effect, too. For instance, the smart assistant EasilyDo powers a number of functions related to calendar appointments and email reading. Integration with Amazon’s IA platform is made possible through the Alexa Skills Kit (ASK), essentially a software development kit (SDK) that lets digital commerce companies make their services Alexa-compatible.

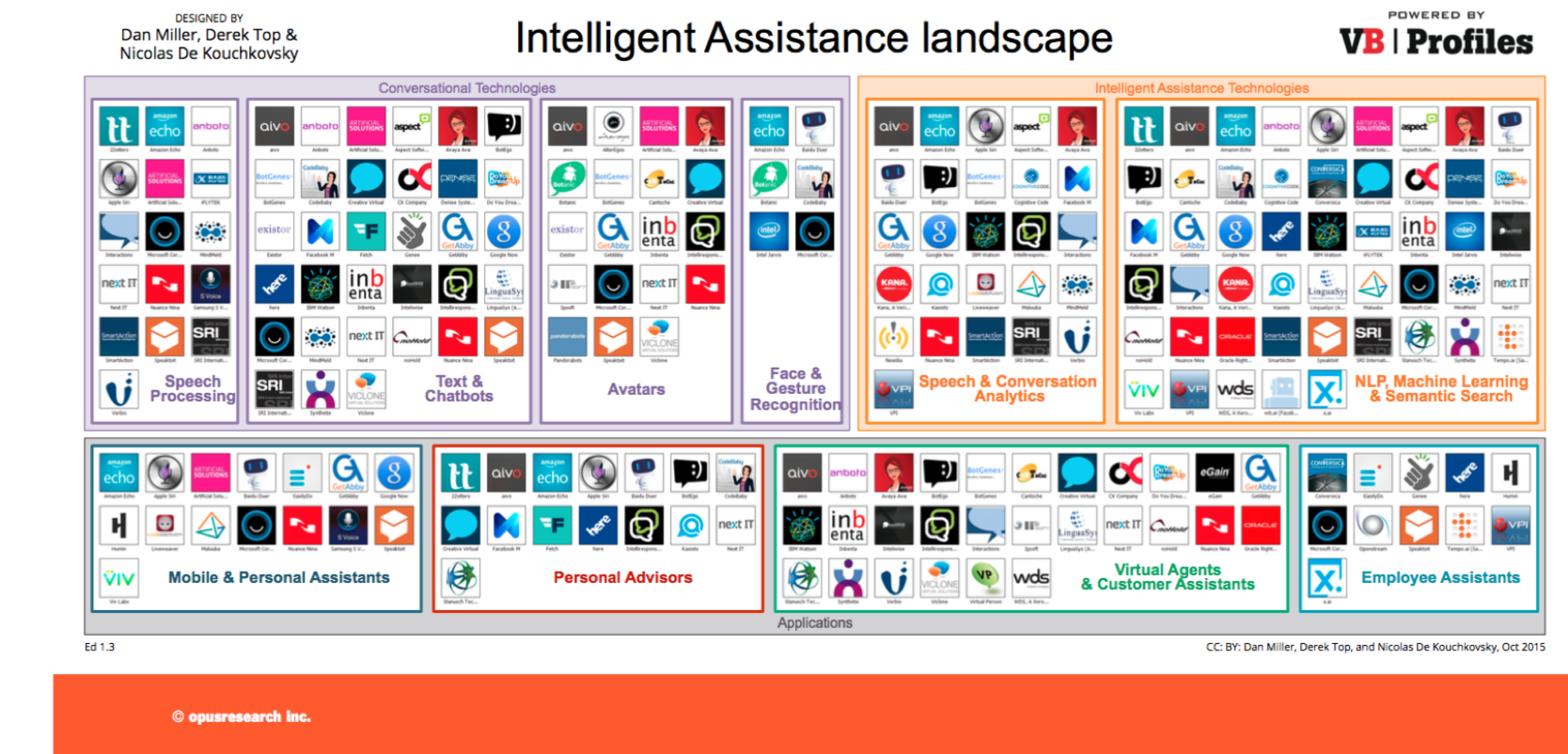

A high-resolution version of the landscape is available here.

Bots everywhere and the growth of conversational commerce

In addition to Echo’s expanding ecosystem, the pool of companies entering the IA field is growing, thanks to messaging platforms like Slack, WeChat, and Facebook Messenger. These new entrants are accelerating the rise of bots for text-based communication. Scheduling bots, like x.ai and Meekan, are natural companions to collaboration platforms. Other tools, like Conversica (sales appointment scheduling and follow-up), offer more specialized benefits to their users. Bots that add deep vertical expertise are the most likely to evolve into “Personal Advisors” that can step in when people need feedback or assistance on both phone and text-based messaging networks. This is where consumers can expect to see a plethora of special-purpose bots ready to book travel, compare and purchase hard-to-find items, calculate mortgage payments, and the like.

Other additions to the IA landscape include two companies aiming to improve and expand the presence of natural language interfaces across the Internet of Things (IoT). One is VoiceBox Technologies, which has been in the Voice User Interface (VUI) business for over a decade and has made significant inroads into the automotive and mobile entertainment domains, with partners or customers that include Toyota, Pioneer, Chrysler, Dodge, Renault, Fiat, and TomTom. The company is poised to rival Alexa and Amazon in the world of “zero UI.”

Semantic Machines is another new addition to the IA pantheon. It raised more than $20 million in venture funding last year from investors like Catalyst and Bain Capital and has since assembled a cadre of top experts in natural language processing, semantic understanding, and conversational computing. The company has embarked on a multiyear effort to build a conversational user interface that truly understands each person’s intent, even as the user jumps from topic to topic or makes the kinds of incomplete or incoherent statements we are all prone to in natural conversation.

Dan Roth, the company’s founder and CEO, leads a team that is tackling some of the longest-standing challenges of human-to-machine conversation. When ready, the technology will be incorporated into offerings from a limited number of partners. In his words, the results will be “breathtaking,” especially for those of us with a deep grasp of the challenges of conversational understanding. For everyone else, talking to an intelligent assistant may feel as routine and comfortable as putting on a well-worn pair of running shoes.

Such routine and comfort will be here soon, as IA acceptance and use continue to accelerate. What started as a novelty and a source of marketing differentiation for a smartphone manufacturer has become the most convenient user interface for the Internet of Things, as well as a plain-spoken yet empathetic controller of our digital existence.

Dan Miller is founder and lead analyst at Opus Research, a market research firm focused on Intelligent Assistance. You can track his Intelligent Assistance Landscape on VBProfiles.com.