Microsoft researchers have come up with a novel way of using artificial intelligence (AI) to get computers to tell stories about what’s happening across multiple photographs. Today the company is publishing an academic paper describing the technology, which could one day power services that are especially useful to the visually impaired. Microsoft is also releasing the photos, captions, and “stories” developed in the research.

The new capability is significant because it goes well beyond identifying the objects in images, or even videos, in order to caption them.

“It’s still hard to evaluate, but minimally you want to get the most important things in a dimension. With storytelling, a lot more that comes in is about what the background is and what sort of stuff might have been happening around the event,” Microsoft researcher Margaret Mitchell told VentureBeat in an interview.

To advance the state of the art in this area, Microsoft relied on people to write captions for individual images, as well as captions for images in a specific order. Engineers then used the information to teach machines how to come up with entire stories to tell about those sequences of images.

The method involves deep learning, a type of artificial intelligence that Microsoft has previously used for tasks like speech recognition and machine translation. Facebook, Google, and other companies are actively engaged in this research area, as well.

In this case, a recurrent neural network was trained on the images and words. Mitchell and her fellow researchers borrowed an approach from machine translation called sequence-to-sequence learning. “Here, what we’re doing is we’re saying that every image is fed through a convolutional network to provide one part of the sequence, and you can go over the sequence to create a general encoding of a sequence of images, and then from that general encoding, we can decode out to the story,” said Mitchell, the paper’s principal investigator.
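The encoder-decoder flow Mitchell describes can be sketched in plain Python. This is a toy illustration under stated assumptions, not the paper’s model: the per-image feature vectors are random stand-ins for convolutional-network outputs, vanilla RNN cells with untrained random weights stand in for whatever recurrent units the researchers actually used, and the vocabulary, sizes, and function names (`encode`, `decode`) are hypothetical. Because nothing is trained, the printed “story” is nonsense; the point is only the shape of the computation: one vector per image goes in, a single encoding comes out, and words are decoded from that encoding one at a time.

```python
import math
import random

# Hypothetical sizes for illustration; not the paper's real dimensions.
FEAT_DIM = 4    # size of each per-image feature vector (stand-in for CNN output)
HIDDEN = 8      # size of the recurrent hidden state
VOCAB = ["<end>", "we", "went", "to", "the", "beach", "party", "had", "fun"]

rng = random.Random(0)  # fixed seed so the sketch is reproducible

def rand_matrix(rows, cols):
    """Small random weights; a trained model would learn these."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def rnn_step(w_in, w_h, x, h):
    """One vanilla-RNN step: h' = tanh(W_in x + W_h h)."""
    a, b = matvec(w_in, x), matvec(w_h, h)
    return [math.tanh(p + q) for p, q in zip(a, b)]

# Encoder weights: read one feature vector per image, in album order.
ENC_IN, ENC_H = rand_matrix(HIDDEN, FEAT_DIM), rand_matrix(HIDDEN, HIDDEN)
# Decoder weights: read the previous word (one-hot), score every word in VOCAB.
DEC_IN, DEC_H = rand_matrix(HIDDEN, len(VOCAB)), rand_matrix(HIDDEN, HIDDEN)
DEC_OUT = rand_matrix(len(VOCAB), HIDDEN)

def encode(image_features):
    """Fold the whole image sequence into one 'general encoding' vector."""
    h = [0.0] * HIDDEN
    for feats in image_features:
        h = rnn_step(ENC_IN, ENC_H, feats, h)
    return h

def decode(encoding, max_len=10):
    """Greedy decoding: start from the encoding, pick the top-scoring word each step."""
    # An all-zero vector stands in for a start-of-story token.
    h, prev, story = encoding, [0.0] * len(VOCAB), []
    for _ in range(max_len):
        h = rnn_step(DEC_IN, DEC_H, prev, h)
        scores = matvec(DEC_OUT, h)
        idx = max(range(len(VOCAB)), key=scores.__getitem__)
        if VOCAB[idx] == "<end>":
            break
        story.append(VOCAB[idx])
        prev = [1.0 if i == idx else 0.0 for i in range(len(VOCAB))]
    return story

# Five fake "CNN features", one per photo in an album.
album = [[rng.uniform(0, 1) for _ in range(FEAT_DIM)] for _ in range(5)]
story = decode(encode(album))
print(" ".join(story))
```

Replacing the random weights with trained ones, and the fake feature vectors with real convolutional-network activations, is what would turn this skeleton into a working storyteller; both steps are outside the scope of the sketch.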

She and her collaborators, some of whom work at the Facebook Artificial Intelligence Research (FAIR) lab, sought to improve the system’s initial output by imposing certain rules. For instance, “the same content word cannot be produced more than once within a given story,” as they write in the paper.
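One way to picture that no-repeat rule is as a filter applied during greedy decoding. The sketch below is a minimal illustration under loud assumptions, not the researchers’ code: it treats a small, hypothetical set of function words (`FUNCTION_WORDS`) as exempt and everything else as a content word, and it uses precomputed per-step word scores, whereas a real decoder’s scores would depend on the words already produced. The function names are likewise hypothetical.

```python
# Hypothetical set of function words that may repeat; the paper's actual
# definition of a "content word" is not reproduced here.
FUNCTION_WORDS = {"the", "a", "and", "we", "to", "of", "was", "it", "<end>"}

def pick_word(scores, used_content_words):
    """Return the highest-scoring word that does not repeat a content word.

    `scores` maps candidate words to model scores for one decoding step.
    """
    allowed = {
        w: s for w, s in scores.items()
        if w in FUNCTION_WORDS or w not in used_content_words
    }
    if not allowed:  # every candidate was banned: stop the story
        return "<end>"
    return max(allowed, key=allowed.get)

def constrained_greedy_decode(step_scores):
    """Greedy decoding over precomputed per-step scores with the no-repeat rule."""
    story, used = [], set()
    for scores in step_scores:
        word = pick_word(scores, used)
        if word == "<end>":
            break
        story.append(word)
        if word not in FUNCTION_WORDS:
            used.add(word)  # content words may appear only once
    return story

# Made-up scores for illustration only.
steps = [
    {"we": 0.9, "beach": 0.5},
    {"went": 0.8, "to": 0.3},
    {"to": 0.7, "beach": 0.2},
    {"the": 0.9, "beach": 0.1},
    {"beach": 0.9, "party": 0.4},
    {"and": 0.8, "beach": 0.1},
    {"then": 0.7, "went": 0.6},
    {"went": 0.9, "to": 0.5},      # "went" already used, so "to" wins
    {"a": 0.8},
    {"beach": 0.95, "party": 0.6},  # "beach" already used, so "party" wins
    {"<end>": 1.0},
]
print(" ".join(constrained_greedy_decode(steps)))
# → we went to the beach and then to a party
```

Function words such as “to” are allowed to repeat, while “went” and “beach” are each blocked the second time the model prefers them, which is the kind of repetition the rule is meant to suppress.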

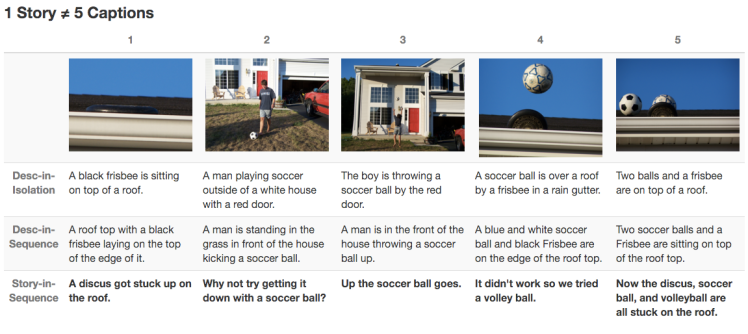

Above: Example stories for the sequence of images shown at bottom.

The final result is language that’s less literal, more abstract, and more engaging. Over time, this kind of language generation could prove valuable: people who aren’t able to see the photos could still get an understanding of what they convey together as a set.

This would be a natural next step after the recent wave of research into identifying objects and people in images and videos for blind users. In fact, Mitchell has recently been exploring that area with Saqib Shaikh, a blind software developer at Microsoft.

Sighted people who are learning a second language could also benefit from visual storytelling, and it could inspire kids to think more creatively about what they see in the world, Mitchell said.

People are increasingly capturing multi-image files with their phone cameras, whether animated, GIF-like Live Photos on iPhones or entire videos. As a result, it will become more important for machines to understand what’s going on across those larger sets of frames; recognizing what appears in each individual frame is no longer enough. Mitchell sees the research heading in that direction, though the team isn’t quite there yet.

“It’s just some simple heuristics, really, but it shows the wealth of information we’re able to pull out from these models,” Mitchell said. “It’s really positive and quite hopeful moving forward.”

See the academic paper for more detail. Microsoft also has an official blog post about the research.
