Advances in digital distribution have changed the game industry as dramatically as they changed music, video, newspaper, and book publishing. Newzoo projects the global video game industry to reach $1.07 billion by 2019.

With so many games living on the Internet or having significant online components, latency is their enemy.

Poor cloud and network performance hurts games in two ways: poor downloads and poor gameplay. We will cover both below. Before we do, let's talk a little about the digital marketplace that we call gaming.

Only a couple of years ago, the digital marketplace was a small portion of the game delivery landscape. The shift of computer game delivery away from packaged goods and toward 24/7 online services has now happened. This has put new pressure on developers and publishers to manage and predict consumer demand so they can ensure positive online gaming experiences.


Measuring success in thousandths of a second

It isn't just the big waits that cause customer dissatisfaction. Even the smallest delays in loading a web page or starting a video can determine a game publisher's ability to acquire new customers.

Consider these facts:

- Online gaming customers are twice as likely to abandon a game when they experience an additional 500 milliseconds of network delay.

- A 2-second delay in load time during a transaction resulted in abandonment rates of up to 87 percent.

- Eighty-one percent of Internet users abandon a page when a video fails to start immediately.

- A 25 percent drop in Google traffic is attributable to a 500-millisecond slowdown.

Latency, the time interval between an action and response during multiplayer gaming, is another area where consumers don’t like to wait. The amount of acceptable latency varies by game type. Academic research on the effects of latency on gameplay in different game genres is instructive.

In a first-person shooter (FPS) like Call of Duty, actions must complete within a tiny deadline, and latency of more than 100 milliseconds can degrade the player's experience. In turn-based or simulation games such as Civilization or The Sims, latency has a less pronounced effect but can still hurt the user experience. Game developers and publishers need to find ways to remove every avoidable millisecond of latency from the entire value chain.

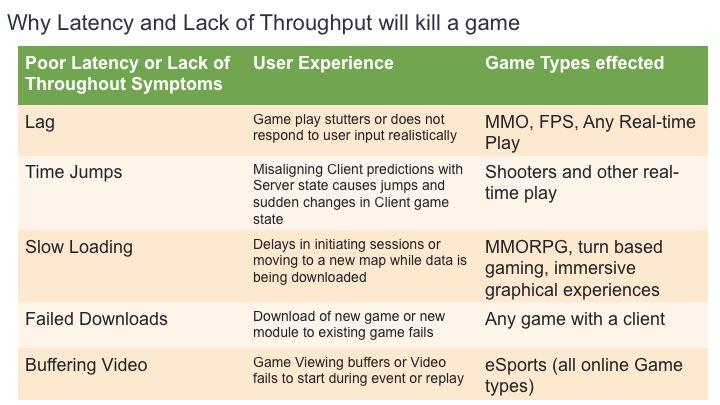

Gaming presents challenges in many areas. Here are a few of the most obvious.

Simply put: when Internet traffic slows the delivery of game state information, players lose an advantage and their immersive experience suffers.

Players will let the world know when traffic is ruining their experience. To have a successful product, providers of intensive gameplay for the vast audiences of massively multiplayer online (MMO) games face the technical challenge of simulating the smooth unfolding of time we experience in the regular world. Worldwide. All day. All night.

That means getting packets to every client on time, no matter the traffic conditions.

But what happens when latency rears its ugly head?

Failed downloads are a huge issue for these digital companies as well. The larger players typically either build and maintain their own download manager or outsource that job to one of the handful of download managers on the market. These products do a good job of handling lost connections and resuming when the connection is restored, but they can only work as well as the content delivery network (CDN) or cloud the asset is coming from. Even with a perfectly performing download manager, download failures are too high.
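The resume-on-reconnect behavior described above can be sketched roughly as follows. This is a minimal illustration, not how any particular download manager works: it assumes the CDN honors HTTP `Range` requests (replying `206 Partial Content`) and that the expected asset size is known in advance, for example from a manifest. The names `http_fetch` and `download_with_resume` are hypothetical.

```python
import time
import urllib.request


def range_header(offset: int) -> dict:
    """HTTP Range header asking for every byte from `offset` to the end."""
    return {"Range": f"bytes={offset}-"}


def http_fetch(url: str, offset: int, sink: bytearray,
               block: int = 64 * 1024, timeout: float = 10.0) -> None:
    """Append the bytes of `url` from `offset` onward to `sink`.

    Bytes are appended block by block, so anything received before a
    dropped connection is kept and the next attempt resumes after it.
    """
    req = urllib.request.Request(url, headers=range_header(offset))
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        # A server that ignores Range replies 200 with the whole file;
        # appending that to a partial buffer would corrupt the asset.
        if offset and resp.status != 206:
            raise RuntimeError("server did not honor the Range request")
        while chunk := resp.read(block):
            sink.extend(chunk)


def download_with_resume(fetch, url: str, expected_size: int,
                         max_stalls: int = 5, backoff: float = 0.5) -> bytes:
    """Keep resuming `fetch` until `expected_size` bytes have arrived.

    Gives up only after more than `max_stalls` consecutive attempts that
    made no progress; any attempt that moved forward resets the counter.
    """
    data = bytearray()
    stalls = 0
    while len(data) < expected_size:
        before = len(data)
        try:
            fetch(url, before, data)
        except OSError:
            pass  # dropped or refused connection: resume from len(data)
        if len(data) > before:
            stalls = 0
            continue
        stalls += 1
        if stalls > max_stalls:
            raise ConnectionError(f"no progress after {max_stalls} retries at byte {before}")
        time.sleep(backoff * stalls)  # linear backoff between stalled attempts
    return bytes(data)
```

A real call would look like `download_with_resume(http_fetch, asset_url, asset_size)`. Passing the fetch function in keeps the retry logic testable, but no amount of client-side retrying fixes a CDN or cloud that is slow or unreachable from the player's network, which is the article's point.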

Lastly, there is a new, emerging entry in the gaming world: esports! All the traditional issues of broadcasting live-streamed events are now part of the gaming continuum. Video start failures, buffering, and all the other video-specific metrics and issues must be addressed.
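To make those video metrics concrete, here is a small sketch that aggregates three commonly used stream-quality numbers from per-session records. Definitions vary between analytics vendors; the formulas below are common formulations rather than a standard, and the `Session` record layout is a hypothetical assumption.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Session:
    """One viewer session of a live stream (hypothetical record layout)."""
    started: bool      # did the first frame ever render?
    startup_ms: float  # play request -> first frame (ignored if not started)
    watch_ms: float    # time spent actually playing video
    stall_ms: float    # time spent rebuffering after playback began


def video_qoe(sessions: list[Session]) -> dict:
    """Aggregate three common stream-quality metrics over many sessions."""
    attempts = len(sessions)
    started = [s for s in sessions if s.started]
    # Video start failure rate: share of play attempts that never showed a frame.
    vsf_rate = (attempts - len(started)) / attempts if attempts else 0.0
    # Rebuffering ratio: stalled time as a share of total viewing time.
    watch = sum(s.watch_ms for s in started)
    stall = sum(s.stall_ms for s in started)
    rebuffer_ratio = stall / (watch + stall) if watch + stall else 0.0
    # Median startup time for sessions that did start.
    startup = [s.startup_ms for s in started]
    median_startup_ms = statistics.median(startup) if startup else None
    return {
        "video_start_failure_rate": vsf_rate,
        "rebuffering_ratio": rebuffer_ratio,
        "median_startup_ms": median_startup_ms,
    }
```

The median is used for startup time so that a handful of pathological sessions does not dominate the figure.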

Real User Measurements as the standard

You cannot fix what you have not measured, so measuring latency and throughput is a high priority within the gaming industry.

Because their products are so technically demanding, game companies quickly realized that latency and throughput are best measured using real user measurements (RUM), not synthetic monitors. If your measurements give you a false sense of security about performance, you will not understand the problem well enough to fix it.

Synthetic measurements often create exactly that false sense of security, and most gaming companies already understand the importance of RUM. What they sometimes fail to understand is the importance of community. Gaming companies typically take RUM measurements just before they assign a player to a game server.
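A client-side version of that pre-assignment measurement might look like the sketch below. It is an assumption-laden illustration, not any studio's actual matchmaker: it times TCP handshakes (roughly one network round trip) to each candidate server and ranks candidates by the median of several probes, so a single outlier does not decide the assignment. The function names are hypothetical, and passing a hostname instead of an IP address would also fold DNS lookup time into the result.

```python
import socket
import statistics
import time


def tcp_connect_rtt_ms(host: str, port: int, timeout: float = 2.0) -> float:
    """Time one TCP handshake to (host, port), in milliseconds."""
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # connection established; close immediately
    return (time.perf_counter() - start) * 1000.0


def pick_server(candidates, probe, samples: int = 5):
    """Return (best_candidate, median_ms), or None if every probe failed.

    `probe(candidate)` returns one RTT sample in ms or raises OSError.
    """
    best = None
    for cand in candidates:
        rtts = []
        for _ in range(samples):
            try:
                rtts.append(probe(cand))
            except OSError:
                continue  # timed out or refused: skip this sample
        if not rtts:
            continue  # unreachable candidate
        med = statistics.median(rtts)
        if best is None or med < best[1]:
            best = (cand, med)
    return best
```

For example, `pick_server([("203.0.113.10", 7777), ("198.51.100.20", 7777)], lambda c: tcp_connect_rtt_ms(*c))` would compare two hypothetical servers. Even with medians, a few samples from one player describe only that player's path at that moment.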

Measuring just before server assignment is a great first step. However, it has some important limitations, including:

- Without a community of RUM providers you are not seeing enough networks on a regular basis to make good decisions.

- RUM is noisy. With only a couple of measurements, or even a couple of hundred, you do not get an accurate sense of how a network/geo combination is performing. Any individual measurement can be far off.

To overcome these limitations, you must build a community of RUM contributors. The problem for any single gaming company is that this requires billions of transactions a day, and no single company has that much traffic. Without adequate measurements, your decision engine is guessing which geo/network combinations have the best connectivity to the various CDNs you are choosing between. Guessing is not a good strategy.
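The "not enough data means guessing" problem can be made explicit in a decision engine. The sketch below pools RUM samples per (geo, network, provider) and refuses to pick a winner when any candidate is under-sampled. The class name, the `min_samples` threshold, and the in-memory lists are illustrative assumptions; a system handling billions of samples a day would use streaming quantile summaries rather than raw lists.

```python
import statistics
from collections import defaultdict


class RumTable:
    """Pooled RUM samples keyed by (geo, network, provider) (hypothetical)."""

    def __init__(self, min_samples: int = 500):
        self.min_samples = min_samples  # illustrative threshold, not a standard
        self.samples = defaultdict(list)  # (geo, network, provider) -> [rtt_ms]

    def add(self, geo: str, network: str, provider: str, rtt_ms: float) -> None:
        self.samples[(geo, network, provider)].append(rtt_ms)

    def best_provider(self, geo: str, network: str, providers):
        """Lowest-median provider for this geo/network, or None.

        Returns None when any candidate has too few samples, because
        comparing against an unmeasured provider would be a guess.
        """
        scores = {}
        for p in providers:
            rtts = self.samples.get((geo, network, p), [])
            if len(rtts) < self.min_samples:
                return None
            scores[p] = statistics.median(rtts)
        return min(scores, key=scores.get)
```

A single company's traffic fills only some cells of this table; a shared community of contributors is what fills the rest.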

Three solutions

There are three ways that architects have historically attempted to solve this problem.

1. Add more servers

2. Add more peering

3. Distribute the load to multiple vendors and locations

Option (1) often fails because adding servers helps only if your servers are overloaded. If they are not, adding more has no effect on latency; all it does is increase your costs.

Option (2) sometimes works because peering is really important to Internet performance. This is why companies such as Riot have such extensive peering networks: they are trying to lower lag on behalf of their players. The problem with this strategy is that it has limits, and the biggest limit is the speed of light. Signals in the fiber in the ground can only travel so fast. All things being equal, a player in Boston will reach a server in NYC faster than a server in San Francisco. This is by no means always the case, but peering can only take you so far. The best solution is a mix of (2) and (3), so let's look at (3) in more detail.
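The speed-of-light limit is easy to put numbers on. The sketch below computes a physical lower bound on round-trip time from great-circle distance, assuming light in optical fiber travels at roughly 200,000 km/s (about two-thirds of its speed in a vacuum). Real fiber routes are longer than the great-circle path and add switching and queuing delay, so actual RTTs will be higher; the city coordinates are approximate.

```python
import math

FIBER_KM_PER_S = 200_000  # approximate speed of light in glass fiber
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance on a spherical Earth."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km, speed_km_s=FIBER_KM_PER_S):
    """Lower bound on RTT: out and back at fiber speed, no detours or queuing."""
    return 2 * distance_km / speed_km_s * 1000


BOSTON = (42.3601, -71.0589)
NYC = (40.7128, -74.0060)
SAN_FRANCISCO = (37.7749, -122.4194)

if __name__ == "__main__":
    for name, city in [("NYC", NYC), ("San Francisco", SAN_FRANCISCO)]:
        km = great_circle_km(*BOSTON, *city)
        print(f"Boston -> {name}: {km:.0f} km, at least {min_rtt_ms(km):.1f} ms RTT")
```

From Boston, the floor works out to about 3 ms to NYC and about 43 ms to San Francisco. No peering arrangement can push the West Coast number below that floor, which is why distributing infrastructure across locations matters.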

Best practices for latency reduction

When faced with an availability or performance problem, the best practice for the IT architect is to scale the infrastructure by adding other providers or, at minimum, other nodes. If you are using CDNs for delivery, it is a best practice to add a second CDN to ensure your downloads happen on time, every time.

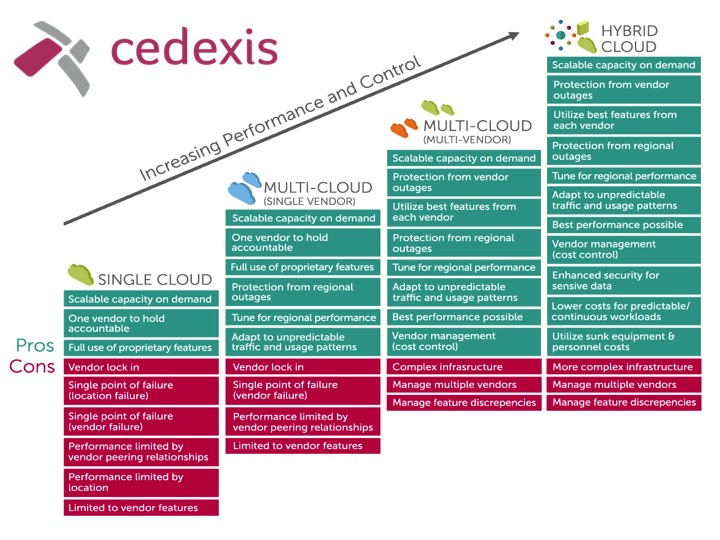

If you are using clouds for your game, it is very typical to add a separate regional instance of your cloud and direct traffic there when it performs best. At Cedexis we have described this evolution as the “Cloud Maturity Model,” and it works like this:

In the past, it was enough to have a second cloud instance reserved as a failover. This is called an “Active-Passive” scenario. Typically, the architect would set up an alert for any problems, and traffic could be made to fail over automatically to the passive node. There are two problems with this scenario.

- Traffic is untested on the secondary cloud, so there is a risk of sending traffic somewhere it might not perform well.

- The location and peering of the secondary cloud may not be optimal for the traffic.
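A minimal Active-Passive controller shows where the first problem comes from. In this sketch, which is a hypothetical illustration rather than any vendor's failover logic, all traffic goes to the primary until it fails a configurable number of consecutive health checks. The secondary receives no real traffic before that moment, so its performance is effectively unknown when it is needed most.

```python
class ActivePassive:
    """Route everything to the primary until it fails N health checks in a row."""

    def __init__(self, primary: str, secondary: str, fail_threshold: int = 3):
        self.primary = primary
        self.secondary = secondary
        self.fail_threshold = fail_threshold  # hypothetical tuning parameter
        self.consecutive_failures = 0
        self.failed_over = False

    def report_primary_health(self, healthy: bool) -> None:
        if healthy:
            self.consecutive_failures = 0
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.fail_threshold:
                # Stays failed over until an operator resets it.
                self.failed_over = True

    def route(self) -> str:
        # Until failover, the passive node sees zero production traffic.
        return self.secondary if self.failed_over else self.primary
```

Requiring several consecutive failures avoids flapping on a single missed check, at the cost of a few seconds of degraded service before failover.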

Because of these drawbacks of Active-Passive, architects have started using an Active-Active strategy for traffic management. An Active-Active multicloud architecture improves availability: when cloud vendors have outages in one or more of their regional locations, traffic can be diverted to clouds (or data centers) that are still available.

But what about performance? Can performance-based global traffic management, which routes traffic to the best-performing clouds, mitigate congestion issues? The answer is yes. A multicloud strategy can lower overall latency if it can take advantage of monitoring that shows which cloud performs best from each network and geography that users are playing from.
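Performance-based Active-Active routing combines the two ideas above: filter by availability, then rank by measured latency for the requester's geo and network. The sketch below is a simplified assumption of how such a decision could work, not Cedexis's algorithm. The median RTT table is assumed to come from pooled RUM data, and when no measurements exist for a geo/network pair it falls back to a static preference order.

```python
def route_active_active(geo, network, clouds, available, median_rtt_ms):
    """Pick the lowest-latency cloud that is currently up.

    clouds        -- candidate names in static preference order
    available     -- set of clouds currently passing health checks
    median_rtt_ms -- {(geo, network, cloud): median RTT in ms} from RUM data
    Returns None when nothing is available.
    """
    live = [c for c in clouds if c in available]
    if not live:
        return None
    measured = [c for c in live if (geo, network, c) in median_rtt_ms]
    if not measured:
        # No RUM data for this geo/network: fall back to preference order.
        return live[0]
    return min(measured, key=lambda c: median_rtt_ms[(geo, network, c)])
```

Because every cloud carries real traffic in this model, each one keeps generating fresh measurements, which addresses the "untested secondary" problem of Active-Passive.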

But doing this, as discussed above, requires billions of measurements from every network/geo combination to every piece of infrastructure in use (clouds, CDNs, or private data centers). These measurements must be available to the global traffic management engine in real time.

Even this is not enough for an industry that demands flexibility and scalability. What is also needed is the ability to consume this service as an API that allows the gaming client to be an informed participant in this decision tree.
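As an illustration of a client taking part in the decision, the sketch below parses a ranking returned by a traffic-management API and lets the client override the community median with its own fresh measurement where it has one. The JSON shape (`ranking`, `endpoint`, `median_rtt_ms`) and the `top_k` shortlist are hypothetical assumptions, not a real product's API.

```python
import json


def choose_endpoint(api_body: str, local_rtt_ms: dict, top_k: int = 3) -> str:
    """Pick an endpoint from an API ranking, informed by local probes.

    api_body     -- JSON like {"ranking": [{"endpoint": ..., "median_rtt_ms": ...}]}
                    (hypothetical shape), best first, at least one entry
    local_rtt_ms -- the client's own recent RTT samples per endpoint
    """
    ranking = json.loads(api_body)["ranking"]
    shortlist = ranking[:top_k]  # only consider the service's top candidates

    def score(entry):
        # Prefer the client's own fresh measurement; fall back to the community median.
        return local_rtt_ms.get(entry["endpoint"], entry["median_rtt_ms"])

    return min(shortlist, key=score)["endpoint"]
```

The community data narrows the field; the client's own view of its current connection breaks ties or catches a local problem the aggregate cannot see yet.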

In the end, the gaming industry is perhaps the most demanding major vertical doing business on the Internet today. Other industries can learn from how this vertical is forced to solve these challenging problems.

As a product evangelist at Cedexis, Pete Mastin has expert knowledge of content delivery networks (CDN), IP services, online gaming, and Internet and cloud technologies.

VentureBeat's mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.