It takes a lot of computing power to properly render a virtual reality scene. But researchers at graphics chip maker Nvidia say they have been able to cut the amount of processing required by a factor of two to three. That could make VR less expensive to produce and help it take off in consumer markets more easily.

The Nvidia research team will unveil the technique at the upcoming Siggraph graphics technology event in Anaheim, Calif., next week. In an interview with GamesBeat, Nvidia vice president of research David Luebke described how a team of five researchers was able to make the processing more efficient over the past nine months.

Nvidia calls it “perceptually based foveation,” which is a fancy way of saying it cuts out some of the processing related to your peripheral vision in VR.

“By understanding the limits of human vision, we can focus the processing where it matters,” Luebke said.

Creating VR imagery is very demanding, as it requires graphics hardware to render one image for each eye at a rate of 90 frames per second, compared with one image at about 30 or 60 frames per second on an ordinary computer display. Making the leap from a computer display to VR takes about seven times the processing power, Luebke said.
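To see roughly where a figure like seven-fold could come from, here is a back-of-the-envelope sketch in Python. The frame rates and the two-images-per-frame count come from the paragraph above; the pixel resolutions are illustrative assumptions and are not stated in the article.

```python
def pixels_per_second(width, height, fps, images_per_frame=1):
    """Raw pixel throughput for a display: pixels per image x images per second."""
    return width * height * fps * images_per_frame

# Frame count alone: two images at 90 fps versus one image at 30 or 60 fps.
images_vs_30fps = (2 * 90) / 30   # 6.0
images_vs_60fps = (2 * 90) / 60   # 3.0

# ASSUMED resolutions (not from the article), chosen only for illustration:
# a 1920x1080 monitor at 30 fps, and a headset rendered at 1512x1680 per eye.
monitor = pixels_per_second(1920, 1080, 30)
headset = pixels_per_second(1512, 1680, 90, images_per_frame=2)

ratio = headset / monitor
print(f"images per second: {images_vs_60fps:.0f}x to {images_vs_30fps:.0f}x")
print(f"pixels per second under the assumed resolutions: {ratio:.2f}x")
```

Counting images alone gives a three- to six-fold gap, so the rest of the difference would have to come from how many pixels each image contains. Under the assumed resolutions the pixel throughput works out to a little over seven times that of the monitor.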

“VR is so much more demanding than ordinary PC gaming,” he said.

But graphics chips are already sort of smart. Instead of rendering an entire scene, they can calculate which objects are in view and render only what a player can see on the screen. An existing technique called “foveated VR” goes a step further, rendering full detail only where you are looking and less detail in the peripheral regions. The foveation leaves parts of the image blurry.
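The idea of rendering only what is in view can be sketched with a simple cone test. This is a simplified illustration, not how any particular GPU or engine does it: real renderers typically test against the planes of a view frustum and also discard hidden surfaces. The function name `in_view` and its parameters are hypothetical.

```python
import math

def in_view(camera_pos, view_dir, obj_pos, fov_degrees):
    """Return True if obj_pos lies inside a viewing cone of the given full angle.

    Simplification: a cone around the view direction stands in for the
    rectangular view frustum that real renderers use.
    """
    to_obj = [o - c for o, c in zip(obj_pos, camera_pos)]
    dist = math.sqrt(sum(v * v for v in to_obj))
    if dist == 0.0:
        return True  # object sits at the camera position
    dir_len = math.sqrt(sum(v * v for v in view_dir))
    # Cosine of the angle between the view direction and the object direction.
    cos_angle = sum(a * b for a, b in zip(to_obj, view_dir)) / (dist * dir_len)
    return cos_angle >= math.cos(math.radians(fov_degrees / 2))

camera = (0.0, 0.0, 0.0)
forward = (0.0, 0.0, -1.0)
objects = {
    "globe": (1.0, 0.0, -5.0),   # slightly right of center, in front
    "lamp": (10.0, 0.0, -1.0),   # far off to the side
    "door": (0.0, 0.0, 5.0),     # behind the camera
}
visible = [name for name, pos in objects.items()
           if in_view(camera, forward, pos, fov_degrees=90)]
```

Only the objects that pass the test would be sent on for rendering; in this made-up scene that is the globe alone.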

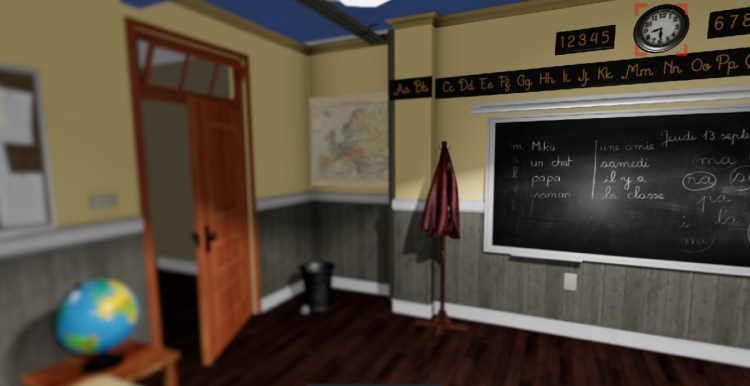

Above: See how blurry the globe is? That’s perceptually based foveation.

But in this case, Luebke said the researchers went further and analyzed peripheral vision and how the eye works. In short, they figured out what they needed to render on the periphery and what they could toss out. The result was a two- to three-fold reduction in rendering work.

“This really turned out to be a cross-disciplinary problem on how you do real-time rendering,” Luebke said. “We had to understand the human eye.”

It turns out that human eyes can detect things on the side, like flickers. Luebke said researchers call this the “tiger detector,” which helps with human survival when a predator comes from the side. But if there is no real flicker, then the eye won’t notice it. So the researchers figured out what they needed to render on the sides and what they didn’t.

And despite this kind of reduction in rendering, players never notice because their eyes are focused on the middle of the screen most of the time. Luebke said it’s still pretty complex to figure this out.

“The GPU has to prioritize which pixels you shade, and you design the GPU to exploit that,” he said.
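One generic way to picture prioritizing pixels is to give each pixel a shading rate that drops with its angular distance from the point of gaze. The sketch below is an illustration of that general idea only, not Nvidia's method, which the article says goes beyond simple blurring. Every constant in it (the 10-degree full-detail radius, the 40-degree cutoff, the 25 percent minimum rate) is an assumption picked for the example, and eccentricity is approximated as proportional to on-screen distance from the gaze point.

```python
import math

# All three constants are ASSUMPTIONS for illustration, not values from the research.
FOVEA_RADIUS_DEG = 10.0   # full shading rate inside this angle from the gaze point
PERIPHERY_DEG = 40.0      # minimum shading rate beyond this angle
MIN_RATE = 0.25           # fraction of pixels shaded in the far periphery

def shading_rate(eccentricity_deg):
    """Fraction of pixels to shade at a given angle from the gaze point."""
    if eccentricity_deg <= FOVEA_RADIUS_DEG:
        return 1.0
    if eccentricity_deg >= PERIPHERY_DEG:
        return MIN_RATE
    # Linear falloff between the two radii.
    t = (eccentricity_deg - FOVEA_RADIUS_DEG) / (PERIPHERY_DEG - FOVEA_RADIUS_DEG)
    return 1.0 + t * (MIN_RATE - 1.0)

def average_shading_rate(width, height, fov_deg, gaze=(0.5, 0.5)):
    """Average shading rate over an image, with gaze in normalized coordinates."""
    total = 0.0
    for y in range(height):
        for x in range(width):
            dx = (x + 0.5) / width - gaze[0]
            dy = (y + 0.5) / height - gaze[1]
            # Crude approximation: angle grows linearly with screen distance.
            total += shading_rate(math.hypot(dx, dy) * fov_deg)
    return total / (width * height)

avg = average_shading_rate(64, 64, fov_deg=100.0)
print(f"average shading rate: {avg:.2f} (1.00 means every pixel is shaded)")
```

The lower the average, the less shading work per frame. The hard part the researchers describe is choosing a falloff that viewers do not notice, which a fixed linear ramp like this one does not address.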

The new kind of rendering might one day lead to changes in graphics hardware or software, but that wasn’t the principal aim of the research.

Nvidia Research has about 100 scientists with doctorates who focus on graphics, computer vision, artificial intelligence, chip architecture, networking, and other topics.
