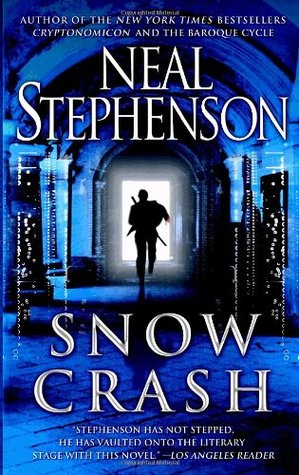

I hear a lot of speeches. But Tim Sweeney, the CEO of Epic Games and a longtime graphics guru, stitched together the best vision I’ve heard in a while at last week’s VRX virtual reality conference in San Francisco. Sweeney talked about how to build the open Metaverse, the virtual world envisioned by Neal Stephenson in the novel Snow Crash in 1992. That novel’s vision of a pervasive cyberspace where we live, work, and play has inspired many a startup, from Second Life maker Linden Lab to Stephenson’s current employer, augmented reality glasses maker Magic Leap.

I wrote about Sweeney’s speech in last week’s DeanBeat. Many people have tried and failed to build the Metaverse, and in the past week alone I came across two new companies invoking the name in their own pitches. But Sweeney’s vision took in everything from virtual economies and blockchain to platform control and Metaverse file formats. He predicted how realistic avatars, such as the one Epic showed earlier this year, will mesh with other technologies to let us build the ubiquitous cyberspace. He has also thought a lot about its ramifications.

I interviewed him after he gave the speech. I also bounced Sweeney’s predictions off another speaker, Tim Leland, vice president of technology management at mobile technology manufacturer Qualcomm. Sweeney told me he had set the Metaverse aside for years, particularly after early virtual worlds failed to take off, but he has given it serious thought lately as VR has come back.

Here’s an edited transcript of our interview.

Above: Tim Sweeney, CEO of Epic Games, at the VRX event.

GamesBeat: The Metaverse has been on everyone’s mind ever since Snow Crash. How far back would you say you started thinking about this more seriously, though, or more practically?

Tim Sweeney: It’s one of those ideas that people brushed off for a very long time, because experiences like Second Life didn’t take off on a large scale. Very simple online systems like Facebook did take off, even though we had 3D graphics capability. So we just forgot about it for a long time, 15 years or so. When VR came back and we started to see the capabilities of things like realtime motion capture, it became clear that we were just a few years away from being able to capture human motion and human emotions and convey them in an interactive experience in a way that’s very close to reality.

GamesBeat: It seems like you’ve never been that satisfied with human animation in games. You try your best to improve it every generation, but making it good enough to fool people always seemed like a far-off goal. That seems to be changing as well.

Sweeney: Right. The pixel rendering part of human rendering is fairly straightforward. Give us enough computing power and a fast enough GPU from Nvidia and we can do it in a few years. The motion and emotion part of it is much harder. But VR has a huge advantage in that we don’t have to write artificial intelligence that simulates a human. We simply need to scan what a human is doing, transport it across the internet, and reconstruct it on the other side. That’s clearly within reach. It’s a much easier problem to solve than the AI problem. It’s already being done with very low-fidelity approximations. Within five years it’ll be amazingly realistic.

GamesBeat: Then we need to be able to capture everybody’s movements and facial expressions. Are we making progress there as well?

Sweeney: There are lots of specialized companies dealing with the hardware sensor and software processing side. We have a variety of sensors, cameras, and depth cameras that can pick up signals to feed into algorithms based on deep learning. Tesla’s self-driving cars are already doing this in real time in some fairly complex scenarios. They measure the movement of other cars around them on the road. Redirecting that technology to humans will be a much easier problem. I think it’ll be solved over the next several years, although it’ll take a lot of companies working in partnership to do it. No one company has everything.

GamesBeat: Some of these things still seem pretty far off, like the idea of sunglasses with 8K per eye. Do you think that’s viable within the time frame you want?

Sweeney: It’s coming quickly. For more than a year, Sony’s been shipping a smartphone with a 4K display. It’s an LCD rather than an OLED, so it’s not immediately applicable to VR, but give me a 4K OLED and really quickly you’ll have prototype VR displays with double their current resolution. All those components are designed for smartphones and retrofitted to VR. When the first wave of custom products designed just for VR arrives, you’ll have much smaller displays, much lighter weight, and much lighter lenses supporting them. That’ll enable us to get up to 8K per eye within five, six, seven years.

GamesBeat: That’s when all these things start to come together.

Sweeney: If you look at the chips Intel is manufacturing, they have no problem putting two billion transistors on a single chip. For 8K per eye you need 640 million, a relatively small number. It’s coming. It’s just a matter of manufacturing. These will be small, consumerized, highly economical chips.

GamesBeat: It sounded like you don’t need to pull that many things together to start the Metaverse: the internet, something like Facebook, the human animation solution.

Sweeney: Yeah, human animation sensing, capture, and rendering, which we’re doing today.

GamesBeat: And then places to go.

Sweeney: The user-created digital content economy around it is coming. It’ll take years to develop, and we don’t know what the Metaverse equivalent of a website would be. Is it a café, where you walk in and do something with another person? Is it a game parlor? We don’t know all the rules exactly, but we can start building it now. We’ll try lots of different things. Hundreds of people will try thousands of possibilities and some of them will work.

GamesBeat: Instead of a closed platform, you would like an open protocol. What are some of the steps toward doing that?

Sweeney: We need several things. We need a file format for representing 3D worlds. There are several available now. The Pixar Universal Scene Description format is one. The Alembic Material Description Format is another. These could be used as a standard for representing 3D content. You need a protocol for exchanging it, which could be HTTPS or something like the InterPlanetary File System, which is decentralized and open to everyone. You need a means of performing secure commerce, which could be the blockchain, and you need a realtime protocol for sending and receiving positions of objects in the world and facial motion. If you look at Unreal and Unity today, we have networking protocols that support multiplayer gaming.

We’re several iterations away from having the remaining components for the Metaverse. They’re all similar enough that a common denominator could be identified and standardized, just like HTTP was standardized for the web.
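To make the last item on that list a little more concrete, here is a minimal Python sketch of what a single realtime update for one object’s position and facial motion might look like on the wire. Everything in it is a hypothetical illustration: the byte layout, the `PoseUpdate` class, the `encode`/`decode` helpers, and the choice to represent facial motion as a list of blendshape weights are assumptions for this example, not Unreal’s or Unity’s actual networking protocols or any proposed Metaverse standard.

```python
import struct
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical fixed header, little-endian, no padding:
#   object_id (uint32), timestamp_ms (uint64),
#   position x, y, z (3 x float32), rotation quaternion x, y, z, w (4 x float32),
#   blendshape count (uint16)
# followed by `count` float32 facial blendshape weights.
HEADER = struct.Struct("<IQ3f4fH")


@dataclass
class PoseUpdate:
    object_id: int
    timestamp_ms: int
    position: Tuple[float, float, float]
    rotation: Tuple[float, float, float, float]
    blendshapes: List[float]  # assumed facial-motion encoding, one weight per blendshape


def encode(update: PoseUpdate) -> bytes:
    """Pack one update into the hypothetical binary layout described above."""
    header = HEADER.pack(
        update.object_id,
        update.timestamp_ms,
        *update.position,
        *update.rotation,
        len(update.blendshapes),
    )
    weights = struct.pack(f"<{len(update.blendshapes)}f", *update.blendshapes)
    return header + weights


def decode(data: bytes) -> PoseUpdate:
    """Unpack bytes produced by encode() back into a PoseUpdate."""
    fields = HEADER.unpack_from(data, 0)
    count = fields[9]
    weights = struct.unpack_from(f"<{count}f", data, HEADER.size)
    return PoseUpdate(
        object_id=fields[0],
        timestamp_ms=fields[1],
        position=tuple(fields[2:5]),
        rotation=tuple(fields[5:9]),
        blendshapes=list(weights),
    )


if __name__ == "__main__":
    msg = PoseUpdate(7, 1000, (1.0, 2.0, 0.5), (0.0, 0.0, 0.0, 1.0), [0.25, 0.75])
    data = encode(msg)
    print(len(data))  # 42-byte header + 2 weights x 4 bytes = 50
    assert decode(data) == msg  # values chosen to be exact in float32
```

A compact fixed header like this is one plausible design when updates go out many times per second; a real standard would also have to settle questions this sketch ignores, such as versioning, compression, and how clients agree on what each blendshape index means.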

GamesBeat: Do you see enough force being applied to get companies to come to the table for this? There are a lot of very powerful companies that want this to be closed, or that have historically been closed – Apple, Facebook, Google.

Sweeney: By and large, nobody saw the social network having as large an effect on society as it has had today. People were kind of tricked into signing up with these walled gardens without recognizing the long-term ramifications. There was this one-time opportunity to do that while these sites were in their formative stages.

This time around, everyone realizes that there are 3.5 billion people on the internet who are going to be connected to the Metaverse in the future. Every company—well, most companies would probably prefer to have it be their own closed proprietary thing, but everyone’s second choice is an open system, as opposed to somebody else’s closed system. I think there’s enough recognition and realization of the risks of lock-in and the importance of this coming platform that we’ll be able to get companies to work together collaboratively and do this.

Just as the industry came together through Khronos to standardize graphics APIs like OpenGL, and is now coming together to standardize a VR API, as Khronos announced yesterday, I think that over time we’ll work with all of our partners and competitors to come up with a standardized framework. Everybody can participate, and everybody is going to recognize pretty quickly that it’s better to have a system we can all play in than to make a moon shot, close it down, and try to keep all the billions of dollars for ourselves.

Above: Microsoft CEO Satya Nadella at the Windows 10 reveal earlier this year.

GamesBeat: Do you have any update on your tangling with Microsoft to get Windows to be more open?

Sweeney: Microsoft has done a lot of great things with UWP lately. They’ve opened up the installation APIs, so any new version of Windows 10 can install a UWP application from any source. You can buy them from the Windows Store, but that’s not the only place. They’ve done almost everything I would have wanted or envisioned as far as openness, except for promising the industry that they won’t close it down in the future.

That’s my one worry about the Windows platform. Through Windows 10’s forced auto-update process, Microsoft has given itself the ability to close things down at any point. Either Microsoft needs to assure the industry that it will keep the platform open, so we can rely on it and invest more heavily in new technologies like UWP and Windows Holographic, or, if it isn’t willing to do that, the industry needs to refuse to get on board with things Microsoft could close down and keep using Win32, OpenVR, and Oculus VR. We need to avoid going down a path where we might find the doors slamming shut behind us.

GamesBeat: Qualcomm announced that they’ll make these ARM-based 48-core server chips to compete with Intel in the data center. Qualcomm and Microsoft are announcing they’re going to make Snapdragon chips that can run desktop and laptop Windows. That’s a big change. They’re unlocking the platform from x86.

Sweeney: Porting to new CPU architectures is very valuable, especially to CPUs that deliver much more computation per watt. That’s interesting. As software scales up to be more multithreaded, those architectures could eventually win. I remember Intel’s Larrabee experiment was an extreme attempt in that direction. It was an idea before its time, but it’ll come back.

GamesBeat: These small bits are steps toward the kind of world you eventually want, I suppose, something far more open.

Sweeney: I’ve reckoned with this idea that all game developers and publishers are constantly going to have to fight to keep things open. We’ll make business decisions that are painful in the short term in order to stand up and keep our freedom in the long term. With enough awareness of this I think we’ll be successful. Open platforms are much better and they’ll ultimately win.

Above: Is Minecraft the Metaverse?

GamesBeat: The other part of the Metaverse you’ve started thinking through is the idea of a virtual economy. You mentioned that AR glasses would consume a lot less material than TVs, making for a more sustainable display ecosystem. A virtual economy in general would also be more sustainable than a physical one.

Sweeney: Right. You don’t need iron mines or oil wells to make virtual goods. As more and more people are automated out of the economy through robotics and self-driving cars and other technologies, there will be a way to create value for other human beings online. There will be a virtual economy for exchanging value, goods and services, entertainment experiences and all that. It’ll be really interesting.

If you look at the kind of transactions that occur just as people play games, accumulate virtual items, use them—you’ve always seen, especially in high-end online games, that there’s an arbitrage between time and money. People who have a lot of free time can play these games without spending much money and accumulate virtual goods. People who don’t have much time can spend money to obtain those virtual goods. People putting money into the economy is an opportunity for people who have more time to take it out. A kind of Star Trek quality to the economy could develop out of that. Instead of government transfer schemes, welfare payments or a basic income, we can find great gainful opportunity for everybody in these worlds.

The proprietors of these worlds have to be open to that, a larger social solution to our problems, rather than just trying to scam everybody for as much money as quickly as possible, the way the free-to-play mobile economy is going right now.

Above: There’s a fight for the control over the Metaverse.

GamesBeat: Is there a way to screw up the Metaverse? Or can you safeguard it so that it happens the way you want it to happen?

Sweeney: We need to be very patient. A lot of companies are doing experiments along these lines. We have to realize it’s going to take many attempts before we find the key combination of elements that makes it all work. In two years, when everybody’s downloaded different versions of the Metaverse from different companies and found that they all suck, I wouldn’t give up at that point. We need to continue to look for the magic.

Keep in mind, the web existed for almost a decade before social networks became pervasive. Even though the technology was available to make a social network in around 1995, it simply didn’t happen until somebody had the idea to do it, do it big, and do it with a certain level of quality, a decade later. We’re not just limited by technology, but by our ideas and our experimentation and how quickly we can try things.

GamesBeat: I’ve been struck lately by how much the walls are coming down between science fiction and what’s technically possible, and how games play a part in that. There seems to be this inspiration cycle going on between games and tech and sci-fi. You’re a perfect example.

Sweeney: With VR every week we see something new that we hadn’t thought of. Here’s a company over there showing off a virtual dog. You have your VR hands and you go pet the dog. There’s no gameplay to it. You’re just interacting with this dog, and it’s awesome. There are hundreds of ideas like that. When we figure out how to put them all together into something big, it’ll be completely different from anything we have now.

GamesBeat: Do you think some of these other big things are going to happen, like the AI singularity?

Sweeney: I don’t know. If you look at what’s happening with deep learning, we’ve only got the very bottom elements of the pipeline. They’re able to simulate parts of your visual cortex, processing images and recognizing very basic patterns. But nobody has the slightest clue how to do cognition, your higher-level brain functions. Deducing complex conclusions from a huge set of data, nobody can predict when that’s coming. It could be 15 years out, or it could be lifetimes. We simply haven’t invented the algorithms to do that. Anybody who says you can extend deep learning to magically do that hasn’t done their homework and tried to fill in the steps.

VentureBeat's mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.