Companies pursuing on-premises accelerated computing solutions will soon have new choices in providers, courtesy of Nvidia. The Santa Clara company today announced the DGX-Ready Data Center program, which gives customers access to datacenter services through a network of colocation partners.

Tony Paikeday, director of product marketing for Nvidia DGX, said the new offering is aimed at organizations that lack modern datacenter facilities. They get no-frills, “affordable” deployment of DGX reference architecture solutions from DDN, IBM Storage, NetApp, and Pure Storage without having to deal with facilities planning — or so goes the sales pitch.

“Accelerated computing … systems [are] taking off,” he said in a statement. “Designed to handle the world’s most complex AI challenges, the systems have been rapidly adopted by a wide range of organizations across dozens of countries.”

The DGX-Ready Data Center program is launching with more than a half-dozen datacenter operators in the U.S. and Canada, including Aligned Energy, Colovore, Core Scientific, CyrusOne, Digital Realty, EdgeConneX, Flexential, ScaleMatrix, and Switch. Nvidia says it’s evaluating additional program partners for North America, and that it plans to extend the program globally later this year.

A powerful platform

DGX recently set six new records for how fast an AI model can be trained using a predetermined group of datasets. Across image classification, object instance segmentation, object detection, non-recurrent translation, recurrent translation, and recommendation systems under MLPerf benchmark guidelines, it outperformed competing systems by up to 4.7 times.

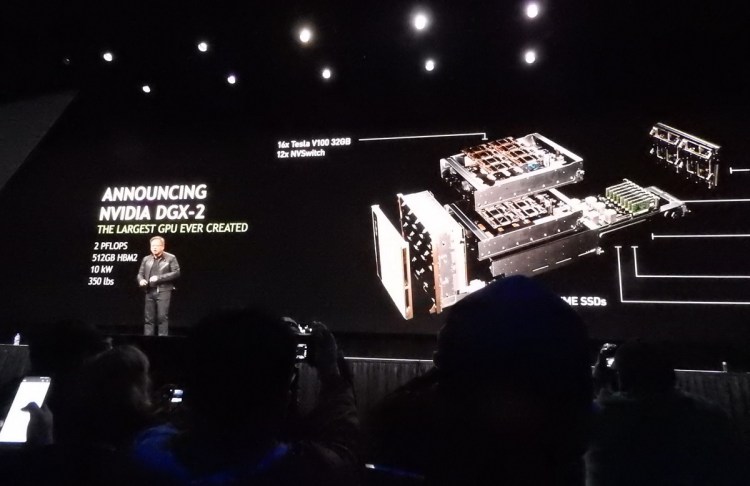

Performance benefited from platform improvements announced in March 2018 at Nvidia’s GPU Technology Conference in San Jose, California. There, Nvidia said it had doubled the memory of Tesla V100, its datacenter GPU, to 32GB. It also revealed NVSwitch, a switch fabric that builds on its NVLink high-speed interconnect technology and enables 16 Tesla V100 GPUs to communicate with each other simultaneously at a speed of 2.4 terabytes per second.

It’s also where DGX-2 made its debut. The server’s 16 Tesla V100 GPUs are capable of delivering two petaflops of computational power, Nvidia claims, which it says matches the output of 300 CPU servers occupying 15 racks of datacenter space. Units sell for about $399,000 apiece.

Uptake has been brisk in the intervening months. Cray, Dell EMC, Hewlett Packard Enterprise, IBM, Lenovo, Supermicro, and Tyan began rolling out Tesla V100 32GB systems in Q2 2018. Oracle Cloud Infrastructure started offering Tesla V100 32GB in the cloud in the second half of the year. And in December, IBM teamed up with Nvidia to launch IBM SpectrumAI with Nvidia DGX, a converged system that marries IBM’s Spectrum Scale software-defined file platform with Nvidia’s DGX-1 servers.

Analysts at MarketsandMarkets forecast that the datacenter accelerator market will be worth $21.19 billion by 2023.