More U.S. soldiers died during the mid-2000s troop surge in Iraq than in any other combat mission since Vietnam. As soldiers came home in flag-draped caskets, a growing number of veterans who made it back alive committed suicide. According to the VA National Suicide Data Report, veteran suicides surpassed 6,000 a year at one point.

Veterans committing suicide after returning home from Iraq — and Afghanistan — wasn’t just a hard-to-explain tragedy, but a public health crisis.

Today Rohit Prasad is best known as chief scientist for Amazon’s Alexa AI unit, a team experimenting with AI to detect emotions like happiness, sadness, and anger. Back in 2008, to help protect veterans with PTSD, Prasad led a Defense Advanced Research Projects Agency (DARPA) effort to use artificial intelligence to understand veterans’ mental health from the sound of their voice.

Among other things, the program focused on detecting distress in the voices of veterans suffering from PTSD, depression, or suicide risk by looking at informal communications or other patterns.

“We were looking at speech, language, brain signals, and sensors to make sure that our soldiers when they’re coming back home — we can pick [up] these signals much earlier to save them,” Prasad said during an interview last month at Amazon’s re:Mars conference. “Because it was really hard. This is an area where there’s stigma, there’s a lot of [reliance on] self-reporting, which is noisy, and no easy way to diagnose that you’re going through these episodes.”

For the project, DARPA teamed up with companies like Cogito, which worked with the U.S. Department of Veterans Affairs to alert doctors when the sound of a veteran’s voice suggested they were in trouble.

“This was a team of psychologists who were super passionate [about] this problem, and this was a DARPA effort, which means we had funds,” he said, adding that the mission was to save as many lives as possible. “It’s one of the programs that I didn’t like leaving before I came here.”

Amazon’s Alexa AI team is currently experimenting with ways to detect emotions like happiness and sadness, work it described in research published earlier this year. Amazon is also working on a wearable device for emotion detection that people can use to understand the feelings of those around them, according to Bloomberg.

Alexa’s emotional intelligence project has been in the works for years now. Prasad told VentureBeat in 2017 that Amazon was beginning to explore emotion recognition AI, but only to sense frustration in users’ voices. Prasad was equally tight-lipped at the recent re:Mars conference.

“It’s too early to talk about how it will get applied. Frustration is where we’ve explored offline on how to use it for data selection, but [I have] nothing to share at this point in terms of how it will be applied,” he said.

How it works

Amazon’s emotion detection ambitions are visible in two papers it published in recent months.

Both projects trained models using a University of Southern California (USC) data set of approximately 12 hours of dialogue read by actors and actresses. The data set of 10,000 sentences was then annotated to reflect emotion.

“Multimodal and Multi-view Models for Emotion Recognition” detects what Amazon Alexa senior applied science manager Chao Wang calls the big six: anger, disgust, fear, happiness, sadness, and surprise.

“Emotion can be described directly by numerical values along three dimensions: valence, which is talking about the positivity [or negativity] of the emotion, activation, which is the energy of the emotion, and then the dominance, which is the controlling impact of the emotion,” Wang said.

Above: A graph of how valence, dominance, and activation combine to predict human emotion
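
To make the three-dimensional description concrete, here is a minimal sketch that treats an emotion as a point along the valence, activation, and dominance axes and labels it by its nearest reference point. The coordinates in `PROTOTYPES` are hypothetical placeholders chosen for illustration, not values from Amazon’s papers, and the nearest-point rule is an assumption rather than the researchers’ method.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class EmotionPoint:
    valence: float     # negativity (-1) to positivity (+1)
    activation: float  # low energy (-1) to high energy (+1)
    dominance: float   # low control (-1) to high control (+1)

    def as_tuple(self):
        return (self.valence, self.activation, self.dominance)


# Hypothetical reference points, for illustration only.
PROTOTYPES = {
    "anger": EmotionPoint(-0.6, 0.8, 0.6),
    "sadness": EmotionPoint(-0.7, -0.6, -0.5),
    "happiness": EmotionPoint(0.8, 0.5, 0.4),
    "neutral": EmotionPoint(0.0, 0.0, 0.0),
}


def nearest_emotion(point):
    """Return the label whose reference point is closest in Euclidean distance."""
    return min(
        PROTOTYPES,
        key=lambda name: math.dist(point.as_tuple(), PROTOTYPES[name].as_tuple()),
    )
```

Under these assumed coordinates, a negative, high-energy, high-control point such as `EmotionPoint(-0.5, 0.9, 0.5)` lands closest to the anger reference.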

The work’s multimodal approach analyzes both acoustic and lexical signals from audio to detect emotions. The acoustic signal covers the sonic and voice properties of speech, while the lexical signal covers the sequence of words, explained Amazon Alexa senior applied scientist Viktor Rozgic.

“The acoustic features are describing more or less the style [of] how you said something, and the lexical features are describing the content. As seen in the examples, they are both important for emotional connection. So after the features are extracted, they are fed into a model — in our case, this will be different neural network architectures, and then we finally make a prediction, in this case anger, sadness, and a neutral emotional state,” he said.
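
The pipeline Rozgic describes, extracting style and content features and then feeding them to a model, can be sketched roughly as follows. This is a toy illustration under stated assumptions: the feature choices (mean energy, zero-crossing rate, word counts over a three-word `VOCAB`) are hypothetical, and a single linear softmax layer stands in for the neural network architectures the researchers actually used.

```python
import math

VOCAB = ["great", "terrible", "fine"]    # hypothetical toy vocabulary
LABELS = ["anger", "sadness", "neutral"]


def acoustic_features(samples):
    """Toy 'style' features: mean energy and zero-crossing rate of a waveform."""
    energy = sum(s * s for s in samples) / len(samples)
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    return [energy, crossings / (len(samples) - 1)]


def lexical_features(transcript):
    """Toy 'content' features: word counts over VOCAB."""
    words = transcript.lower().split()
    return [float(words.count(w)) for w in VOCAB]


def fuse(samples, transcript):
    """Concatenate acoustic and lexical features into one input vector."""
    return acoustic_features(samples) + lexical_features(transcript)


def predict(features, weights, biases):
    """Linear layer plus softmax; returns a probability for each label."""
    logits = [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(LABELS, exps)}
```

Concatenating the two feature vectors before classification is only one way to combine modalities; it is used here because it is the simplest to show.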

“Multimodal and Multi-view Models for Emotion Recognition” was accepted for publication by the 2019 Association for Computational Linguistics (ACL).

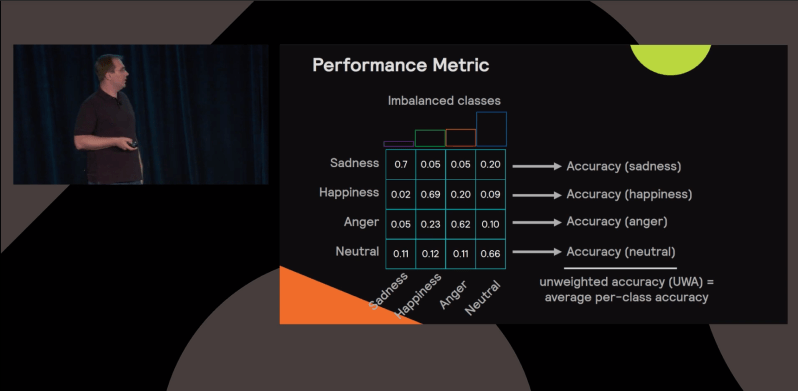

Above: Model performance metrics for “Multimodal and Multi-view Models for Emotion Recognition”

The other paper Amazon recently shared — “Improving Emotion Classification through Variational Inference of Latent Variables” — explains an approach that achieves slight improvements in predicting valence, one of the dimensions used to describe emotion.

To extract emotion from audio, voice recordings of human interaction are mapped to a sequence of spectral vectors and fed to a recurrent neural network, which serves as a classifier to predict anger, happiness, sadness, and neutral states.
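
As a rough sketch of those two steps, the code below slices a waveform into overlapping frames, turns each frame into a vector of spectral magnitudes, and runs the sequence through a simple recurrent update. Everything here is an assumption made for illustration: the naive discrete Fourier transform, the frame sizes, and the Elman-style recurrence with caller-supplied weights are stand-ins, not the features or architecture from the paper. A final softmax layer over the returned state would produce the four class scores.

```python
import cmath
import math


def spectral_vectors(samples, frame_size=8, hop=4):
    """Map a waveform to a sequence of DFT magnitude vectors, one per frame."""
    vectors = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = samples[start:start + frame_size]
        magnitudes = []
        for k in range(frame_size // 2 + 1):
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * n / frame_size)
                for n, x in enumerate(frame)
            )
            magnitudes.append(abs(coeff))
        vectors.append(magnitudes)
    return vectors


def rnn_final_state(vectors, w_in, w_rec):
    """Run a tanh recurrent update over the sequence; return the last hidden state."""
    hidden = [0.0] * len(w_rec)
    for vec in vectors:
        hidden = [
            math.tanh(
                sum(a * x for a, x in zip(w_in[i], vec))
                + sum(b * h for b, h in zip(w_rec[i], hidden))
            )
            for i in range(len(hidden))
        ]
    return hidden
```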

“We’re feeding the acoustic features to the encoder, and the encoder is transforming these features to a lower dimensional representation from which [the] decoder reconstructs the original audio features and also predicts the emotional state,” Rozgic said. “In this case, it’s valence with three levels: negative, neutral, and positive, and the role of adversarial learning is to regularize the learning process in a specific way and make the representation we learn better.”
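
The encoder and decoder arrangement Rozgic describes can be sketched as a single training objective: compress the features, reconstruct them, and predict valence from the compressed representation. The version below is a simplified assumption, using plain linear maps and a weighted sum of mean squared reconstruction error and cross-entropy. The weighting `alpha` and all weight matrices are hypothetical, and the adversarial regularization term he mentions is left out.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def softmax(logits):
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def joint_loss(features, valence_label, enc_w, dec_w, cls_w, alpha=1.0):
    """Reconstruction error plus alpha times valence classification error.

    valence_label indexes three levels: 0 negative, 1 neutral, 2 positive.
    """
    latent = matvec(enc_w, features)          # lower-dimensional representation
    reconstruction = matvec(dec_w, latent)    # decoder rebuilds the input features
    probs = softmax(matvec(cls_w, latent))    # valence prediction from the latent
    mse = sum((r - x) ** 2 for r, x in zip(reconstruction, features)) / len(features)
    cross_entropy = -math.log(probs[valence_label])
    return mse + alpha * cross_entropy
```

Sharing one latent representation between the two terms is the point of the design: the reconstruction term pushes the encoder to keep information about the audio, while the classification term pushes it to keep what matters for valence.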

“Improving Emotion Classification through Variational Inference of Latent Variables” was presented this spring at the 2019 International Conference on Acoustics, Speech, and Signal Processing.

Earlier research by Rozgic, Prasad, and others, published at Interspeech 2012, the International Speech Communication Association’s conference, also relies on acoustic and lexical features.

The evolution of emotion and machine intelligence

In addition to offering details about Amazon’s emotion detection ambitions, a session at re:Mars explored the history of emotion recognition and emotion representation theory, what Wang called the foundation for emotion recognition research led by schools like USC’s Signal Analysis and Interpretation Lab and MIT’s Media Lab. Advances in machine learning, signal processing, and classifiers like support vector machines have also moved the work forward.

Applications of the tech range from gauging reactions to video game design and marketing material like commercials, to powering car safety systems that look for road rage or fatigue, to helping students who use computer-aided learning, Wang said. The tech can also be used to help people better understand others’ emotions, as in the wearable project Amazon is reportedly developing.

Though advances have been made, Wang said emotion detection is a work in progress.

“There’s a lot of ambiguity in this space — the data and the interpretation — and this makes machine learning algorithms that achieve high accuracy really challenging,” Wang said.