Arm may be a bit late to the whole machine learning and artificial intelligence bandwagon, at least with specialized designs for modern chips. But the designer of chip intellectual property has everybody beat in terms of volumes of AI and machine-learning chips deployed in the widest array of devices.

Arm’s customers, which include rivals Intel and Nvidia, are busy deploying AI technology everywhere. The company is also creating specific machine-learning instructions and other technology to make sure AI gets built into just about everything electronic, not just the high-end devices going into servers.

On the server level, customers such as Amazon are bringing Arm-based machine learning chips into datacenters. I talked with Steve Roddy, vice president of the machine learning group at Arm, at the company’s recent TechCon event in San Jose, California.

Here’s an edited transcript of our interview.

Above: Steve Roddy is the vice president of the machine learning group at Arm.

VentureBeat: What is your focus on machine learning?

Steve Roddy: We have had a machine learning processor in the market for a year or so. We aimed at the premium consumer segment, which was the obvious first choice. What is Arm famous for? Cell phone processors. That’s where the notion of a dedicated NPU (Neural Processing Unit) first appeared, in high-end cell phones. Now you have Apple, Samsung, MediaTek, [and] Huawei all designing their own, Qualcomm, and so on. It’s commonplace in a $1,000 phone.

What we’re introducing is a series of processors to serve not only that market, but also mainstream and lower-end markets. What we originally envisioned — we entered the market to serve people building VR glasses, smartphones, places where you care more about performance than cost balancing and so on. History would suggest that the feature set shows up in the high-end cell phone, takes a couple years, and then moves down to the mainstream-ish $400-500 phone, and then a couple years later that winds up in the cheaper phone.

I think what’s most interesting about how fast the whole NPU machine learning thing is moving is that that is happening much faster, but for different reasons than — it used to be, okay, the 8 megapixel sensor starts here, and then when it’s cheap enough it goes here, and then when it’s even cheaper it goes there. It’s not just that the component cost goes down and integrates in and it’s replaced by something else. It’s that machine learning algorithms can be used to make different or smarter decisions about how systems are integrated and put together to add value in a different way, or subtract cost in a different way.

Above: Simon Segars at Arm TechCon 2019

VentureBeat: One talk today described how a neural network will figure out how to do something, and then you cull out the stuff that isn’t really necessary. You wind up with a much more efficient or smaller thing that could be embedded into microcontrollers.

Roddy: That’s a whole burgeoning field. Taking a step back, machine learning has really two components. There’s the creation of the algorithm, the learning, or training, as it’s called, which happens almost exclusively in the cloud. That, to me — I like to jokingly say that most practitioners would agree that it’s the apocryphal million monkeys with a million typewriters. Poof, one of them writes a Shakespeare sonnet. That’s kind of what the training process is like.

In fact, Google is explicit about it. Google has a thing now called AutoML. Let’s say you have an algorithm you picked from some open source repository, and it’s pretty good at the task you want. It’s some image recognition thing you’ve tweaked a little bit. You can load that into Google’s cloud service. They do this because it runs the meter, obviously, on compute services. But basically it’s a question of how much you want to pay.

They will randomly try pseudo-randomly created different variations of the neural net. More filters here, more layers there, reverse things, do them out of order, and just rerun the training set all over again. Oh, this one’s now .1% more or less accurate. It’s just how much you want to spend. $1,000 or $10,000 in compute time? A million monkeys, a million typewriters. Look, I discovered one that’s 2% more accurate at face recognition, voice recognition, whatever it happens to be.

Set all that aside. That’s the development of the neural net. The deployment is known as inference. Now I want to run one particular pass of that inference on the object I want to recognize — what face, what object. I want to run it on a car and recognize Grandma in the crosswalk, or what-have-you. Arm is obviously focused on those volume silicon markets where it’s deployed, in the edges or the endpoints.

You stick a bunch of sensors in the walls of the convention center, for example, and the lights go out and it’s filled with smoke because it’s on fire. You could have sensors that recognize there’s fire, activate, and look for bodies on the floor. They can send out distress signals to the fire department. “Here’s where the people are.” “Don’t go to this room, there’s nobody there.” “Go to this room.” It’s a pretty cool thing. But you want it to be super efficient. You don’t want to rewire the entire convention center. You’d like to just stick up this battery-operated thing and expect it to run for three or six months. Every six months you go in and change the safety system with that sensor.

That’s a question of taking the abstract model that a mathematician has created and reducing it to fit on a constrained device. That’s one of the biggest challenges still ahead. We have our processors. They’re great at implementing highly efficient versions of neural nets in end devices. The process of getting from the mathematician, who is conceiving new types of neural nets and understands the math inside it, and connecting it down to the lower-level programmer, who’s an embedded systems programmer — there’s a huge skills gap there.

If you’re a 24-year-old math wizard and you just got your undergraduate math degree and your graduate degree in data science and you come out of Stanford, and the big internet companies are having fistfights outside your dorm to offer you a job — you’re brilliant at neural nets and the mathematics behind it, but you don’t have any skills at embedded software programming, by definition. The guy who’s an embedded software engineer and assembling CPUs and GPUs and ARM NPUs, putting operating systems on chips and doing drivers and low-level firmware, he’s told, “Hey, here’s this code with a neural net in it. Make sure it runs on this constrained little device that has 2 megs of memory and a 200MHz CPU. Make it work.”

Well, wait a minute. There’s a gap there. The embedded guy says, “I don’t know what this neural net does. It requires 10 times as much compute as I have. What’s the 90% that I can throw away? How do I know?” The guy at the high level, the mathematician, doesn’t know a thing about constrained devices. He deals with the math, the model of the brain. He doesn’t know embedded programming. Most companies don’t have both people in the same company. Very few highly integrated companies put everyone in a room together to have a conversation.

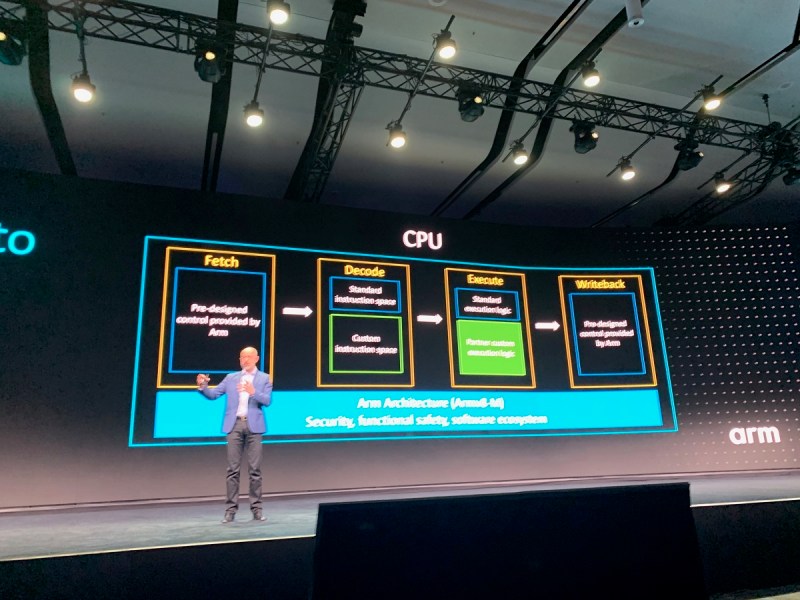

Above: Simon Segars talks about custom instructions at Arm TechCon 2019.

Quite often — say you’re the mathematician and I’m the embedded software guy. We have to have an NDA (non-disclosure agreement) to even have a conversation. You’re willing to license the model output, but you’re not giving up your source data set, your training data set, because that’s your gold. That’s the value. You give me a trained model that recognizes cats or people or Grandma in the crosswalk, fine, but you’re not going to let out the details. You’re not going to tell me what goes on. And here I am trying to explain how this doesn’t fit in my constrained system. What can you do for me?

You have this gulf. You’re not an embedded programmer. I’m not a mathematician. What do we do? That’s an area where we’re investing and others are investing. That’s going to be one of the areas of magic over time, in the future. That helps close the loop between it. It’s not a one-way thing, where you license me an algorithm and I keep hacking it down until I get it to fit. You gave it to me 99% accurate, but I can only implement it 82% accurate, because I had to take out so much of the compute to make it fit. That’s better than nothing, but I sure wish I could go back and retrain and have an endless loop back and forth where we could collaborate in a better way. Think of it as collaborating between constrained and ideal.

VentureBeat: I wonder if the part here that sounds familiar is the same or very different, but Dipti Vachani gave that talk about the automotive consortium and how everyone is going to collaborate on the self-driving cars, taking things from prototypes to production. She was saying we can’t put supercomputers in these cars. We have to get them down into much smaller, affordable devices that can go into production units. Is some of what you’re talking about in any way similar? The supercomputers have figured out these algorithms, and now those need to be reduced down to a practical level.

Roddy: It’s the same problem, right? When these neural nets are created by the mathematicians, they’re typically using floating point arithmetic. They’re doing it in an abstract with infinite precision and essentially infinite compute power. If you want more compute power, you fire up a few more blades, fire up a whole datacenter. What do you care? If you’re willing to write the check to Amazon or Google, you can do it.
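To make the floating-point point concrete, here is a minimal sketch of one common way to shrink a model's numbers: symmetric 8-bit quantization. It is not Arm's tooling or any specific framework's API. The function names and the sample weights are hypothetical, chosen only for illustration.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    # The scale converts between float units and integer steps.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the 8-bit integers."""
    return [q * scale for q in quantized]


# Hypothetical trained weights (illustrative values only).
weights = [0.42, -1.27, 0.003, 0.9, -0.51]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is at most half of one integer step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each weight now fits in one byte instead of four, at the cost of a small rounding error that is bounded by half the scale factor.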

VentureBeat: But you can’t put the datacenter in a car.

Above: Self-driving cars need a standards body to get to the finish line. Minneapolis-based VSI Labs’ research vehicle at Arm TechCon.

Roddy: Right. Once I have the shape of the algorithm, it becomes a question — you hear terms like quantization, pruning, clustering. How do you reduce the complexity in a way that prunes out the parts that actually don’t matter? There’s lots of neural connections in your brain — this is trying to mimic a brain — but half of them are garbage. Half of them do something real. There are strong connections that transmit the information and weak ones that can be pruned away. You’d still recognize your partner or your spouse if you lost half your brain cells. The same thing for trained neural nets. They have lots of connections between the imagined neurons. Most of them you could get rid of, and you’d still get pretty good accuracy.
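The pruning idea described above can be sketched in a few lines. This is a simplified illustration of magnitude pruning, assuming that "weak" connections are the ones with the smallest absolute weight. The function names, weights, and inputs are hypothetical and do not come from any particular toolchain.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights, smallest magnitudes first."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from weakest connection to strongest.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def dot(a, b):
    """Weighted sum of inputs, i.e. the output of one neuron before activation."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical neuron: a few strong connections and several weak ones.
weights = [0.9, -0.02, 0.01, -0.8, 0.03, 0.7, -0.01, 0.02]
inputs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]

pruned = prune_by_magnitude(weights, sparsity=0.5)
dense_output = dot(weights, inputs)
sparse_output = dot(pruned, inputs)
print(pruned)
print(dense_output, sparse_output)
```

Half of the multiplications disappear, and in this toy case the neuron's output shifts only slightly. How much accuracy a real network loses depends on the model and the data, which is why pruned models are tested and often retrained.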

VentureBeat: But you’d worry that one thing you got rid of was the thing that prevents a car wreck in some situation.

Roddy: It’s a test case. If I get rid of half the computation, what happens? This is the so-called retraining. Retrain, or more importantly do the training with the target in mind. Train not assuming infinite capacity of a datacenter or a supercomputer, but train with the idea that I’ve only got limited compute.

Automotive is a great example. Let’s say it’s 10 years from now and you’re the lab director of pedestrian safety systems for XYZ German component company. Your algorithms are running in the latest and greatest Lexus and Mercedes cars. They each have $5,000 worth of compute hardware. Your algorithms are also running in a nine-year-old Chinese sedan that happens to have a first generation of your system.

One of your scientists over here comes up with the best new algorithm. It’s 5% more accurate. Yay! In the Mercedes it’s 5% more accurate, anyway, but you have an obligation — in fact you probably have a contract that says you’ll do quarterly updates — to the other guy. Making it worse, now we’ve got 17 platforms from 10 car companies. How do you take this new mathematical invention and put it in all those places? There has to be some structured automation around that. That’s part of what the automotive consortium is trying to do in a contained field.

The technology we’re developing is around “How do we create those bridges?” How do you put a model, for example, into the training sets that the developer uses — the TensorFlows, the Caffes — that allows them to say, “Well, instead of assuming I’m running in the cloud for inference, what if I was running on this $2 microcontroller in a smart doorbell?” Train for that, instead of train for the abstract. There’s a lot of infrastructure that could be put in place.

For good or for bad, it has to cut across industry. You have to build bridges between data scientists at Facebook, chip guys at XYZ Semiconductor, box builders, and the software algorithm people that are trying to integrate it all together.

VentureBeat: There could be competitors like Nvidia in the alliance. How do you keep this on a level above the competition?

Roddy: What Nvidia does — to me, they’re a customer. They sell chips.

Above: VSI Labs’ full stack of AV technology in the trunk of their research vehicle.

VentureBeat: Intel theoretically too, but —

Roddy: Intel actually buys a lot from Arm. Architecturally, at the level of — Nvidia is a great example. They have their own NPU. They call it the NVDLA. They recognize that for training in the cloud, yeah, it’s GPUs. That’s their bulwark. But when they talk about edge devices, they’ve even said that not everyone can have a 50-watt GPU in their pocket. They have their own version of what we talked about here, NPUs that are fixed-point implementations of integer arithmetic in different sizes. Things from 4 square millimeters down to a square millimeter of silicon. This thing runs at less than a watt. That’s way better than a high-powered GPU.

If you have a relatively modern phone in your pocket, you have an NPU. If you have bought an $800 phone in the last couple of years, it has an NPU. Apple has one. Samsung has one. Huawei has a couple generations. They’ve all done their own. We would predict, over time, that the majority of those companies will not continue to develop their own hardware. A neural net is basically just a giant DSP filter. You have a giant set of coefficients in a big image, for example. I might have 16 million coefficients in my image classifier, and I have a 4-megapixel image. That’s just a giant multiplication. It’s multiply accumulate. That’s why we talk about the multiply accumulate performance of our CPUs. That’s why we build these NPUs that do nothing but multiply accumulate. It’s a giant filter.
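The "giant filter" description can be illustrated with a tiny multiply-accumulate (MAC) loop. This is a sketch only: the coefficients and signal are hypothetical small integers standing in for 8-bit values, and plain Python stands in for what an NPU does in parallel hardware.

```python
def mac_dot(coeffs, samples):
    """Multiply-accumulate: one multiply and one add per coefficient."""
    # Hardware typically keeps a wider accumulator (for example 32 bits)
    # for 8-bit operands; Python integers simply never overflow.
    acc = 0
    for c, s in zip(coeffs, samples):
        acc += c * s
    return acc


def sliding_filter(coeffs, signal):
    """Slide the coefficient window over the signal, one MAC pass per output."""
    taps = len(coeffs)
    return [
        mac_dot(coeffs, signal[i:i + taps])
        for i in range(len(signal) - taps + 1)
    ]


# Hypothetical integer coefficients and input samples.
coeffs = [1, 2, 1]
signal = [3, 0, -2, 5, 4, -1]

outputs = sliding_filter(coeffs, signal)
mac_count = len(outputs) * len(coeffs)
print(outputs, mac_count)
```

Even this toy filter needs 12 MAC operations for four outputs. Scale the coefficient count into the millions and the input into megapixels, and the case for hardware that does nothing but multiply-accumulate becomes clear.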

The reality is, there’s only so much you can do to innovate around 8 x 8 multiply. The basic building block is what it is. It’s system design. We have a lot of stuff in our design around minimizing data movement. It’s being smart about data movement at the block level, at the system level. I would not expect that, 10 years from now, every cell phone vendor and every automotive vendor has their own dedicated NPU. It doesn’t make sense. Software and algorithms, absolutely. Architecture, yeah. But the building block engines will probably become licensed, just like CPUs and GPUs have.

No guarantee that we’re the ones that win it. We’d like to think so. Someone will be. There will probably be a couple of really good vendors that license NPUs, and most of the proprietary things will go away. We hope we’re one of the winners. We like to think we have the wherewithal to invest to win, even if our first ones aren’t market winners. Indications are it’s actually pretty good. We’d expect that to happen over the course of five or 10 years. At the system level, there are so many system design choices and system software choices. That’s going to be the key differentiator.

Above: Dipti Vachani of Arm announces Autonomous Vehicle Computing Consortium.

VentureBeat: On the level where you would compete, then, does it feel like Arm is playing some catch-up, or would you dispute that?

Roddy: It depends on what you’re looking at and what your impression is. If you literally sit down and say, “Snapshot, right now: How much AI is running in the world at this very moment and where is it running?” Arm is the hands-down winner. The vast majority of AI algorithms don’t actually require a dedicated NPU. Machine learning goes all the way down to things like — you have predictive text on your phone. Your phone is probably enabled with “Okay Google” or “Hello Siri.” That’s a machine learning thing. It’s probably not running on a GPU or an NPU. It’s probably just running on an M-class core.

If you look at cell phones in the market, how many smartphones are out there? In service, maybe 4 billion to 5 billion? About 15-20% of them have NPUs. That’s the last three generations of Apple phones, the last two to three generations of Samsung phones. Call it half a billion. Generously, maybe a billion. But everyone has Facebook. Everyone has Google predictive text. Everyone has a voice assistant. That’s a neural net, and it runs on the CPU with all those other systems. There’s no other choice.
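A quick check of the figures quoted above, using only the numbers in the interview. The helper name is hypothetical.

```python
def share_of(total, percent):
    """Return the given whole-number percentage of a total, in integer math."""
    return total * percent // 100


# Low end: 15% of 4 billion phones. High end: 20% of 5 billion phones.
low_estimate = share_of(4_000_000_000, 15)
high_estimate = share_of(5_000_000_000, 20)
print(low_estimate, high_estimate)
```

That gives roughly 600 million to 1 billion phones with NPUs, consistent with the "half a billion, generously a billion" estimate. The remaining 3 billion or more run their neural nets on the CPU.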

If you take a quick snap and look at where most of the inference is running, it’s on a CPU and most of it is ARM. Even in the cloud, when you talk about where inference is running — not training, but the deployment — the vast majority of those are running on CPUs. Mostly Intel CPUs, obviously, but if you’re using Amazon, there are ARM servers.

What’s the classic one in finance? I want to have satellite photos of shopping centers analyzed so I can see traffic patterns at Home Depot and know whether I should short or go long on Home Depot stock. People actually do that. You need a bunch of satellite imagery to train on. You also need financial reports. You have the pictures of all the traffic around all the Home Depots or all the JCPenney stores and you correlate with the quarterly results over the last 15 years and you build a neural net. Now we think we have a model correlating traffic patterns to financial results. Let’s take a look at live shots from the satellite over the last three days at all the Home Depots in North America and come up with a prediction for what their earnings will be.

That actual prediction, that inference, runs on a CPU. It might take weeks of training on a GPU to build the model, but I have 1,000 photos. Each inference takes half a second. You don’t fire up a bunch of GPUs for that. You run it and 20 minutes later you’re done. You’ve done your prediction. The reality is we’re the leading implementer of neural net stuff. But when it comes to perception about the glamour NPU, it’s true. We don’t have one in the marketplace today. By that token, we’re behind.
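The batch-inference arithmetic above works out as follows. This is a back-of-the-envelope sketch that assumes the photos are processed one at a time on a single CPU, which the interview does not specify.

```python
def batch_inference_seconds(num_items, seconds_per_item):
    """Total time to run inference on every item sequentially."""
    return num_items * seconds_per_item


# Figures quoted in the interview: 1,000 photos at half a second each.
total_seconds = batch_inference_seconds(1_000, 0.5)
total_minutes = total_seconds / 60
print(total_seconds, total_minutes)
```

The raw compute comes to 500 seconds, a little over eight minutes, so the "20 minutes" figure leaves room for loading images and other overhead. Either way, it is a job for a CPU, not a rack of GPUs.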

But admittedly, we’re just introducing our family of NPUs for design now. We have three NPUs. We’ve licensed them all. They’re in the hands of our customers. They’re doing design. You’re not going to see silicon this year. Maybe late next year. I don’t have anyone lined up to make an announcement. It’s going to be another decade before the whole field settles down. Huawei has their own. Apple has their own. Samsung has their own. Qualcomm has their own. Nvidia has their own. Everyone has their own. Do they all really need to spend 100 people a year investing in hardware to do 8-bit multipliers? The answer is, probably not.

VentureBeat: I remember that Apple had — they described their latest chip at their event. They said the machine learning bit was 6 times more powerful than the previous one. The investment into that part of the chip made sense. That’s the part that gives you a lot of bang for your buck. When you’re looking at these larger system-on-chip things in the phones and other beefy devices, are you expecting that part to get magnified, blown up, doubled and tripled down within that larger silicon pie?

Roddy: Some things yes, some things no. We’re seeing a proliferation of machine learning functionality in a couple of different ways. One of the unanticipated ways is how it’s pushed more rapidly into lower-cost devices than history might have predicted. Screen sizes and camera sizes used to trickle down at a regular rate, generation after generation, from high-end to mid-range to low-end phones. We’ve seen a much more rapid proliferation, because you can do interesting things with an NPU that in some ways allow you to cut costs elsewhere in the system, or enable functionality that’s different than the rest of the system.

The great example in low-cost phones — take the notion of face unlock. Face unlock is typically a low-power camera, low resolution, has to discern my face from your face. That’s about all it needs to do. If you’re a teenager, your buddy can’t open your phone and start sending funny texts. That typically runs just in software on a CPU, typically an ARM CPU. That’s good enough to unlock a phone, whether it’s a $1,000 phone or a $100 phone.

But now you want to turn that $100 phone into a proxy banking facility for the unbanked in the developing world. You don’t want just a bad camera doing a quick selfie to determine who’s making a financial transaction. You need much more accurate 3D mapping of the face. You probably want a little iris scan along with it. If you can do that with a 20-, 30-, 40-cent addition to the applications processor by having a small dedicated NPU that only gets turned on to do the actual detailed facial analysis, that’s about the size of what people want from the smallest in our family of NPUs.

Suddenly, for the $100 cell phone it makes sense to put in a dedicated NPU, because it enables that phone to become a secure banking device. It wasn’t about making the selfies look better. The guy buying a $100 phone isn’t willing to pay to make selfies look better. But the banking companies are willing to subsidize that phone to get that stream of transactions, for sure, if they pick up a penny for every 80-cent microtransaction that occurs in Bangladesh or wherever. We’re seeing functionality that originally started as a vanity thing — make the Snapchat filter pretty, make my selfie look 20 years younger — now you can use it for different things.

VentureBeat: The machine learning percentage of the silicon budget, what would you say that is?

Roddy: It depends on the application. There are some product classes where they’re willing to put — what’s the state of the art these days? People putting 10 or 12 teraops into a cell phone. One thing we do is look at various types of functionality, and what’s the compute workload? How much of it is the neural net piece and how much of it is other forms of computation?

Something like speech processing, nah, forget it. It can run on M-class CPUs. You don’t need an NPU to be able to do “OK Google” and “Hello Siri.” Go to the other end of the spectrum and look at something like video green screen, which would be me with my selfie saying, “Look at me! I’m on the beach!” It cuts me out and puts me in a beach scene, even though I’m really in a dull conference room. “Hi, honey, I’m still at the office,” even though I’m at the ball game. That’s a tremendous amount of horsepower.

But if you spend $1,200 on the latest phone and you want the coolest videos because you’re an Instagram influencer, sure. If it costs 5 bucks extra to pack a 20 teraop NPU in the phone, why not? Because it’s cool. It’s being driven by both ends. There’s some neat stuff you can do.