After years of hype and speculation, OpenAI has officially launched a new lineup of large language models (LLMs), all different-sized variants of GPT-5, the long-awaited successor to its GPT-4 model from March 2023, nearly 2.5 years ago.

The company is rolling out four distinct versions of the model — GPT-5, GPT-5 Mini, GPT-5 Nano and GPT-5 Pro — to meet varying needs for speed, cost, and computational depth.

- GPT-5 is the full-capability reasoning model, used in both ChatGPT and OpenAI’s application programming interface (API) for high-quality general tasks.

- GPT-5 Pro is an enhanced version with extended reasoning and parallel compute at test time, designed for use in complex enterprise and research environments. It provides more detailed and reliable answers, especially in ambiguous or multi-step queries.

- GPT-5 Mini is a smaller, faster version of the main model, optimized for lower latency and resource usage. It is used as a fallback when usage limits are reached or when minimal reasoning suffices.

- GPT-5 Nano is the most lightweight variant, built for speed and efficiency in high-volume or cost-sensitive applications. It retains reasoning capability, but at a smaller scale, making it ideal for mobile, embedded, or latency-constrained deployments.

GPT-5 will soon power ChatGPT exclusively, replacing all other models for its 700 million weekly users, though ChatGPT Pro subscribers ($200 per month) can still select older models for the next 60 days.

As rumors and reports had suggested, OpenAI has replaced the previous system, in which users manually switched the model powering ChatGPT, with an automatic router. For harder queries, the router engages a special “GPT-5 thinking” mode with “deeper reasoning” that takes longer to respond; for simpler queries, it uses the regular GPT-5 or mini models.
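
OpenAI has not published how its router makes these decisions. Purely as an illustration, the toy Python sketch below routes on two hypothetical signals, explicit reasoning cues and prompt length. The route labels `gpt-5-thinking`, `gpt-5` and `gpt-5-mini` are descriptive names used here, not confirmed internal identifiers, and the keyword list and thresholds are arbitrary assumptions.

```python
from dataclasses import dataclass

# Hypothetical cues suggesting a query needs deeper reasoning. OpenAI has not
# published its routing logic; these keywords are illustrative only.
HARD_CUES = ("think hard", "step by step", "prove", "debug", "analyze")


@dataclass
class Route:
    model: str   # descriptive label, not a confirmed API model ID
    reason: str


def route_query(query: str, long_prompt_chars: int = 400,
                short_prompt_chars: int = 40) -> Route:
    """Toy router: 'thinking' for hard queries, mini for trivial ones."""
    text = query.lower()
    if any(cue in text for cue in HARD_CUES):
        return Route("gpt-5-thinking", "explicit reasoning cue")
    if len(text) > long_prompt_chars:
        return Route("gpt-5-thinking", "long, likely multi-step prompt")
    if len(text) < short_prompt_chars:
        return Route("gpt-5-mini", "short, simple query")
    return Route("gpt-5", "default")


if __name__ == "__main__":
    for q in ("What's the capital of France?",
              "Think hard: can these five meetings fit into one afternoon?",
              "Summarize the main points of this paragraph in two sentences."):
        r = route_query(q)
        print(f"{r.model:15} <- {q} ({r.reason})")
```

A real router would presumably rely on learned signals rather than keyword matching; the sketch only shows the shape of the decision: classify the query, then pick the cheapest model expected to handle it.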

In the API, the three reasoning-focused models — GPT-5, GPT-5 mini, and GPT-5 nano — are available as gpt-5, gpt-5-mini, and gpt-5-nano, respectively. GPT-5 Pro is not currently accessible via API, as it is used only to power ChatGPT for Pro-tier subscribers.

GPT-5’s release comes just days after OpenAI launched gpt-oss, a set of free, open source LLMs that individuals and developers can download, customize and run offline on consumer hardware such as PC and Mac desktops and laptops.

The biggest takeaway, though, is likely not what GPT-5 is, but what it isn’t: AGI, artificial general intelligence, OpenAI’s stated goal of an autonomous AI system that outperforms humans at most economically valuable work.

Whether or not you, the reader, personally believe such a system is possible or desirable, OpenAI declaring AGI would have material business impacts. Wired reported previously that there is a clause in OpenAI’s contract with Microsoft that permits OpenAI to begin charging Microsoft for access to its newest models or cut off access to OpenAI models if OpenAI’s board determines that the company has achieved AGI or generates more than $100 billion in profit.

But apparently, that is not the case today. As co-founder and CEO Sam Altman said, flanked by other OpenAI staffers on an embargoed video call with reporters last night, “the way that most of us define AGI, we’re still missing something quite important — many things that are quite important, actually — but one big one is a model that continuously learns as it’s deployed, and GPT-5 does not.”

I also asked OpenAI the following question directly: “Is OpenAI considering GPT-5 AGI? Will it trigger any changes regarding Microsoft negotiations?”

To which an OpenAI spokesperson responded over email:

“GPT-5 is a significant step toward AGI in that it shows substantial improvements in reasoning and generalization, bringing us closer to systems that can perform a wide range of tasks with human-level capability. However, AGI is still a weakly defined term and means different things to different people. While GPT-5 meets some early criteria for AGI, it doesn’t yet reach the threshold of fully human-level AGI. There are still key limitations in areas like persistent memory, autonomy, and adaptability across tasks. Our focus remains on advancing these capabilities safely, rather than speculating on specific timelines.”

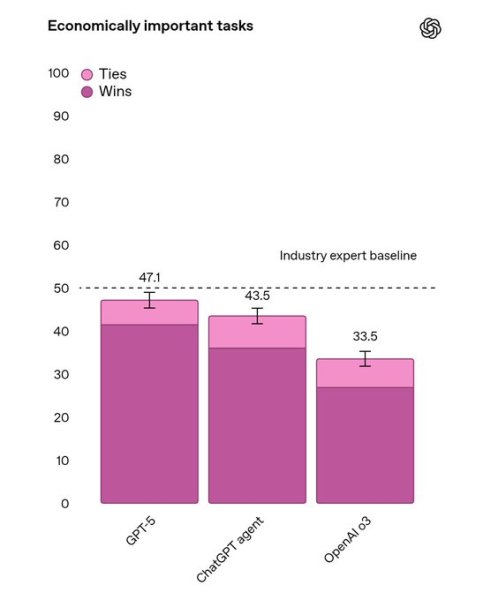

Yet benchmark results shared by OpenAI show GPT-5 approaching, and in some cases matching or beating, the performance of the average human expert on tasks across law, logistics, sales, and engineering.

As OpenAI writes: “When using reasoning, GPT-5 is comparable to or better than experts in roughly half the cases, while outperforming OpenAI o3 and ChatGPT Agent.”

Why use GPT-5?

With numerous alternative models now available from OpenAI and a growing list of competitors, including Chinese startups offering powerful open-source models, what does GPT-5 bring to the table?

Altman described the leap in capability as more than incremental. He compared the experience of using GPT-5 to upgrading from a pixelated display to a retina screen — something users simply don’t want to go back from.

“GPT-3 felt like talking to a high school student,” Altman said. “GPT-4 was like a college student. GPT-5 is the first time it feels like talking to a PhD-level expert in your pocket.”

Among the most impressive capabilities demoed for reporters during the embargoed call was generating the code for a fully working web application from a single, one-paragraph prompt. The result was a French language-learning app with a built-in game: each time the user guided a virtual mouse to collect slices of cheese, an English-to-French phrase appeared. The app had working emoji-inspired characters, a backdrop and setting, and clickable interactive menus.

As Altman stated: “This idea of software on demand will be a defining part of the new GPT-5 era.”

However, this basic capability, going from a prompt to working software, has already been available from prior OpenAI models such as o3, o4-mini, and o4-mini-high, and from rival services like Anthropic’s Claude Artifacts, which I (and many others) have used for months to create interactive first-person and clickable games.

GPT-5’s advantage in making games, apps, and other software from prompts appears to lie in speed (it produced this demo app in mere minutes) and completeness: the result had very few discernible bugs and was fully playable in “one-shot,” as developers like to say, meaning from a single prompt without back-and-forth conversation.

Available to ChatGPT free users and all plans

GPT-5 is not restricted to premium subscribers. OpenAI has made the model available across all ChatGPT tiers, including free users — a deliberate move aligned with the company’s mission to ensure broad benefits from AI.

Free-tier users can access GPT-5 and GPT-5 Mini, subject to usage limits. Exactly what those limits are remains undefined for now, and I’d guess they will change on an irregular cadence depending on demand.

Subscribers to the ChatGPT Plus ($20 per month) tier receive higher usage allowances, while ChatGPT Pro ($200 per month), Team ($30 per month or $240 annually), and Enterprise (variable pricing depending on company size and usage) customers get unlimited or prioritized access.

GPT-5 Pro will become available to Team, Enterprise, and EDU customers in the coming days.

The new unified ChatGPT experience eliminates the need to manually select a model. Once users reach usage limits on GPT-5, the system automatically shifts to GPT-5 mini — a more lightweight but still highly capable fallback.
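
The fallback can be pictured as a simple per-user counter. The sketch below is a toy model under stated assumptions: the cap value and the window reset are hypothetical (OpenAI has not published the limits), and the strings `gpt-5` and `gpt-5-mini` are the API model names mentioned in this article, used here only as labels.

```python
from dataclasses import dataclass


@dataclass
class UsageFallback:
    """Toy model of ChatGPT's fallback from GPT-5 to GPT-5 mini.

    `limit` is a hypothetical per-window message cap; OpenAI has not
    published the actual numbers.
    """
    limit: int
    used: int = 0

    def next_model(self) -> str:
        """Return the model for the next message and record the usage."""
        if self.used < self.limit:
            self.used += 1
            return "gpt-5"
        # Limit reached: keep answering, but with the lighter model.
        return "gpt-5-mini"

    def reset(self) -> None:
        """Start a new usage window (its length is also unspecified)."""
        self.used = 0


if __name__ == "__main__":
    tracker = UsageFallback(limit=2)
    print([tracker.next_model() for _ in range(4)])
```

The key property is that the user never hits a hard stop: once the cap is reached, requests keep flowing to the smaller model until the window resets.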

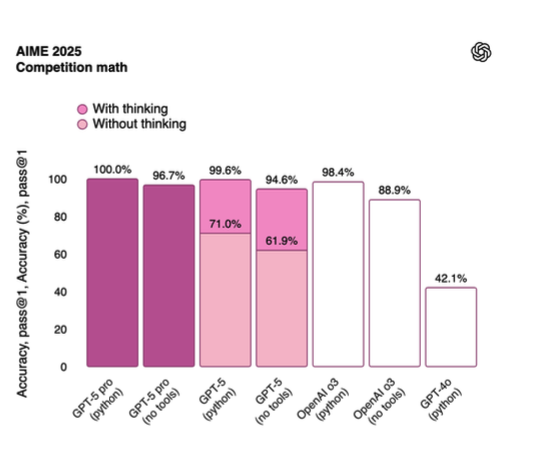

Improved metrics across the board, including 100% in AIME 2025 Math

According to OpenAI, GPT-5 is the most accurate, responsive and context-aware AI system the company has ever shipped.

It reduces hallucinations, handles multi-step reasoning more reliably and generates better-quality code, content, and responses across diverse domains.

The GPT-5 system delivers ~45% fewer factual errors than GPT-4o in real-world traffic, and up to ~80% fewer when using its “thinking” mode.

This mode, which users can trigger by explicitly asking the model to take its time, enables more complex and robust responses — powered by GPT-5 Pro in certain configurations. In tests, GPT-5 Pro sets new state-of-the-art scores on benchmarks like GPQA (88.4%), AIME 2025 math (100% when using Python to answer the questions) and HealthBench Hard (46.2%).

Performance improvements show up across key academic and real-world benchmarks. In coding, GPT-5 sets new state-of-the-art results on SWE-Bench Verified (74.9%) and Aider Polyglot (88%).

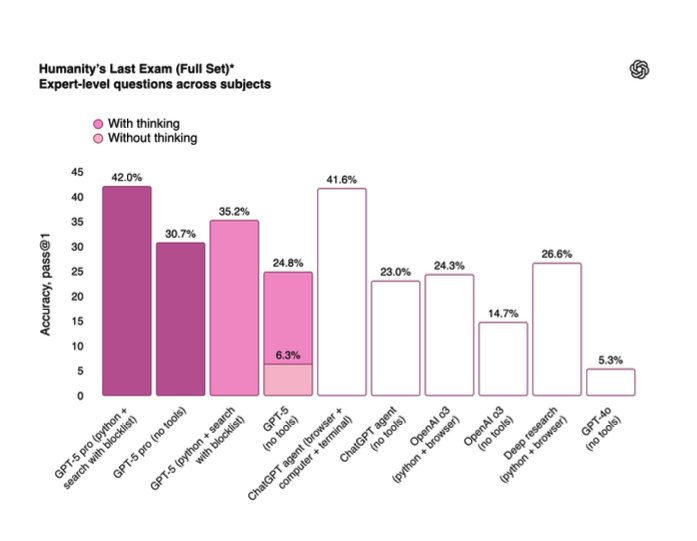

Perhaps most strikingly, on Humanity’s Last Exam, a relatively new benchmark of 2,500 extremely difficult questions designed to stump AI systems, GPT-5 Pro achieves a record-high 42%. That blows away the competition and every prior OpenAI model; only the ChatGPT agent unveiled last month, which controls its own computer and cursor like a human, comes close.

On writing tasks, GPT-5 adapts more smoothly to tone, context and user intent. It is better at maintaining coherence, structuring information clearly and completing complex writing assignments.

The improvements are not just technical — OpenAI’s team emphasized how GPT-5 feels more natural and humanlike in conversation.

Health-related use cases have also been enhanced. While OpenAI continues to caution that ChatGPT is not a replacement for medical professionals, GPT-5 is more proactive about flagging concerns, helping users interpret medical results and guiding them through preparing for appointments or evaluating options. The system also adjusts answers based on user location, background knowledge and context — leading to safer and more personalized assistance.

One of the most significant updates is in safe completions, a new system that helps GPT-5 avoid abrupt refusals or unsafe outputs.

Instead of declining queries outright, GPT-5 aims to provide the most helpful response within its safety boundaries and explains when it cannot assist — a change that dramatically reduces unnecessary denials while maintaining trustworthiness.

New developer tools for using GPT-5 through the API

GPT-5 is also a major upgrade for developers working on agentic systems and tool-assisted workflows. OpenAI has introduced a suite of developer-friendly controls in the GPT-5 API, including:

- Free-form function calling – Tools can now accept raw strings such as SQL queries or shell commands, without requiring JSON structure.

- Reasoning effort control – Developers can toggle between rapid responses and deeper analytical processing depending on the task.

- Verbosity control – A new parameter enables users to select the level of detail in responses, ranging from brief to standard to detailed.

- Structured outputs with grammar constraints – Developers can now guide outputs using custom grammars or regular expressions.

- Tool call preambles – GPT-5 can now explain its reasoning before using tools or making external requests.

For the first time, developers can also set reasoning effort to a new option called ‘minimal’. This setting keeps the model operating as a reasoning model while spending as little effort as possible on reasoning, optimizing for speed. “This is so that you can use these reasoning models, but with minimalization,” one OpenAI researcher explained during the company’s announcement livestream on YouTube earlier today, “so that they can slot into the very fastest and most latency sensitive applications.”

The researcher stressed that minimal mode means developers don’t have to choose between accuracy and responsiveness: “Now you don’t actually have to choose between a bunch of models… you can use GPT-5 for all of your use cases, and just dilute reasoning effort.”

This approach aims to make GPT-5 viable for ultra-low-latency scenarios like live customer interactions, fast-refresh dashboards, and real-time tool integrations, while still leveraging the reasoning capabilities that differentiate it from smaller or older models.
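
To make the reasoning-effort and verbosity controls concrete, the Python sketch below builds keyword arguments for a GPT-5 request. It assumes the OpenAI Responses API fields `reasoning={"effort": ...}` and `text={"verbosity": ...}` as documented at launch; the helper `build_gpt5_request` is hypothetical, and the field names and allowed values should be checked against current API documentation before use.

```python
from typing import Any

# Allowed values as described at launch (assumption: verify in current API docs).
REASONING_EFFORTS = ("minimal", "low", "medium", "high")
VERBOSITY_LEVELS = ("low", "medium", "high")


def build_gpt5_request(prompt: str, effort: str = "medium",
                       verbosity: str = "medium",
                       model: str = "gpt-5") -> dict[str, Any]:
    """Build keyword arguments for a Responses API call (hypothetical helper)."""
    if effort not in REASONING_EFFORTS:
        raise ValueError(f"unknown reasoning effort: {effort!r}")
    if verbosity not in VERBOSITY_LEVELS:
        raise ValueError(f"unknown verbosity: {verbosity!r}")
    return {
        "model": model,
        "input": prompt,
        "reasoning": {"effort": effort},
        "text": {"verbosity": verbosity},
    }


# Latency-sensitive path: minimal reasoning, terse answers.
fast = build_gpt5_request("Classify this ticket: 'refund not received'",
                          effort="minimal", verbosity="low")
# Harder analysis: full reasoning, detailed answers.
deep = build_gpt5_request("Review this contract clause for risks.",
                          effort="high", verbosity="high")

# With the official SDK installed and an API key configured, a call might be:
#   from openai import OpenAI
#   response = OpenAI().responses.create(**fast)
#   print(response.output_text)
```

Both paths use the same model and differ only in configuration, which is the trade-off the researcher describes: dial effort and verbosity per request instead of switching between models.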

The API itself is getting major upgrades. A researcher explained that the new custom tools feature moves beyond JSON-only outputs: “Custom tools are just free form plain text,” with the option to enforce formats using “a regular expression or even a context-free grammar… super useful if you have your own SQL fork and specify that the models always follow that format.”
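
A custom tool constrained by a regular expression might be declared as in the following sketch. The tool name, the restricted SQL pattern, and the exact `format` payload shape (`"type": "grammar"`, `"syntax": "regex"`) are assumptions for illustration; consult OpenAI's API reference for the precise schema. Because the API's regex engine may not agree with Python's `re` in every detail, the pattern sticks to basic syntax, and the receiving code re-checks each raw string before acting on it.

```python
import re

# Hypothetical restricted SQL dialect: single-table SELECTs with optional LIMIT.
READONLY_SQL = r"SELECT (\*|[a-z_]+(, [a-z_]+)*) FROM [a-z_]+( LIMIT [0-9]+)?;"

# Assumed shape of a GPT-5 custom tool constrained by a regex (verify in docs).
sql_tool = {
    "type": "custom",
    "name": "run_readonly_sql",  # hypothetical tool name
    "description": "Run a read-only SQL query against the analytics database.",
    "format": {"type": "grammar", "syntax": "regex", "definition": READONLY_SQL},
}


def accept_tool_input(raw: str) -> bool:
    """Defensive check: the model's raw string must match the same pattern."""
    return re.fullmatch(READONLY_SQL, raw) is not None


if __name__ == "__main__":
    for candidate in ("SELECT id, email FROM users LIMIT 10;",
                      "DROP TABLE users;"):
        print(candidate, "->", accept_tool_input(candidate))
```

Validating on the receiving side is cheap insurance: even if the grammar constraint is honored, the function that actually runs the query should not trust its input blindly.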

Developers also gain tool call preambles, described as “the model’s ability to output explanation of what it’s about to do before it calls tools,” which can be switched on or tailored, along with a verbosity parameter that sets responses to “low, medium and high.”

OpenAI also touted GPT-5’s leap in coding performance. On SWE-Bench Verified, a benchmark built from real-world Python software issues, the model scored 74.9%, beating OpenAI o3’s 69.1%, and it hit 88% on Aider Polyglot, which covers multiple programming languages. Human testers preferred its code “70% of the time for its improved aesthetic abilities, but also better capabilities overall.”

Developers can access GPT-5 through OpenAI’s platform for the following prices:

- gpt-5: $1.25 / $10 per 1 million input / output tokens (with up to 90% input cache discount)

- gpt-5-mini: $0.25 / $2 per 1 million input / output tokens

- gpt-5-nano: $0.05 / $0.40 per 1 million input / output tokens

The context window now spans 256,000 tokens (about the length of a 600-800 page book of text), allowing GPT-5 to handle substantially larger documents and more extensive conversations than its predecessor, GPT-4 Turbo.

For those who require even more, GPT-4.1 (which supports 1 million-token context windows) remains available.

Compared with its primary competitors, Anthropic and Google, OpenAI’s GPT-5 models are on par or cheaper for developers to access through the API, placing more downward pressure on the cost of intelligence.

| Model / Tier | Input Cost (per 1M tokens) | Output Cost (per 1M tokens) | Notes |
|---|---|---|---|
| GPT‑5 | $1.25 (before caching) | $10 | Up to 90% discount on cached input |
| GPT‑5‑mini | $0.25 | $2 | |
| GPT‑5‑nano | $0.05 | $0.40 | |
| Claude Sonnet 4 | $3 | $15 | Up to 90% prompt-caching discount |
| Claude Opus 4 | $15 | $75 | High-end model aimed at complex tasks |
| Gemini 2.5 Pro (≤200K) | $1.25 | $10 | Interactive prompts up to 200K tokens |
| Gemini 2.5 Pro (Batch ≤200K) | $0.625 | $5 | Batch processing reduces cost |
| Gemini 2.5 Pro (>200K) | $2.50 | $15 | For long prompts over 200K tokens |
| Gemini 2.5 Flash‑Lite | $0.10 | $0.40 | Google’s most cost-efficient LLM to date |
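
The list prices in the table translate into per-request costs with simple arithmetic: tokens divided by one million, times the per-million rate. The sketch below encodes three of the table's rows and applies the cached-input discount; it assumes the full 90% discount applies to every cached token and, for Claude, ignores Anthropic's cache-write surcharge. The names `Pricing` and `request_cost` are hypothetical, and prices may change after launch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_m: float            # USD per 1M uncached input tokens
    output_per_m: float           # USD per 1M output tokens
    cached_discount: float = 0.0  # fraction taken off the input rate for cached tokens


# Launch list prices from the comparison table (subject to change).
PRICES = {
    "GPT-5": Pricing(1.25, 10.0, cached_discount=0.90),
    # Cache reads only; Anthropic's cache-write surcharge is ignored here.
    "Claude Sonnet 4": Pricing(3.0, 15.0, cached_discount=0.90),
    "Gemini 2.5 Pro (<=200K)": Pricing(1.25, 10.0),
}


def request_cost(p: Pricing, input_tokens: int, output_tokens: int,
                 cached_tokens: int = 0) -> float:
    """USD cost of one request; cached_tokens is the cached part of input_tokens."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    cached_rate = p.input_per_m * (1.0 - p.cached_discount)
    # tokens x (USD per 1M tokens) gives cost x 1,000,000.
    scaled_cost = ((input_tokens - cached_tokens) * p.input_per_m
                   + cached_tokens * cached_rate
                   + output_tokens * p.output_per_m)
    return scaled_cost / 1_000_000


if __name__ == "__main__":
    for name, price in PRICES.items():
        print(f"{name:24} ${request_cost(price, 100_000, 10_000):.4f}")
    cached = request_cost(PRICES["GPT-5"], 100_000, 10_000, cached_tokens=80_000)
    print(f"{'GPT-5, 80K cached':24} ${cached:.4f}")
```

For a request with 100,000 input and 10,000 output tokens, this gives $0.225 for GPT-5 and Gemini 2.5 Pro and $0.45 for Claude Sonnet 4; if 80,000 of GPT-5's input tokens are cached, the cost drops to about $0.135.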

Early enterprise testers have high praise

Several high-profile companies have already adopted GPT-5 in early trials. JetBrains is using it to power intelligent developer tools, and Notion has integrated GPT-5 to improve document generation and productivity workflows.

At AI developer tool startup Cursor, co-founder and CEO Michael Truell said in a quote provided to reporters by OpenAI: “Our team has found GPT-5 to be remarkably intelligent, easy to steer, and even to have a personality we haven’t seen in any other model. It not only catches tricky, deeply-hidden bugs but can also run long, multi-turn background agents to see complex tasks through to the finish—the kinds of problems that used to leave other models stuck. It’s become our daily driver for everything from scoping and planning PRs to completing end-to-end builds. ”

Other customers report major gains: GitLab cites a drop in tool call volume, GitHub notes improvements in reasoning across large codebases, and Uber is testing GPT-5 for real-time, domain-aware service applications. At Amgen, the model has already improved output quality and reduced ambiguity in scientific tasks.

More updates still to come

GPT-5’s launch coincides with several new features coming now and soon to ChatGPT.

Users can now personalize the interface with chat colors (with exclusive options for paid users) and experiment with preset personalities like Cynic, Robot, Listener, and Nerd — designed to match different communication styles.

ChatGPT will also soon support seamless integration with Gmail, Google Calendar, and Google Contacts. Once enabled, these services will be automatically referenced during chats, eliminating the need for manual toggling. These connectors launch for Pro subscribers next week, with broader availability to follow.

A new Advanced Voice mode understands instructions more effectively and enables users to adjust tone and delivery. Voice will be available across all user tiers and included in custom GPTs.

In 30 days, OpenAI will retire the older “Standard Voice Mode” and fully transition to this unified experience.

With safer design, more robust reasoning, expanded developer tooling, and broad user access, GPT-5 reflects a maturing AI ecosystem that’s inching closer to real-world utility on a global scale.

OpenAI’s approach this time is less about flash and more about integration. GPT-5 isn’t a separate offering that users have to seek out — it’s simply there, powering the tools millions already use, making them smarter and more capable and unlocking a whole new raft of use cases for developers.