You’ve labeled your services “AI-powered” after integrating LLMs. The home page of your website proudly showcases the impact of those services through interactive demos and case studies. It is also your company’s first mark on the global generative AI landscape.

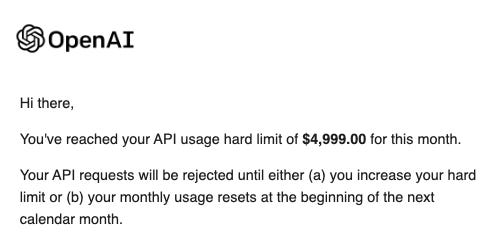

Your small but loyal user base is loving an enhanced customer experience, and you can see the potential for growth on the horizon. But then, just three weeks into the month, this email from OpenAI blows you away:

Just a week ago, you were talking to customers and assessing product-market fit (PMF). Now thousands of users have flocked to your website (anything can go viral on social media these days) and crashed your AI-powered services.

As a result, your once-reliable service is not only leaving existing users frustrated but is also affecting new ones.

A quick and obvious fix is to revive the services immediately by increasing the usage limit.

However, this temporary solution comes with a sense of unease. You can’t help but feel like you’re locked into a dependency on a single provider, with limited control over your own AI and the associated costs.

‘Should I DIY?’ you ask yourself

Fortunately, you know that open-source large language models (LLMs) are a reality. Thousands of such models are available for instant use on platforms like Hugging Face, which opens up the possibility of self-hosting.

However, the most powerful LLMs you’ve come across have billions of parameters, are hundreds of gigabytes in size and take considerable effort to scale. Unlike traditional models, you can’t just plug them into a real-time system that demands low latency.

While you have full confidence in your team’s abilities to build the necessary infrastructure, the real concern lies in the cost implications of such a transition, including:

- Fine-tuning cost

- Hosting cost

- Serving cost

So, the big question is: do you increase the usage limit or do you go down the self-hosting, aka “ownership” route?

A bit of math with Llama 2

First of all, don’t rush. This is a big decision.

If you talk to your machine learning (ML) engineers, they’ll probably tell you that Llama 2, an open-source LLM, looks like a good model to proceed with because it performs about as well on most tasks as GPT-3, which is your current model.

You’ll also learn that it’s available in three model sizes — 7, 13 and 70 billion parameters — and decide to proceed with the largest to stay competitive with the current OpenAI model you’re using.

Llama 2 was trained using bfloat16, so each parameter consumes 2 bytes. At 70 billion parameters, this means that the model size would be 140 GB.

If this sounds like a big model to fine-tune, don’t worry. With LoRA, you don’t need to fine-tune the entire model before deployment.

In fact, you might only need to fine-tune ~0.1% of the total parameters, which is 70M, consuming 0.14 GB under the bfloat16 representation.
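The size estimates above can be reproduced with a few lines of arithmetic. This is a minimal sketch that assumes decimal gigabytes (1 GB = 1e9 bytes) and takes the 70M LoRA parameter count from the text; the function and constant names are illustrative.

```python
# Rough weight-memory estimate from the figures quoted in the text.
BYTES_PER_PARAM_BF16 = 2   # bfloat16 stores each parameter in 2 bytes
BYTES_PER_GB = 1e9         # assumption: decimal gigabytes

def weights_gb(num_params: float) -> float:
    """Memory needed to hold `num_params` bfloat16 parameters, in GB."""
    return num_params * BYTES_PER_PARAM_BF16 / BYTES_PER_GB

base_params = 70e9   # Llama 2 70B
lora_params = 70e6   # ~0.1% of the base model, per the text

base_gb = weights_gb(base_params)
lora_gb = weights_gb(lora_params)

print(f"Base weights: {base_gb:.0f} GB")
print(f"LoRA weights: {lora_gb:.2f} GB")
```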

Impressive, right?

To accommodate memory overheads during fine-tuning (like backpropagation, storing activations and storing the dataset), it is wise to keep ~5X more memory than the trainable parameters consume.

Let’s break this down:

- Weights of the Llama 2 70B model are frozen during LoRA, so they add no memory overhead → Memory requirement = 140 GB.

- To tune LoRA layers, however, we need to maintain 0.14 GB*(5x) = 0.7 GB.

- This totals ~141 GB during fine-tuning.
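The breakdown above amounts to one line of arithmetic. The sketch below assumes the ~5X overhead applies only to the trainable LoRA weights, as the text describes; the names are illustrative.

```python
# Fine-tuning memory: frozen base weights plus LoRA weights with training overhead.
FROZEN_BASE_GB = 140.0    # base model weights, not updated during LoRA
LORA_WEIGHTS_GB = 0.14    # trainable LoRA parameters
TRAINING_OVERHEAD = 5     # ~5X rule of thumb from the text

def finetune_memory_gb(base_gb: float, lora_gb: float, overhead: float) -> float:
    """Total memory in GB: frozen weights plus inflated trainable weights."""
    return base_gb + lora_gb * overhead

finetune_gb = finetune_memory_gb(FROZEN_BASE_GB, LORA_WEIGHTS_GB, TRAINING_OVERHEAD)
print(f"Fine-tuning memory: {finetune_gb:.1f} GB")
```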

Assuming you don’t have the training infrastructure yet, let’s suppose you prefer using AWS. Based on the AWS EC2 on-demand pricing, compute will cost ~$2.80 per hour, or ~$67/day for fine-tuning. That is not a huge cost, since fine-tuning is unlikely to run for many days.
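To see how the fine-tuning bill scales with run length, here is a small sketch using the ~$2.80 hourly rate quoted above. The run lengths in the loop are hypothetical and chosen only for illustration.

```python
# Compute cost of a fine-tuning run at the on-demand rate quoted in the text.
HOURLY_RATE_USD = 2.80

def run_cost_usd(hours: float, hourly_rate: float = HOURLY_RATE_USD) -> float:
    """Cost of keeping one instance busy for `hours` hours."""
    return hours * hourly_rate

for hours in (6, 24, 72):  # hypothetical run lengths
    print(f"{hours:>3} h -> ${run_cost_usd(hours):.2f}")
```

A full day comes to about $67, matching the figure above.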

AI is the opposite of a restaurant: The primary cost lies in serving, not preparing

In deployment, you need to maintain two sets of weights in memory:

- Model weights, which consume 140 GB of memory.

- LoRA fine-tuning weights, which consume 0.14 GB of memory.

- This totals to 140.14 GB.

Of course, you can do away with the gradient computation, but it is still recommended to keep ~1.5X more memory (roughly 210 GB) to take care of any unexpected overheads.
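The serving estimate follows the same pattern. In this sketch the 1.5X headroom factor is the rule of thumb from the text, not a measured value, and the names are illustrative.

```python
# Serving memory: base weights plus LoRA weights, with headroom for overheads.
BASE_WEIGHTS_GB = 140.0
LORA_WEIGHTS_GB = 0.14
SERVING_HEADROOM = 1.5   # rule-of-thumb multiplier from the text

loaded_gb = BASE_WEIGHTS_GB + LORA_WEIGHTS_GB
provisioned_gb = loaded_gb * SERVING_HEADROOM

print(f"Weights in memory: {loaded_gb:.2f} GB")
print(f"Memory to provision: {provisioned_gb:.0f} GB")
```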

Based on the AWS EC2 on-demand pricing (again), GPU computing would cost ~$3.70 per hour, which is ~$90/day to keep the model loaded in production memory and respond to incoming requests.

This comes out to be approximately $2,700/month.

Another thing you need to take into account is that unexpected failures happen all the time. If you have no fallback mechanism, your users will stop receiving model predictions. If you want to prevent that from happening, you need to maintain another redundant model just in case the request fails on the first model.

So this takes your costs to $180/day or $5,400/month. That is close to what OpenAI is costing you right now.
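The serving figures can be checked with a short calculation. This sketch assumes a 30-day month and treats the redundant model as a second identical instance; the unrounded results land slightly below the rounded $90/day and $180/day figures used in the text.

```python
# Always-on serving cost for one or more identical instances.
HOURLY_RATE_USD = 3.70
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30   # assumption: a 30-day month

def serving_cost_usd(replicas: int) -> tuple[float, float]:
    """Return (daily, monthly) cost for `replicas` always-on instances."""
    daily = HOURLY_RATE_USD * HOURS_PER_DAY * replicas
    return daily, daily * DAYS_PER_MONTH

for replicas in (1, 2):
    daily, monthly = serving_cost_usd(replicas)
    print(f"{replicas} instance(s): ${daily:.2f}/day, ${monthly:.2f}/month")
```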

At what point do OpenAI and open source break even?

If you continue to use OpenAI, here’s how many words you can process every day to match the fine-tuning and serving cost incurred with Llama 2 above.

Based on OpenAI pricing, fine-tuning GPT-3.5 Turbo costs $0.0080 / 1K tokens.

- Assuming that most words have two tokens, to match the fine-tuning cost of the open-source Llama 2 70B model ($67/day), you would need to provide approximately 4.2M words to the OpenAI model.

- The average number of words on an A4 page is usually 300, which means we can provide the model with ~14,000 pages worth of data to match open-source fine-tuning costs, which is huge.
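The fine-tuning break-even above can be worked out as follows. This sketch uses the article's assumptions of two tokens per word and 300 words per page; the function name is illustrative.

```python
# How many words of training data cost as much as one day of self-hosted fine-tuning?
FINETUNE_PRICE_PER_1K_TOKENS = 0.008   # GPT-3.5 Turbo fine-tuning price from the text
TOKENS_PER_WORD = 2                    # article's assumption
WORDS_PER_PAGE = 300                   # article's assumption

def break_even_words(budget_usd: float, price_per_1k_tokens: float) -> float:
    """Words that `budget_usd` buys at the given per-1K-token price."""
    tokens = budget_usd / price_per_1k_tokens * 1000
    return tokens / TOKENS_PER_WORD

words = break_even_words(67.0, FINETUNE_PRICE_PER_1K_TOKENS)
pages = words / WORDS_PER_PAGE

print(f"Break-even: {words / 1e6:.2f}M words, about {pages:,.0f} pages")
```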

It is likely that you don’t have that much fine-tuning data, so using OpenAI will always cost less for fine-tuning.

Another point that might be obvious is that this fine-tuning cost is tied not to training time but to the amount of data the model is fine-tuned on. That is not the case when fine-tuning open-source models, where the cost depends on both the amount of data and the time you use AWS compute.

Regarding the serving cost, based on the OpenAI pricing page, a fine-tuned GPT-3.5 Turbo costs $0.003/1K tokens for inputs and $0.006/1K tokens for outputs.

- Let’s consider $0.004/1K tokens on average. To arrive at a cost of $180/day, we would need to send ~22.2M words daily through the API.

- That is more than 74,000 pages worth of data, at 300 words per page.
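The serving break-even can be sketched the same way. The article does not state how the $0.004 average was derived; a 2:1 input-to-output token mix is one assumption that reproduces it. With that price, the calculation gives roughly 22.5M words per day, within a couple of percent of the figure quoted above.

```python
# Daily word volume at which API serving costs as much as two self-hosted instances.
INPUT_PRICE_PER_1K = 0.003
OUTPUT_PRICE_PER_1K = 0.006
TOKENS_PER_WORD = 2      # article's assumption
WORDS_PER_PAGE = 300     # article's assumption

def blended_price(input_share: float) -> float:
    """Average per-1K-token price when `input_share` of all tokens are inputs."""
    return input_share * INPUT_PRICE_PER_1K + (1 - input_share) * OUTPUT_PRICE_PER_1K

price = blended_price(2 / 3)                 # assumed 2:1 input:output mix
tokens_per_day = 180.0 / price * 1000        # $180/day self-hosting budget
words_per_day = tokens_per_day / TOKENS_PER_WORD
pages_per_day = words_per_day / WORDS_PER_PAGE

print(f"Blended price: ${price:.4f} per 1K tokens")
print(f"Break-even: {words_per_day / 1e6:.1f}M words/day, about {pages_per_day:,.0f} pages")
```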

One good thing, however, is that you don’t have to ensure the model is up all day as OpenAI provides pay-per-use pricing.

If your model is never used, you never pay.

Summing up: When does ownership actually make sense?

Transitioning to self-hosted AI may seem like a tempting endeavor at first. But beware of the hidden costs and headaches that come with it.

Apart from the occasional sleepless night that leaves you wondering why your AI-powered service is down, almost all the difficulties of managing LLMs in production systems disappear if you use third-party providers.

This is especially true when your service is not primarily “AI” but rather something that relies on AI.

For large corporations, the $65,000/year ownership cost may be a drop in the bucket, but for most businesses, it’s a figure that can’t be ignored.

And, let’s not forget the additional expenses like talent and maintenance, which can easily inflate the total cost to a staggering $200,000-250,000+/year.

Sure, owning the model from the get-go has its perks, like maintaining control over your data and usage.

But to make self-hosting viable, you’ll need a user request load that far exceeds the ~22.2M words/day mark, along with the resources to manage the talent and logistics.
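As a rough illustration of that threshold, the sketch below compares the daily API bill with the fixed $180/day self-hosting cost at a few hypothetical traffic levels. It covers compute only and uses the $0.004 per 1K tokens average and two tokens per word from earlier in the article.

```python
# Compare pay-per-use API cost with fixed self-hosting cost for a given daily volume.
SELF_HOSTED_USD_PER_DAY = 180.0   # two always-on instances, from the text
API_PRICE_PER_1K_TOKENS = 0.004   # average serving price used in the text
TOKENS_PER_WORD = 2               # article's assumption

def api_cost_per_day(words_per_day: float) -> float:
    """Daily API bill for processing `words_per_day` words."""
    return words_per_day * TOKENS_PER_WORD / 1000 * API_PRICE_PER_1K_TOKENS

def cheaper_option(words_per_day: float) -> str:
    """Name the cheaper option on compute cost alone."""
    if api_cost_per_day(words_per_day) > SELF_HOSTED_USD_PER_DAY:
        return "self-hosted"
    return "API"

for words in (1e6, 10e6, 50e6):  # hypothetical daily volumes
    print(f"{words / 1e6:>4.0f}M words/day: API ${api_cost_per_day(words):,.0f} -> {cheaper_option(words)}")
```

Talent and maintenance costs are left out here; including them would push the break-even volume higher still.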

It is unlikely that owning the model instead of using an API is financially beneficial for most use cases.

Avi Chawla is a data scientist and creator at AIport.