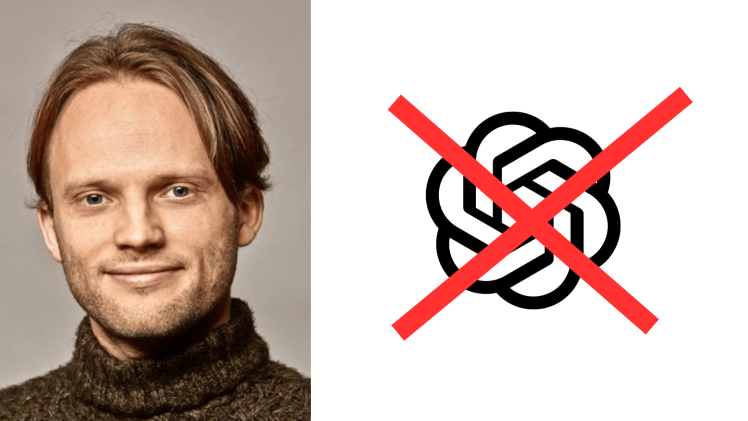

Earlier this week, the two co-leaders of OpenAI’s superalignment team, former chief scientist Ilya Sutskever and researcher Jan Leike, announced within hours of each other that they were resigning from the company.

This was notable not only given their seniority at OpenAI (Sutskever was a co-founder), but because of what they were working on: superalignment refers to the development of systems and processes to control superintelligent AI models, ones that exceed human intelligence.

But following the departures of the two superalignment co-leads, OpenAI’s superalignment team has reportedly been disbanded, according to a new article from Wired (where my wife works as editor-in-chief).

Today, Leike took to his personal account on X to post a lengthy thread of messages excoriating OpenAI and its leadership for neglecting “safety” in favor of “shiny products.”

As he put it in one message of his thread on X: “over the past years, safety culture and processes have taken a backseat to shiny products.”

Leike, who joined the company in early 2021, also stated openly that he had clashed with OpenAI’s leadership. That presumably means CEO Sam Altman (whom Leike’s direct colleague and superalignment co-lead Sutskever had moved to oust late last year), president Greg Brockman, chief technology officer Mira Murati, or others at the top of the company.

Leike stated in one post: “I have been disagreeing with OpenAI leadership about the company’s core priorities for quite some time, until we finally reached a breaking point.”

He also stated: “we urgently need to figure out how to steer and control AI systems much smarter than us.”

A little less than a year ago, in July 2023, OpenAI pledged to dedicate 20% of its total computational resources (aka “compute”), namely its expensive Nvidia GPU (graphics processing unit) clusters used to train AI models, toward this effort to superalign superintelligences.

All of this was supposedly part of OpenAI’s quest to responsibly develop artificial general intelligence (AGI), which it has defined in its company charter as “highly autonomous systems that outperform humans at most economically valuable work.”

Leike said that, despite this pledge, “my team has been sailing against the wind. Sometimes we were struggling for compute and it was getting harder and harder to get this crucial research done.”

Read Leike’s full thread on X.

Several hours after Leike posted, Altman quoted his post in a new one on X, writing: “i’m super appreciative of @janleike’s contributions to openai’s alignment research and safety culture, and very sad to see him leave. he’s right we have a lot more to do; we are committed to doing it. i’ll have a longer post in the next couple of days.”

The news is likely to be a major black eye for OpenAI amid its rollout of the new GPT-4o multimodal foundation model and ChatGPT desktop Mac app announced on Monday, as well as a headache for its big investor and ally Microsoft, which is preparing for a large conference, Build, next week.

We’ve reached out to OpenAI for a statement on Leike’s remarks and will update when we hear back.