With the impending rise of AI PCs, Qualcomm wants to help developers get ahead of the game. The company is releasing an update to its AI Hub that will support the Snapdragon X processor series. App builders can now tap into Qualcomm’s newest chips that power on-device AI, optimizing their programs to work efficiently on the next generation of Windows computers and laptops.

In addition, the Qualcomm AI Hub is opening up to more models. When it debuted in February, it had 75 pre-trained models; today there are more than 100. Going forward, developers are no longer limited to Qualcomm's catalog: they can bring their own models.

Training models to work on PCs

Qualcomm’s AI Hub is a good place for developers to start when looking for models from which to build their applications. If you’re building for a smart device, robot, or drone, you can find a model. The same applies to developing an AI-powered app for mobile devices and, soon enough, computers. Many of the popular models featured are also available on Hugging Face and GitHub. All can be easily deployed on Qualcomm devices and run on CPUs, GPUs, and NPUs using TensorFlow Lite or Qualcomm’s AI Engine Direct.

The support for Snapdragon X is a bid to stay one step ahead of the competition. Rivals AMD and Intel have also announced AI PC processors, which makes today's announcement as much strategic as technical. Thanks to integrations with ONNX Runtime, Hugging Face Optimum and Llama.cpp, developers can run models on the Snapdragon X Series platforms to build AI-powered Windows applications. That means their apps could potentially run on a host of computers from Acer, ASUS, Dell, HP, Lenovo, Samsung and OEM7, all of which have scheduled PCs powered by Qualcomm's Snapdragon X Elite or X Plus chips.
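To make the CPU/GPU/NPU point concrete, here is a minimal sketch of how a Windows app using ONNX Runtime might pick where a model runs. ONNX Runtime takes an ordered list of execution providers and falls back down that list, so the helper below simply prefers the Qualcomm NPU (`QNNExecutionProvider`), then the GPU via DirectML (`DmlExecutionProvider`), then the CPU. The preference order, the `pick_providers` helper and the `model.onnx` filename are illustrative assumptions, not Qualcomm's documented setup; in practice the QNN provider also typically needs provider options (such as a backend library path), so check the ONNX Runtime QNN documentation before relying on this.

```python
from typing import Iterable, List

# Provider names as reported by onnxruntime.get_available_providers().
# The list order is an assumed preference: NPU first, then GPU, then CPU.
PREFERRED_ORDER = [
    "QNNExecutionProvider",  # Qualcomm NPU via QNN (only present in QNN-enabled builds)
    "DmlExecutionProvider",  # GPU via DirectML on Windows
    "CPUExecutionProvider",  # universal fallback
]


def pick_providers(available: Iterable[str]) -> List[str]:
    """Return the preferred providers that are actually available, in priority order.

    CPUExecutionProvider is always included so a session can still be created.
    """
    available_set = set(available)
    chosen = [p for p in PREFERRED_ORDER if p in available_set]
    if "CPUExecutionProvider" not in chosen:
        chosen.append("CPUExecutionProvider")
    return chosen


if __name__ == "__main__":
    # On a real machine this list would come from onnxruntime, e.g.:
    #   import onnxruntime as ort
    #   providers = pick_providers(ort.get_available_providers())
    #   session = ort.InferenceSession("model.onnx", providers=providers)  # hypothetical model file
    print(pick_providers(["CPUExecutionProvider", "QNNExecutionProvider"]))
```

Always keeping the CPU provider at the end means the same binary still runs on machines without an NPU or a DirectML-capable GPU, just more slowly.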

Qualcomm has also enlisted Andrew Ng's DeepLearning.ai to offer online courses that teach developers about on-device AI and how to implement it in their programs.

Bring your own AI

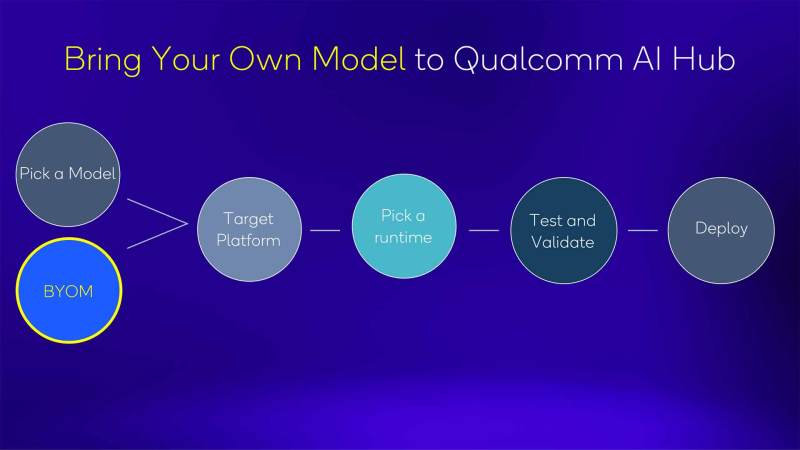

Who knows your customers' data or domain better than you? While many developers will take advantage of the more than 100 models available on Qualcomm's AI Hub, some apps may require specialized models.

Developers can now upload, optimize and compile small language models (SLMs) and large language models (LLMs) specifically for Qualcomm and Snapdragon platforms. These models can then be tested and validated quickly on cloud-hosted devices with just a few lines of code.

Imagine you're a researcher trying to develop a new drug or compound. None of the popular models may suit the task, so you might build one trained on specific scientific data. You can then upload that model to Qualcomm's AI Hub and deploy it on AI PCs across your organization, letting fellow researchers run it locally instead of relying on the cloud.

The same approach applies in any industry or profession. Running models optimized for the PC locally helps reduce response latency and enables faster iteration.