
Zyphra Technologies is introducing a new foundation model to further decentralize artificial intelligence. Zamba is an open-source, 7-billion-parameter hybrid model that combines Mamba blocks (a state space model, or SSM, architecture) with a single globally shared attention layer. Zyphra says the design can bring AI to more devices at a much lower inference cost.

AI for all devices

“Our dream and aspiration is to build your personal AI,” Krithik Puthalath, Zyphra’s CEO, told VentureBeat. “Our fundamental mission is to connect people better. Devices [and] social media promised a world where we would be a lot more connected, happier, mindful and present. And… it’s far from that. And our dream and our vision is the future of AI.”

“We think fundamentally, the root problem is that AI from the big companies is centralized,” Puthalath goes on to say. “So in the pursuit of [artificial general intelligence], OpenAI, Anthropic and all these guys are building this monolithic model in the cloud: one model for everyone. We’re starting to see the limitations of that approach, which is that we don’t trust these things. So, AI doesn’t feel like our own. If you use ChatGPT, it gives you great answers, and there are reasoning capabilities. But the ability to have memory, personalization, actually curated to who you are and learning about you, we’re missing that.”

Don’t discount smaller LLMs

A model with seven billion parameters is small next to the flagship models from OpenAI, Anthropic and Meta, whose largest versions run to tens of billions of parameters or more. However, Zyphra deliberately chose this size because it believes a small language model (SLM) is the best way to put AI on more devices.


The company’s first model, BlackMamba, has 1 billion parameters, but Beren Millidge, Zyphra co-founder and chief scientist, describes it as a “toy,” a proof of concept showing that Zyphra’s architectural experiments worked. So, while 1 billion parameters is a good starting point, 7 billion is “something you can actually have a serious conversation with.”

“Seven billion is actually the perfect scale to be able to run it locally on almost all devices you have,” he explains. By contrast, a model with several hundred billion to a trillion parameters likely requires a large cluster of GPUs, which most individuals don’t have access to. This, according to Millidge, is why Zyphra is targeting this scale.
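Millidge’s point about scale largely comes down to memory. The back-of-the-envelope sketch below estimates how much memory a model’s weights alone occupy at common numeric precisions. It assumes weights dominate memory use and ignores activations, cached state and runtime overhead, so real requirements are higher; the precision options and the 300B comparison size are illustrative choices, not figures from Zyphra.

```python
# Rough estimate of the memory needed just to hold a model's weights.
# Assumption: weights dominate memory use; activations, cached state
# and runtime overhead are ignored, so real requirements are higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Illustrative sizes: a 7B model versus much larger cloud-scale models.
    for label, n in [("7B", 7e9), ("300B", 300e9), ("1T", 1e12)]:
        row = ", ".join(
            f"{p}: {weight_memory_gb(n, p):,.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{label} -> {row}")
```

For 7 billion parameters this works out to 14 GB at 16-bit precision and 3.5 GB at 4-bit, within reach of consumer hardware, while a trillion-parameter model needs about 2,000 GB at 16-bit, which is multi-GPU territory.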

“This is the decentralization play,” Puthalath remarks. “These bigger models have to be run in the cloud. If you can make smaller, more performant models for simple use cases that we, as consumers, want, then that can be done at a smaller scale.” His company is not alone in pushing for smaller, more specialized language models.

AI will continue to be built into our devices, and storing models locally may be the best way to ensure that responses arrive promptly. No one wants to wait for data to travel from the cloud to their smartwatch, phone, TV, computer, tablet or smart glasses; responses need to feel real-time. Running models locally could also make AI more affordable by reducing the cost of inference, freeing model makers to spend more resources on innovation.

Stacking up against competitors

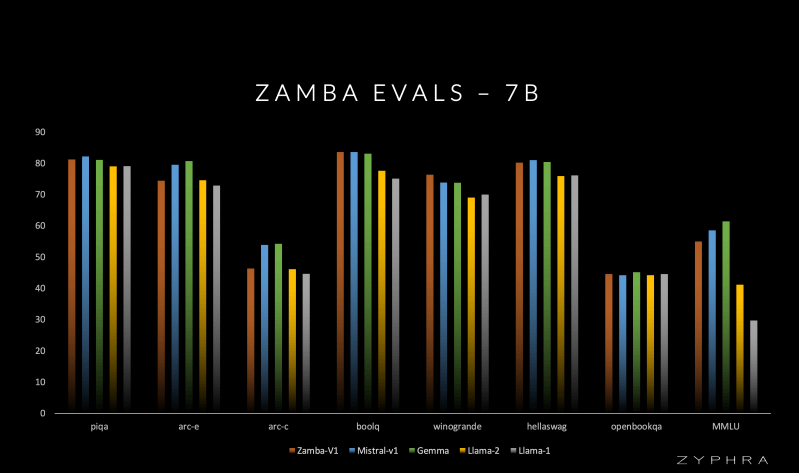

Zyphra claims that Zamba outperforms fellow open-source models LLaMA 1, LLaMA 2 7B and OLMo-7B across a wide range of standard benchmarks while using less than half the training data. Although the testing was done internally, the company says it will release the model weights so anyone can judge the results for themselves.

Asked how the team approached developing this new architecture, Millidge says its work is guided “by bias of intuition as practitioners. We have ideas about what kinds of problems models currently struggle with, and then we have intuitions about how we can solve them.”

The team also drew inspiration from neuroscience, specifically from the general scheme of how the brain works. Zamba consists of a stack of Mamba blocks (the state space component) combined with a single global memory block that the Mamba blocks read from and write to. “That way, it’s much easier for the model to share information across the sequential layer. This was… inspired by how the cortex in your brain interacts with the hippocampus, which is responsible for your long-term memory.”
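To make the “stack of SSM blocks plus one shared memory block” idea concrete, here is a toy NumPy sketch. It is not Zyphra’s implementation: the class names (`ToySSMBlock`, `SharedAttention`, `ToyHybrid`), the sizes and the `attn_every` interval are hypothetical, and the recurrence is a heavily simplified stand-in for a real Mamba block, which adds input-dependent projections, convolutions and gating. The points it illustrates are that each SSM block carries a fixed-size state regardless of sequence length, one commonly cited reason SSM-based models can be cheaper at inference, and that a single attention layer’s weights are reused at several depths, acting as the shared “global memory.”

```python
import numpy as np

rng = np.random.default_rng(0)


class ToySSMBlock:
    """Simplified stand-in for a Mamba block (assumption, not real Mamba):
    a per-channel linear recurrence whose decay depends on the input.
    The state h has a fixed size, so per-token cost does not grow with length."""

    def __init__(self, d):
        self.w_decay = rng.normal(scale=0.1, size=(d, d))
        self.b = rng.normal(scale=0.1, size=d)
        self.c = rng.normal(scale=0.1, size=d)

    def __call__(self, x):  # x: (seq_len, d)
        h = np.zeros(x.shape[1])
        out = np.empty_like(x)
        for t, x_t in enumerate(x):
            decay = 1.0 / (1.0 + np.exp(-(x_t @ self.w_decay)))  # values in (0, 1)
            h = decay * h + self.b * x_t  # update the fixed-size state
            out[t] = self.c * h
        return x + out  # residual connection


class SharedAttention:
    """One causal self-attention layer; the same weights are reused every call."""

    def __init__(self, d):
        self.wq, self.wk, self.wv = (
            rng.normal(scale=d**-0.5, size=(d, d)) for _ in range(3)
        )

    def __call__(self, x):
        n, d = x.shape
        q, k, v = x @ self.wq, x @ self.wk, x @ self.wv
        scores = q @ k.T / np.sqrt(d)
        future = np.triu(np.ones((n, n), dtype=bool), k=1)  # block future tokens
        scores = np.where(future, -np.inf, scores)
        weights = np.exp(scores - scores.max(axis=1, keepdims=True))
        weights /= weights.sum(axis=1, keepdims=True)  # row-wise softmax
        return x + weights @ v  # residual connection


class ToyHybrid:
    """Stack of SSM blocks; one shared attention layer is applied after every
    `attn_every` blocks (a hypothetical interval chosen for illustration)."""

    def __init__(self, d, n_ssm_blocks, attn_every):
        self.blocks = [ToySSMBlock(d) for _ in range(n_ssm_blocks)]
        self.shared_attn = SharedAttention(d)  # a single set of weights
        self.attn_every = attn_every

    def __call__(self, x):
        for i, block in enumerate(self.blocks, start=1):
            x = block(x)
            if i % self.attn_every == 0:
                x = self.shared_attn(x)  # every call reads the same "memory" weights
        return x


if __name__ == "__main__":
    model = ToyHybrid(d=8, n_ssm_blocks=6, attn_every=3)
    x = rng.normal(size=(10, 8))  # 10 tokens, 8 channels
    print(model(x).shape)  # (10, 8)
```

Sharing one attention layer keeps the parameter count close to that of a pure SSM stack while still giving every depth access to the same attention weights; how often the shared layer is invoked is a design knob, and the value used here is arbitrary.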

But Zyphra didn’t rely on intuition from neuroscience, machine learning and general thinking alone. A great deal of experimentation was also involved. “Your intuitions aren’t always right,” Millidge argues. “You have to… [run] some experiments and see what works, what doesn’t and then iterate from there.”

The open-source Zamba foundation model is now available on Hugging Face.