Chatbots have faced a lot of criticism in recent months. They’ve been called dumb, frustrating, useless, and a waste of time.

Of course, a lot of that criticism has been fair — there are a lot of dumb, frustrating, useless, waste-of-time chatbots out there, after all.

But there’s one piece of feedback I’ve been hearing that just doesn’t ring true for me. It’s this idea that the shortcomings of today’s chatbots stem from the fact that they’re not human enough, and that until they can achieve a human level of intelligence, they won’t be able to add any real value.

As one critic recently wrote, “The ideal chatbot solution would be so seamless that a customer wouldn’t be able to determine whether they’re talking to a human or a chatbot.”

For me, however, this solution doesn’t seem ideal at all. I already have a team of actual humans who can talk to customers. So why would I also need a chatbot that can trick customers into thinking it’s a human?

The goal of building a chatbot shouldn’t be to have it flawlessly imitate a person. The goal should be to have it do something that a person can’t do, or to have it do some annoying, repetitive task that’s better suited to a machine (such as crunching numbers or searching through databases).

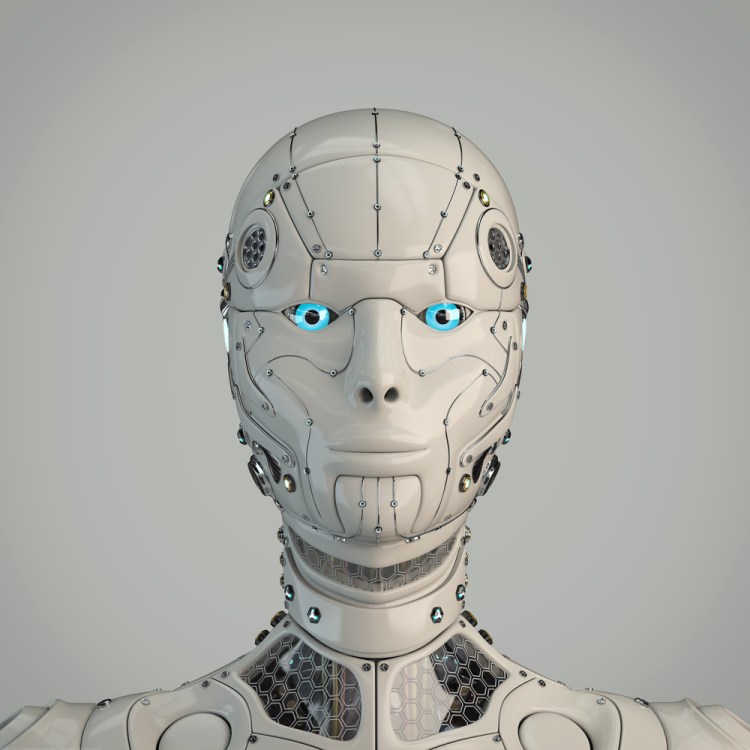

In attempting to create a chatbot that’s indistinguishable from a human, you’re inevitably going to fall into something like the “uncanny valley.” The uncanny valley is a concept in robotics/aesthetics that refers to the revulsion people feel when they see something that looks almost — but not exactly — human.

Ultimately, people like seeing some human features (e.g., two eyes, a smile) on their robots and other non-living beings. But when those features get too realistic, they’re perceived as creepy.

That same notion can be applied to chatbots. Just think about the first time you played around with a chatbot like Jabberwacky, or ALICE, or SmarterChild. There were probably a few moments when the responses seemed so human-like that you got a bit weirded out, right?

So instead of thinking about chatbots solely as a means for replicating human intelligence and human behavior, we should be thinking about them as an independent technology.

The only thing working against us is history.

Traditionally, artificial intelligence (AI) has been defined in relation to human intelligence. Just look at the first definition for AI that pops up on Google: “the theory and development of computer systems able to perform tasks that normally require human intelligence…”

Our current understanding of AI, as well as our expectations for what chatbots should be able to do, was no doubt shaped by Alan Turing’s groundbreaking paper, “Computing Machinery and Intelligence.” In that paper, Turing introduced the world to his now-famous machine intelligence test, the Turing Test, which asserts that a machine can be considered intelligent if it’s able to pass itself off as a human during a 1:1 (text-based) conversation with an actual human.

So from the earliest days of AI research, the measuring stick for AI has been our own, human intelligence.

There’s a separate camp of AI researchers out there, however, who think that the real measure of AI should be something totally different. They point to other technologies, like artificial flight, which didn’t need to imitate their counterparts in nature in order to become a reality. If the Wright brothers’ plane didn’t have to flap its wings like a bird in order to achieve flight, why should we assume that a chatbot has to be able to communicate like a human in order to achieve intelligence?

For a definition of AI that reflects this viewpoint, look no further than Amazon’s Alexa. Its definition of AI:

> The branch of computer science that deals with writing computer programs that can solve problems creatively.

See the difference? Here, AI isn’t concerned with solving problems like a human; it’s concerned with solving problems creatively. The goal isn’t to replicate a human process; it’s to figure out the best, most efficient process possible.

And that’s how I like to think of chatbot development at my company. We don’t want to build chatbots that can imitate humans; we want to build chatbots that can take actions that maximize their chances of success toward achieving particular goals. That’s how we define artificial intelligence: chatbots learning from their experiences so they get better and better at the tasks they’re performing.

At the end of the day, we don’t want our customers to think, “Wow, that chatbot is so human-like.” We want them to think, “Wow, that chatbot is so helpful and good at what it does.” If we ever want bots to reach their true potential, we need to let bots be bots.