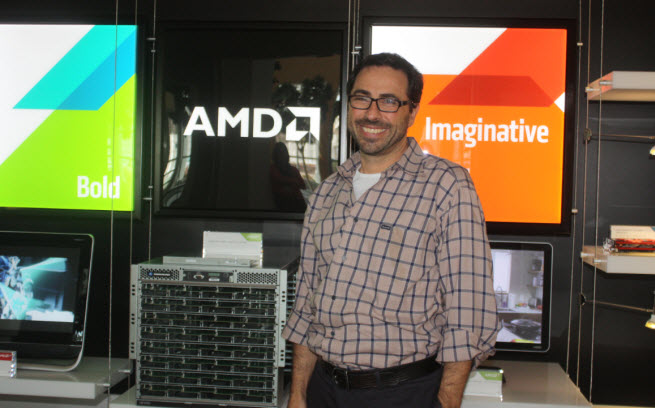

Andrew Feldman, corporate vice president and general manager of Advanced Micro Devices’ server chip business, showed off working 64-bit ARM-based server processors last week at the Open Compute Project summit in San Jose, Calif.

AMD plans to disrupt the huge x86 (Intel-based) server chip market by using smaller and more power-efficient ARM cores in AMD-designed server processors. Feldman is leading that charge, and he says the company will ship ARM-based server chips this year. He predicts that ARM server chips will be 25 percent of the data center market by 2019. If that happens, it will be a big blow to Intel.

AMD isn’t going toe to toe with Intel in designing super-fast server chips anymore. And that may be its path to riches, according to Feldman. Its chips won’t need giant air conditioning systems.

AMD’s shift is disruptive in another way. An x86 (Intel-based) processor takes three years and $300 million to develop. Creating an ARM processor can take as little as three months and $30 million. Intel, however, says that the chip that Feldman talked about has weaker performance and higher power consumption than Intel’s Atom C2000 (code-named Avoton) processors, which started shipping last September. Based on what AMD unveiled last week, Intel believes it has a 65 percent advantage in performance per watt of power consumed. Intel also notes that the ARM chips aren’t necessarily arriving on the schedule that was originally promised.

Here’s an edited transcript of our interview with Feldman. We’re sure that his words will infuriate Intel.

VentureBeat: I see the way computing architecture works now. You have a little device’s processing power. It goes to a smartphone with more processing power. Then the smartphone taps the data center, with the most processing power. So a small device taps everything.

Feldman: That was the whole insight I brought to bear in my keynote. What these devices do is display the answers that were created somewhere else. That’s one of their limitations, and their glory as well. They give you access to all the world’s knowledge. It’s search or Wikipedia or how to make a reservation at a restaurant or where your friends are hanging out. It’s not that they have that information, but they can access it.

The simple proof point is, if you turn off your radio on that device, what you’re left with is an Angry Birds machine. It’s not a very useful device anymore. It’s not even a great phone. The Blackberry was a better phone, but a less cool device. It didn’t do as good a job of giving you access, and that won the day.

That’s the irony of the smartphone. “Smart” became more important while “phone” became less. We can build much better phones, and they do. If you go to the third world, they never drop calls. My dad called me from Mongolia and I dropped the call from the 280 freeway, in the heart of Silicon Valley, on the coolest new iPhone with AT&T. It’s because the phone became less and less important. Its ability to access the data center became more and more important.

Above: Andrew Feldman of AMD

VB: What does that mean?

Feldman: One of the things I pointed out in my talk is that the data center has snuck into our daily lives without our noticing. When we send an email or take a photo of our dog or record our child playing the violin at the Suzuki Method graduation, we don’t realize the data center has become a part of our lives. That video is in the cloud, whether in iCloud or an Android service. We email it through Gmail to a grandmother far away. We tweet about it. The data center has snuck into how we interact with the world.

That’s going to happen more and more with wearables, too. It’s not that wearables are interesting per se. What’s interesting is their ability to get to the data center.

What that led us to think about is a series of questions. Can we imagine a world with less compute, with less storage? Can we imagine a world where the workloads are bigger, more like CAD/CAM, or do we see the workloads being more like Twitter? The answer, obviously, is the latter. Can we see a world where graphics are less important? Can we see a world where power and space in the data center are less important? Those produce obvious answers for us, and for you and your readers who think about this as well.

When you take the next step and use those observations to create a bounding box for your future, we ask, “What have we learned from 50 years of the history of compute?” Smaller, high-volume, lower-cost parts always win. That’s what we’ve learned. That’s how the mainframes were beaten by the minis, and the minis by the workstations, and the workstations by x86.

VB: How is this going to play out?

Feldman: Where I began in this industry, I was the first non-engineer, and I had the only PC in the building. Everyone else had Sun workstations. Now we have 6,000 engineers, maybe 5,000 engineers, and not one of them has a workstation. All of them have access to x86 and use it, because it was smaller and cheaper and higher volume.

When we think about that, we say, “ARM has those advantages on x86.” There were eight billion ARM processors shipped in 2012, compared to 13 million server parts, plus or minus. ARM amortizes its R&D over hundreds of partners. Intel amortizes its R&D over Intel. We amortize ours over us in the x86 world. The ecosystem that this has produced in handhelds and clients is remarkable.

What we’ve seen is exactly this pattern of the small nibbling their way up. Intel was sure it would win the phone, and got clobbered. Abject failure. Not once, but three times. ARM nibbled up from sensors, up into the phone, and from the phone up into the pad, and from the pad it’s working into the Chromebook. In its wake it’s laying waste to the client side. At first everybody says it’s underpowered, there’s no software, and then three years later everybody says, “Why would we buy something else?”

We see that same pattern nibbling up all the way into the server space. We’re throwing gas on that fire by announcing an eight-core ARM server part. We’ll be sampling it in a few weeks. Those are ARM A57 cores. It’s a remarkable part. It’s the only 28-nanometer ARM server part from a vendor who’s ever built a server part before. It’s hardened for servers. It uses all the IP blocks we’ve deployed in millions of servers over the years, all the RAS functionality, all the error correction, the ECC, the high-performance memory controller, all the technologies that servers have demanded. In addition, it supports a huge amount of DRAM, 128 gigs of DRAM. That’s four times what Intel’s premier single-socket part can support.

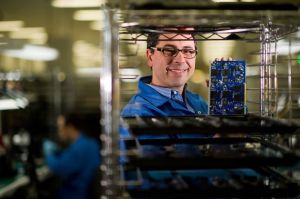

Above: Andrew Feldman of AMD with SeaMicro board

VB: And you demo’d this on stage.

Feldman: We did. In addition to the part, we’re showing an engineering evaluation board with a full software stack. It’s not just, “Here’s a piece of hardware.” It’s, “Here’s a hypervisor. Here’s an OS. Here are the full LAMP stacks. Here are dev tools. Here’s the full set, so that you as a software maker can jump in and put your software on top.” That’s one thing we showed. Huge response.

We also announced a server motherboard that fits the Open Compute Group Hug standard. It’s the first 64-bit ARM open-source motherboard. We put that whole design into the open-source community through the Open Compute Foundation. That’s a really cool board. It fits in my pocket.

It’s my belief that in 2019, ARM will be 25 percent of the server market. Every major data center will be designing their own custom or semi-custom ARM server CPUs.