Google, Pandora, and Spotify haven’t exactly advertised it, but they are all working on using a type of artificial intelligence called “deep learning” to make a better music playlist for you.

All three have recently hired deep learning experts. This branch of A.I. involves training systems called “artificial neural networks” with terabytes of information derived from images, text, and other inputs. Engineers then present the systems with new information and receive inferences about it in response. Companies such as Google and Baidu have put deep learning to work for all sorts of purposes: advertising, speech recognition, image recognition, even data center optimization. One startup intends to use deep learning to recognize patterns in medical images.

Now these companies are turning to music. A neural network for a music-streaming service could recognize patterns like chord progressions in music without needing music experts to direct machines to look for them. Then it could introduce a listener to a song, album, or artist in accord with their preferences.

Putting these complex systems into production won’t necessarily happen overnight. But look out: Once in place, deep learning could be the kind of technology that inspires listeners to stick around music-streaming services for years to come.

“It’s a really exciting area, and certainly, it’s of high interest to Pandora,” Pandora senior scientist Erik Schmidt told VentureBeat in an interview.

Send in the interns

The new wave of attention leads back to an academic paper that came out of Belgium’s Ghent University last year.

In the obscure “reservoir computing” section of the university’s electronics and information systems department, Ph.D. students Sander Dieleman and Aäron van den Oord collaborated with professor Benjamin Schrauwen to make convolutional neural networks (CNNs) pick up attributes of songs, rather than using them to observe features in images, as engineers have done for years.

The trio found that their model “produces sensible recommendations,” as they put it in the paper. What’s more, their experiments showed the system “significantly outperforming the traditional approach.”
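For readers who want a feel for what a convolutional layer does with audio, here is a minimal sketch in plain Python. It is not the network from the Ghent paper. It assumes the song has already been turned into a grid of time frames and frequency bins, and the filter weights are set by hand for illustration, where a trained network would learn them from data. The names `conv1d_over_time` and `global_max_pool` are hypothetical.

```python
def conv1d_over_time(spectrogram, kernel):
    """Slide `kernel` along the time axis of `spectrogram`.

    spectrogram: list of time frames, each a list of frequency-bin energies.
    kernel: list of frames with the same number of bins (the filter weights).
    Returns one ReLU activation per valid time position.
    """
    width = len(kernel)
    activations = []
    for start in range(len(spectrogram) - width + 1):
        total = 0.0
        for offset in range(width):
            frame = spectrogram[start + offset]
            weights = kernel[offset]
            total += sum(x * w for x, w in zip(frame, weights))
        activations.append(max(0.0, total))  # ReLU: keep only positive responses
    return activations


def global_max_pool(activations):
    """Summarize the whole clip by the filter's strongest response."""
    return max(activations)


# Toy input: 5 time frames x 2 frequency bins (made-up values).
spectrogram = [[0.0, 1.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
# Hypothetical hand-set filter that responds to energy moving from the
# second bin to the first and back again across two adjacent frames.
kernel = [[1.0, -1.0], [-1.0, 1.0]]

activations = conv1d_over_time(spectrogram, kernel)
song_feature = global_max_pool(activations)
```

The filter responds only at the one position where the pattern it encodes appears, and the pooled value becomes a single attribute of the clip. A real model would stack many such filters and layers.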

The paper captured the imagination of academics who work with music, as well as deep learning wonks. Microsoft researchers even cited the paper in a recent overview of the deep learning field.

The group’s work also hit a nerve at Spotify. Dieleman and van den Oord ended up meeting some of the company’s employees while presenting their paper at the prestigious Neural Information Processing Systems conference near Lake Tahoe in December 2013.

“They invited us to give a talk about our work at the Spotify offices, since we were already planning to stop over in New York on our way back home anyway,” Dieleman told VentureBeat in an email. “That’s how I eventually ended up there, so I suppose the paper was pretty instrumental.”

As for van den Oord, he ended up interning with Philippe Hamel, a software engineer in the music research group at Google.

Dieleman and van den Oord have since returned to university, but it’s clear the companies wanted to look closely at the method the academics devised.

A smarter breed of robot

Deep learning stands out from the recommendation systems in place at Spotify, which uses more traditional data analysis. And down the line, it could provide for improvements in key metrics.

Spotify currently recommends songs using technology from The Echo Nest, which Spotify bought this year. The Echo Nest gathers data using two systems: analysis of text on the Internet about specific music, and acoustic analysis of the songs themselves. The latter entails directing machines to listen for certain qualities in songs, like tempo, volume, and key.
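To make “directing machines to listen for certain qualities” concrete, here is a rough sketch of two hand-designed measurements. It is not The Echo Nest's actual analysis, and it assumes that audio samples and beat timestamps are already available. The function names `rms_volume` and `tempo_bpm` are hypothetical.

```python
import math


def rms_volume(samples):
    """Root-mean-square amplitude, a common stand-in for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def tempo_bpm(beat_times):
    """Estimate tempo from beat timestamps given in seconds."""
    intervals = [b - a for a, b in zip(beat_times, beat_times[1:])]
    mean_interval = sum(intervals) / len(intervals)
    return 60.0 / mean_interval


# Made-up inputs: four audio samples, and beats spaced half a second apart.
volume = rms_volume([0.5, -0.5, 0.5, -0.5])
tempo = tempo_bpm([0.0, 0.5, 1.0, 1.5])
```

Each function encodes a human decision about what matters in a song. That is the contrast with the deep learning approach, in which the system works out its own features.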

We “apply knowledge about music listening in general and music theory and try to model every step of the way musicians actually perceive first and then understand and analyze music,” said Tristan Jehan, a cofounder of The Echo Nest and now principal scientist at Spotify.

“Sander [Dieleman] is just taking a waveform and assumes we don’t know that stuff, but the machine can derive everything, more or less,” Jehan said. “That’s why it’s a very generic model, and it has a lot of potential.”

The idea is to predict what songs listeners might like, even when usage data isn’t available.

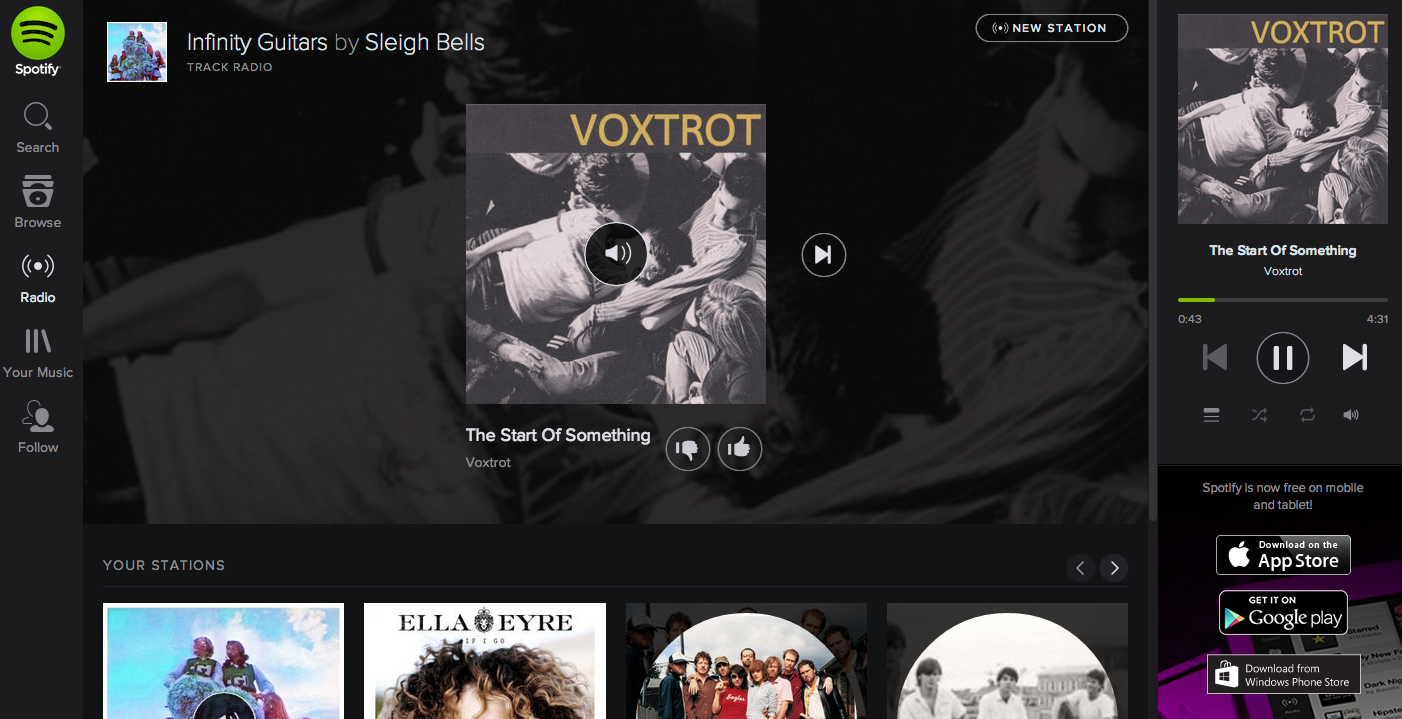

So Dieleman pointed the system at Spotify’s data set of songs, without data on which songs Spotify users like, and came up with several playlists based on song similarity. His blog post about the work generated plenty of interest; it has even prompted researchers to start using some of Dieleman’s methods in their own work.
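A playlist built on song similarity can be sketched in a few lines, assuming each song has already been reduced to a list of numbers describing its audio. The vectors below are invented, and cosine similarity is one common way to compare them, not necessarily the measure Dieleman used. The names `cosine_similarity` and `similar_songs` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def similar_songs(seed, features, k):
    """Return the k songs whose vectors are closest to the seed song's."""
    scores = [
        (cosine_similarity(features[seed], vec), title)
        for title, vec in features.items()
        if title != seed
    ]
    scores.sort(reverse=True)  # highest similarity first
    return [title for _, title in scores[:k]]


# Invented audio-derived feature vectors; no listening data is involved.
features = {
    "song_a": [1.0, 0.0, 0.2],
    "song_b": [0.9, 0.1, 0.3],
    "song_c": [0.0, 1.0, 0.0],
    "song_d": [0.1, 0.9, 0.1],
}
playlist = similar_songs("song_a", features, k=2)
```

Nothing here depends on who has played what, so a brand-new track with no listeners can be placed next to its nearest neighbors.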

The implementation isn’t a perfect substitute for the current approach at Spotify. But Jehan believes it’s worth pursuing.

Head-bobbing robots everywhere

The interest goes beyond Spotify. Even Pandora, known for its use of humans in its process of finding songs to play, has been exploring the technique.

Pandora’s musicologists identify attributes in songs based on their knowledge of music. The end product is data that they can feed into complex algorithms — but it fundamentally depends on human beings. And people like Schmidt think that’s actually a great thing.

“I think to some degree that there’s always some superiority to the human ear,” Schmidt said. “We can get absolute ground truth by having musicologists label these things. There’s a level of accuracy, generally, that’s absolute when you have humans, and it’s something that we can really lean on.”

The human-generated data feeds into a system befitting a company with a $3.86 billion market cap. Pandora’s data centers retain an arsenal of more than 50 recommendation systems. “No one of these approaches is optimal for every station of every user of Pandora,” Schmidt said.

Proving a recommendation system’s advantages over those that have already had successful runs in production can be a challenge.

“If we move to something like deep learning, what does that deliver in terms of improving performance?” Schmidt said. “What we’ve seen is, in some cases, it does, but in many cases, we’ve been tremendously successful with simpler models.”

Meanwhile, deep learning has come in handy for a wide variety of purposes at Google, and employees certainly are investigating its applications in a music-streaming context. Doug Eck, a staff research scientist focusing on music technology at Google, declined to say whether deep learning is in place for a product like Google Play Music.

Still, he said he believes “passionately that deep learning represents a complete revolution, literally a revolution, in how machine learning is done.”

The trouble is, deep learning on its own might do a good job of detecting similarities among songs, but maximizing outcomes might mean drawing on several kinds of data other than the raw content. Think upcoming events, or record labels — data that Google already has readily available in the Freebase database.

“What we see from these deep-learning models, including the best of best we’ve seen — very similar to what Aaron and Sander did — is there’s still a lot of noise out there in these models,” Eck said. Weeding out peculiar song recommendations becomes more important.

And so deep learning might not be a drop-in replacement for the recommendation systems that music-streaming services already use. It can be another tool, and perhaps not only for determining which song to play next. Its capabilities could go beyond that.

“What I do see is that deep learning is allowing us to better understand music and allow us to actually better understand what music is,” Eck said. And that’s especially true when you consider its ability to analyze many seconds of music.

From there, Eck said, Google could take what it can learn about music and “build better products, like a better streaming service.”

But even at Google, computational methods must be judged against the measurements that matter most, like how much music users listen to. And improving the experience enough that they listen more often, and for longer, isn’t easy.

“This is a great challenge,” Eck said.

The result: You, too, become a music snob

Above all else, one thing deep learning could be useful for is exposing people to new music, or to music that is currently unpopular but which they might like if they only heard it.

Fundamentally, especially for those systems that depend on people to analyze music, introducing listeners to new music remains a challenge. Pandora, for instance, has had the luxury of time to enter more genres and accumulate rich data on each, but there is always new music, and thus there is always music that Pandora hasn’t accounted for.

Pandora needs “to figure out and find relevant tracks and be able to deliver them from the first spin,” Schmidt said. Which is why the company is experimenting with machine listening, including what Schmidt called “deep listening.”

And it’s not just about making things better for music fans. Consider the artists: Deep learning might just be able to help them, too.

“I think one of the struggles business-wide all music services have is all indie artists complain they are not paid and so on,” said Alexandre Passant, the cofounder and chief executive of music-discovery startup Seevl. “If you can find a way to surface new artists in algorithms, that’s a win-win solution for everyone.”

So deep learning is one of those technologies that several companies could adopt in the coming years to improve music streaming.

“In the future, we want to be ahead of everybody else when that stuff is ready, but I think it’s getting there, slowly,” Jehan said. “So we’re going to keep working on that stuff, for sure. It’s going to be tested, and it’s going to be incorporated as soon as results are good.”