
In Microsoft’s vision of the future, Kinect sensors are everywhere: In your living room, your kitchen, at school, and even in the supermarket, above the fruit display.

And why not? The $150 motion-sensing device provides a cheap way to add gesture and voice controls to any application. Plus it’s got a camera and two 3D depth sensors that give computers a tool to map out spaces in three dimensions, recognize people by their faces, identify real-world objects, and create 3D models of those objects.

I spent a day at Microsoft’s Redmond campus this week attending TechForum, a small gathering of about a dozen journalists hosted by Microsoft’s chief research and strategy officer, Craig Mundie. Part strategy briefing, part new-product showcase, part science fair, TechForum was a chance for us writers to see an array of recent and upcoming technologies that Microsoft’s been working on, both in its commercial products and in its pure research labs.

Kinect sensors weren’t the day’s primary theme, but it was fascinating to see how many contexts the flat, three-eyed black bar kept popping up in.

- Kinect sensors are built into the gestural controls in the futuristic demonstration home on Microsoft’s campus. In the living room and entertainment room, large-screen TVs use Xbox-like gestural and voice-command interfaces to let you select music and videos as well as control your home environment.

- Whole Foods, together with development house Chaotic Minds, showed off a robotic shopping cart that uses a Kinect sensor (mounted above the cart’s handlebars) to sense where you are so it can roll along the aisles following you. The Kinect could also be used to identify items you place in the cart, although for the demo we saw, the system used RFID instead.

- Nissan is planning a Kinect-powered interactive app to show off its new 2013 Pathfinder at the upcoming New York Auto Show, and maybe in dealer showrooms after that. Development company Identity Mind is building the app, which lets you view the Pathfinder from different angles by moving your body; a Kinect sensor identifies where your body is and adjusts the view accordingly.

- Interactive television shows for children are in the works, including a new Sesame Street series and a show called National Geographic Wild. These shows will use Kinect sensors to let children answer quizzes and play games by moving their bodies.

- Engineers in Stevie Bathiche’s Applied Sciences Group have used a Kinect sensor to create a 3D display connected to a stereo camera that moves left and right as you move your head left and right, so the perspective shifts just as it does in the real world.

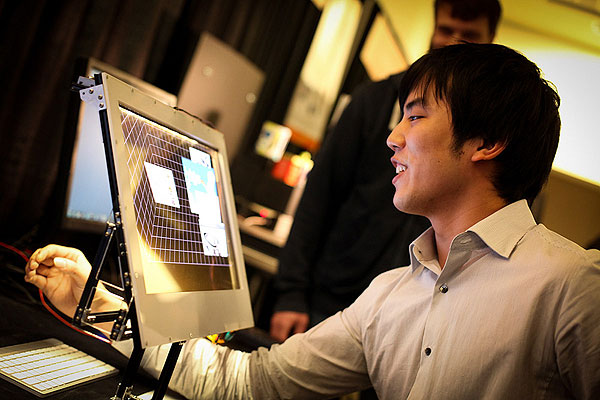

- Other Microsoft researchers have built a demo called “Behind the Screen” that uses Kinect sensors and transparent LCD displays to give you a sort of window for looking at 3D objects that you can manipulate with your hands. You put your hands behind the transparent screen, and a Kinect sensor pointed down at your hands detects where they are and what you’re doing with them. The system projects 3D objects onto the screen, superimposed on your view of your hands, so it looks like you’re interacting with the digital objects. Another Kinect sensor pointed at you adjusts the view to the position of your head, so the perspective always appears correct.

- Andy Wilson’s research group has created a sort of magic mirror called the “Holoflector,” which puts a half-silvered mirror three feet in front of a large LCD screen. If you stand three feet in front of the mirror, a Kinect sensor picks up your body position and can use that to display images on the screen behind the mirror. Because the screen is exactly as far from the mirror as you are, its images look like they’re coexisting with you in the three-dimensional world. That lets the system create some interesting interactive effects, such as turning you into a pixelated mannequin, displaying a floating “hologram” above your outstretched palm, or raining little bouncy balls all over you.

- Finally, Microsoft created a demonstration grocery display for TechForum that uses a Kinect hidden above your head, pointed down at a rack of fancy fruit. There’s also an LCD screen behind a half-silvered mirror that sits above the fruit rack. When you pick up a piece of fruit and hold it in front of the mirror, the Kinect recognizes what you’re holding. The display then wakes up and superimposes images on the reflection, showing you (for instance) what the fruit is, where it comes from, and what kinds of recipes use it. Naturally, you can use gestures to navigate through the interface, swiping left and right to see different information cards.
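The Holoflector's trick of matching the screen distance to your own distance comes down to simple mirror geometry, and a few lines of code make it concrete. What follows is a minimal sketch, not Microsoft's implementation: the coordinate layout, the function name `screen_point`, and the sample positions are all assumptions made for illustration. The mirror sits at z = 0, you stand at positive z (in feet), and the screen sits `screen_dist` feet behind the mirror.

```python
def screen_point(eye, target, screen_dist):
    """Where on the screen to draw an overlay so that, seen from `eye`,
    it lines up with the mirror reflection of `target`.

    Hypothetical layout (an assumption, not from Microsoft):
    the mirror is the plane z = 0, the viewer stands at z > 0,
    and the screen is the plane z = -screen_dist.
    """
    ex, ey, ez = eye
    tx, ty, tz = target
    if ez <= 0 or tz <= 0:
        raise ValueError("eye and target must be in front of the mirror")
    # A flat mirror places the reflection of (tx, ty, tz) at (tx, ty, -tz).
    # Follow the line of sight from the eye to that reflection and stop
    # where it crosses the screen plane.
    t = (ez + screen_dist) / (ez + tz)
    return (ex + t * (tx - ex), ey + t * (ty - ey))


# Palm held 3 feet from the mirror, screen 3 feet behind the mirror.
palm = (0.5, 4.0, 3.0)
centered = screen_point((0.0, 5.5, 3.0), palm, 3.0)
leaning = screen_point((1.0, 5.0, 3.0), palm, 3.0)

# Same palm, but only 2 feet from the mirror.
close_palm = (0.5, 4.0, 2.0)
close_centered = screen_point((0.0, 5.5, 3.0), close_palm, 3.0)
close_leaning = screen_point((1.0, 5.0, 3.0), close_palm, 3.0)
```

When the palm and the screen are the same distance from the mirror, the reflection of the palm lies exactly on the screen plane, so `centered` and `leaning` come out the same: the overlay stays glued to your reflection however you move your head. At any other distance, the two results differ and the system would have to track your head to keep the images aligned.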

Microsoft is not alone in embracing the Kinect as a platform for interface innovation. Almost as soon as the Xbox accessory launched, hackers and programmers started seeing what they could do with the device, such as building robotics platforms with 3D vision. Microsoft not only didn’t mind the hacking, it encouraged it. To facilitate that work, Microsoft last month released Kinect for Windows, a development kit made expressly for experimentation. Students can buy it for $150; if you want to incorporate it into a commercial product, it costs $250.

Microsoft now says that more than 300 companies are working on products that will use the Kinect, including the Whole Foods and Nissan projects I mentioned above.

Last year, Mundie said, about 90 percent of the Kinect projects by outside developers were based on the gestural interface. This year, an increasing proportion are using its equally impressive voice interface.

Even without delving into hard-core coding, Microsoft sees a way that Kinect can be useful for more than just playing games. The company showed a video of the Lakeside Center for Autism, a Seattle-area organization, which uses Kinect games to help autistic children learn to interact with the world and with each other. Apparently, the gestural interface is much more direct and intuitive for these children than traditional computer interfaces, and draws them out of their “shells” more effectively than traditional forms of teaching and human training. (Autism researchers have discovered similar advantages to using Apple iPads, whose touchscreen interface also seems appealing and engaging to children who otherwise have difficulty communicating.)

It’s not hard to see why the Kinect, which provides cheap 3D sensors that just two years ago would have cost thousands of dollars, is such an appealing platform for Microsoft’s innovators. The company is smart to make it so accessible to outside developers, and so cheap. For that reason, you can expect to see (and be using) a Kinect in the next year, regardless of whether you own one.

Are you building Kinect applications? Let me know about your projects, and send me links to short videos. I’ll include the best examples in a future story.
