Eyefluence has an eye-popping technology straight out of science fiction. The Silicon Valley company is announcing it has raised $14 million for its eye-tracking technology that lets you use the gaze of your eyes to control augmented reality and virtual reality devices.

The company hopes to position itself as an essential user-interface tool for controlling AR glasses and VR headsets, which are expected to be a $150 billion industry by 2020, according to tech advisor Digi-Capital. Right now, it has prototypes that it hopes to license to some very large companies that could build products around the technology.

The funding round was led by Motorola Solutions Venture Capital. Other investors include Jazz Venture Partners, NHN Investment, and Dolby Family Ventures.

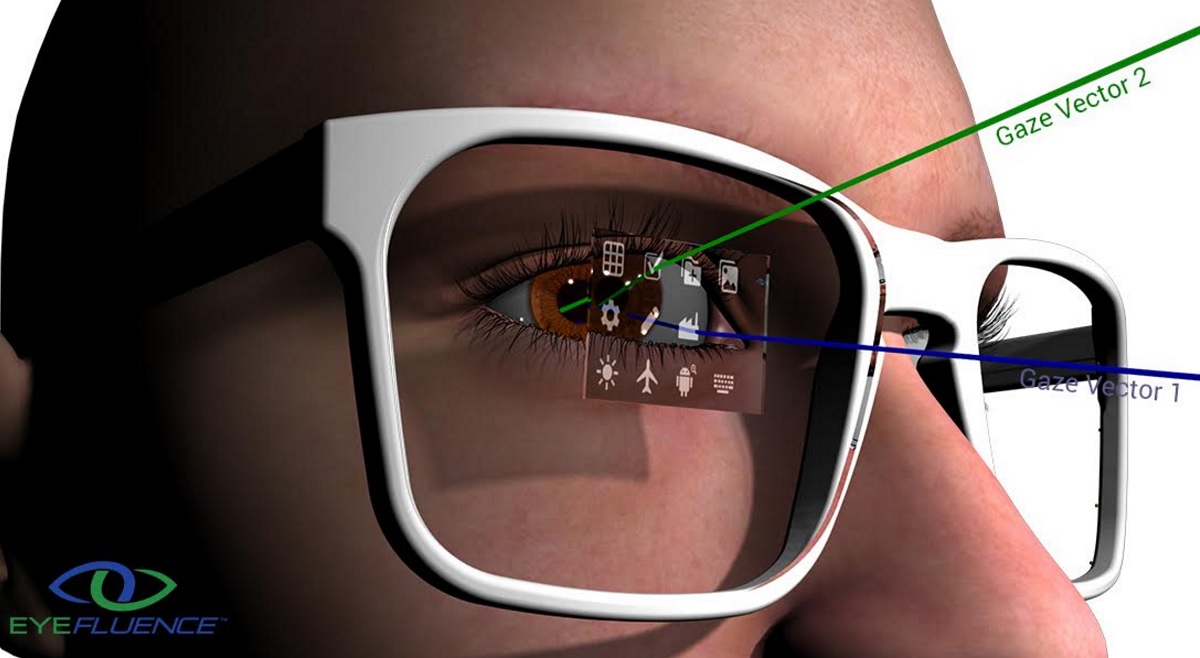

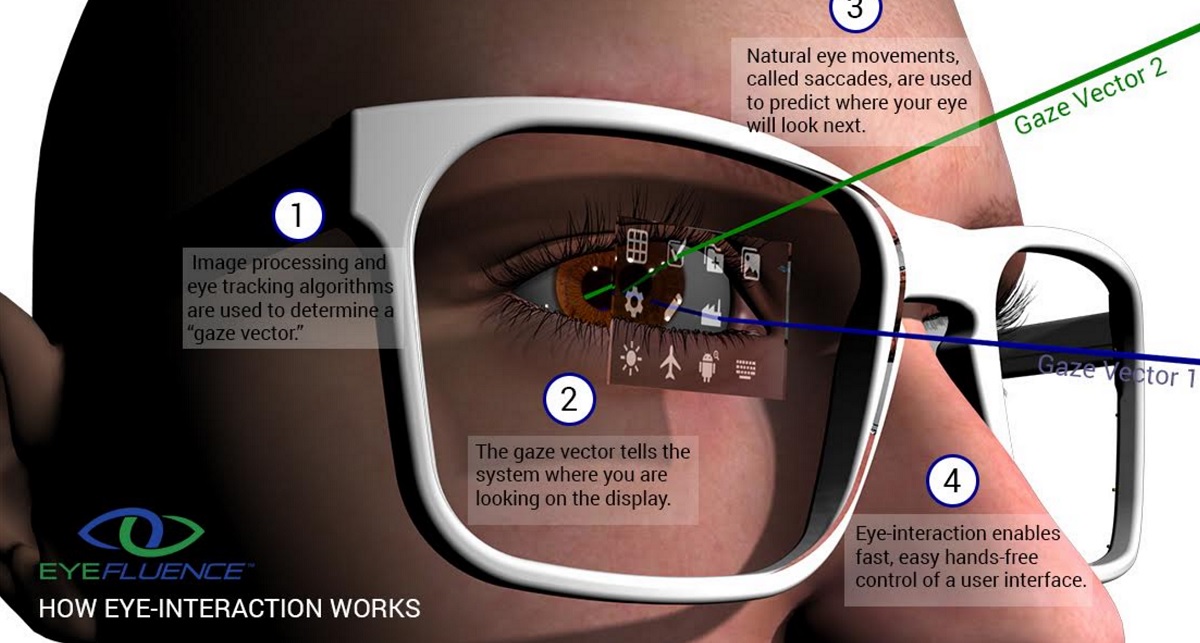

The technology comes from researchers and serial entrepreneur Jim Margraff, who created Livescribe, the smart pen company that was recently acquired by Anoto. But in contrast to other eye-tracking technologies that have recently been announced, Eyefluence has taken a deep dive into figuring out how the eye works and how we can read intent in where the eye is focused.

“We can do anything with your eye that you can do with fingers on a smartphone or tablet,” Margraff said in an interview with VentureBeat at the recent Intel Capital Global Summit. “It’s all about gauging your intent and what you want to control with your eye.”

Other technologies are limited to making you gaze at an object for a long time or winking for a while. But Eyefluence is trying to figure out what you intend to do — based on the behavior of your eyes — and then make that thing happen.

Eyefluence’s technology goes back to the 1990s. Researchers were trying to create ways to control devices with the eyes to benefit police and firefighters, but the effort didn’t really get off the ground. Margraff met them in 2013 and acquired the rights to the technology. Then he started Eyefluence as a new company with cofounder Dave Stiehr.

“I looked at the history of eye-tracking, some of it that had been in the works for decades,” Margraff said. “You either look at something and wait, or dwell. Or you blink. It’s good for people with disabilities, but for anyone else, it wasn’t practical.”

He added, “So we did a deep dive, looking at the biomechanics of the eye. We said there has to be a way to combine the biology of the eye with technology for interaction. That was the premise. The benchmark is that you should be able to do anything with your eyes that you can do with your hands. But faster. We transfer intent into action through your eyes.”

Rivals include Tobii, which has worked with SteelSeries to create a game-control product. Sony’s PlayStation Magic Lab has also shown off eye-tracking controls for its games.

Using the eyes to control something is very difficult, as you can’t do anything that fatigues the eyes. You also have to re-acquire the location of the eyes if someone looks away from a computer or a screen.

Margraff’s team decided to focus on wearable devices. They had to filter out false signals, such as when you’re blinking or looking away because your eyes are watering.

“You can’t do something unnatural,” he said. “Our method is completely natural. It’s a new language, as natural as a keyboard and mouse are to your fingers. It just works, and at times it feels like it is reading your mind.”

The problem is especially acute in virtual reality, where you can’t see your hands, and augmented reality, where you don’t really have another kind of control system, except maybe tapping the side of your glasses with a finger.

The company plans to license a suite of its eye-tracking algorithms and vision-driven user interface interaction model.

“We think this is the real deal,” said John Spinale, general partner at investor Jazz Venture Partners. “I’ve seen it and it works.”

Motorola Solutions is bullish on integrating eye-interaction into its smart public safety applications.

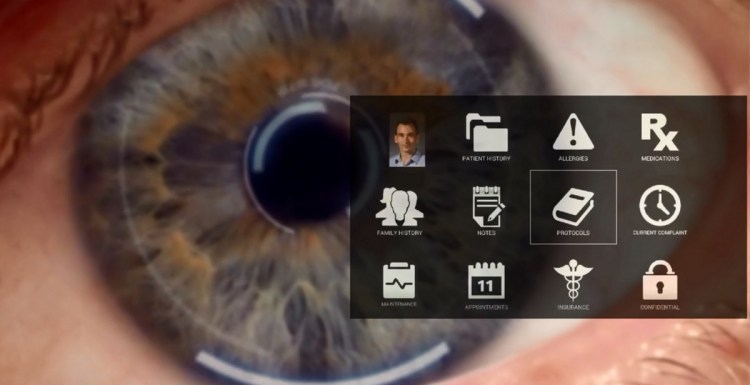

“Imagine if police officers could get information on an unfolding crime scene without visibly moving a muscle,” said Paul Steinberg, chief technology officer, Motorola Solutions. “Instead, they would use only the motion of their eyes behind sunglasses, leaving their heads up and their hands free to manage the scene and take quick action. Eyefluence is part of Motorola Solutions’ increasing investment in smart public safety solutions that power safer cities through innovative technologies.”

Eyefluence hopes that its technology can be used in AR and VR devices in healthcare, oil and gas, financial services, entertainment, assistive technology, defense, and other industries. Margraff said Eyefluence is talking with Fortune 500 companies about partnerships.

Intel Capital led a first-round investment in 2013 as a strategic investor. Eyefluence has about 30 granted or pending patents.
