
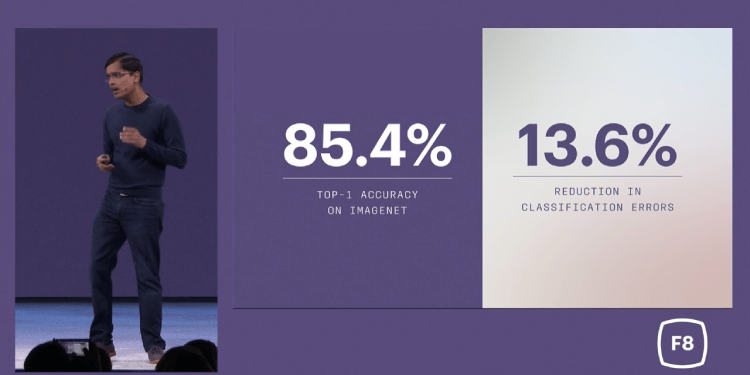

Facebook today revealed that, by training on 3.5 billion publicly shared Instagram photos and their accompanying hashtags, its computer vision system achieved 85.4 percent accuracy on ImageNet, a well-known benchmark dataset. The AI model is now the world’s best image recognition system, said Facebook director of applied machine learning Srinivas Narayanan.

The results were shared onstage at F8, Facebook’s annual developer conference, taking place today at McEnery Convention Center in San Jose, California. Other news announced at F8 this year includes the release of Oculus Go, new Facebook Stories sharing capabilities, and the reopening of app and bot reviews following the Cambridge Analytica scandal.

The research means Facebook’s computer vision can recognize more specific subcategories in real-world images: instead of just “food,” it can identify Indian or Italian cuisine; instead of just “bird,” a cedar waxwing; instead of just “man in a white suit,” a clown. Improvements to Facebook’s computer vision could make for a better experience in everything from sharing old memories to News Feed rankings, Manohar Paluri, Facebook computer vision research lead in the applied machine learning division, told VentureBeat.

“You can put it into descriptions or captioning for blind people, or visual search, or enforcing platform policies,” he said. “All of these actually can now do a much better job in individual tasks because we have representations that are richer, that are better, and that understand the world in a lot more detail than before.”

Most of Facebook’s computer vision advancements have been achieved through supervised learning, Paluri said, in which people label the data fed to neural networks by hand. Today’s advances, however, were achieved with weakly supervised learning, which trains on noisy, imperfect labels (in this case, the hashtags people attach to their own photos) rather than carefully curated human annotations.

To work out which hashtags correspond to real-world concepts, the team cross-referenced them with WordNet, a popular database of English words.

“Each word in the WordNet ontology is rooted to a real-world object or real world thing, so we take the intersection of these hashtags with WordNet ontology and fix the parts of the trees that intersect and only use those hashtags for training and the data associated for these hashtags for training, and this is where we were able to actually learn more compelling representations,” Paluri said.
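The filtering step Paluri describes can be illustrated with a minimal Python sketch. It assumes a tiny hypothetical stand-in for the WordNet vocabulary (`WORDNET_LEMMAS`) and hypothetical helper names; Facebook’s actual pipeline is not public in this article, and the sketch covers only the intersection itself, not the handling of the ontology’s tree structure.

```python
# Hypothetical stand-in for WordNet noun lemmas; the real database is far larger.
WORDNET_LEMMAS = ["cedar_waxwing", "clown", "eiffel_tower", "pizza", "dog", "tulip"]

# Hashtags cannot contain underscores or spaces, so index lemmas by their
# concatenated form (e.g. "cedarwaxwing" -> "cedar_waxwing").
LOOKUP = {lemma.replace("_", ""): lemma for lemma in WORDNET_LEMMAS}


def normalize(tag):
    """Lowercase a hashtag and strip its leading '#'."""
    return tag.lstrip("#").lower()


def filter_hashtags(tags, lookup=LOOKUP):
    """Keep only hashtags that intersect the vocabulary, as sorted lemma names."""
    return sorted({lookup[normalize(t)] for t in tags if normalize(t) in lookup})


def build_training_set(posts, lookup=LOOKUP):
    """Map each image id to its surviving labels; drop images with none."""
    kept = {}
    for image_id, tags in posts.items():
        labels = filter_hashtags(tags, lookup)
        if labels:
            kept[image_id] = labels
    return kept


# Hypothetical example posts: image id -> hashtags.
posts = {
    "img1": ["#CedarWaxwing", "#nofilter"],
    "img2": ["#tbt", "#love"],
    "img3": ["#EiffelTower", "#Paris", "#dog"],
}
print(build_training_set(posts))
```

Hashtags with no concrete referent, such as `#tbt` or `#love`, fall out of the intersection, so an image tagged only with them contributes nothing to training.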

Facebook is using weakly supervised learning because it requires far less human annotation than supervised learning.

Weakly supervised learning was also chosen because it can tolerate noisy labels, and hashtags are very noisy data. People routinely do things like mislabel their dog’s breed or hashtag the Eiffel Tower because they’re near it, even if the tower doesn’t appear in their photo.
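One way to picture training on such noisy labels is this minimal Python sketch, which turns an image’s filtered hashtags into a target distribution and scores a model’s outputs against it. It assumes one common choice: probability mass split evenly (1/k) across an image’s k hashtags, with softmax cross-entropy as the loss. The article does not say which loss Facebook used, and the class list and function names are hypothetical.

```python
import math


def hashtag_targets(labels, classes):
    """Spread probability mass uniformly (1/k) over an image's k known hashtags."""
    index = {c: i for i, c in enumerate(classes)}
    # Deduplicate while preserving order, and ignore labels outside the class list.
    known = [c for c in dict.fromkeys(labels) if c in index]
    target = [0.0] * len(classes)
    for label in known:
        target[index[label]] = 1.0 / len(known)
    return target


def cross_entropy(target, logits):
    """Softmax cross-entropy between a target distribution and raw model scores."""
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return -sum(t * (x - log_z) for t, x in zip(target, logits))


# Hypothetical class list and an image tagged with two hashtags.
classes = ["cedar_waxwing", "clown", "dog", "eiffel_tower"]
target = hashtag_targets(["dog", "eiffel_tower"], classes)
print(target)
print(cross_entropy(target, [0.0, 0.0, 2.0, 1.0]))
```

A wrong hashtag (say, `eiffel_tower` on a photo with no tower in it) still receives half of the target mass here. The sketch does nothing to detect that error; the bet behind weak supervision is that, across billions of images, correct labels outweigh such mistakes.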

No specific verticals were tackled during this research, though its learnings will be used to improve Facebook’s recognition of things like birds, flowers, and pets. The AI model can recognize images from 20,000 categories, and Facebook wants to expand to 100,000 categories in the future.

“Food is another place where we’re actually looking to improve significantly and going for that vertical. We haven’t listed out all the rest, but those are the things that come to our mind as immediate next steps,” he said.

Follow-up research may explore how similar techniques can continue to address challenges encountered when working with multi-label datasets or weakly supervised learning for video, Paluri said.