For the past year, Facebook has been leading a charge to open-source the world of servers and data centers, with the end goal being the cleanest, most energy-efficient Internet we humans can dream up.

And for the past year, we and many others have wondered why Google isn’t participating in this charge, or leading a similar one of its own.

Why, we wondered, was Google refusing to participate in Open Compute, the Facebook-led coalition of major tech companies that have open-sourced their hardware for the greater good (and, it must be added, for some pretty big PR and recruiting brownie points, as well)? It seemed like an obvious fit with Google’s “don’t be evil” credo.

AI Weekly

The must-read newsletter for AI and Big Data industry written by Khari Johnson, Kyle Wiggers, and Seth Colaner.

Included with VentureBeat Insider and VentureBeat VIP memberships.

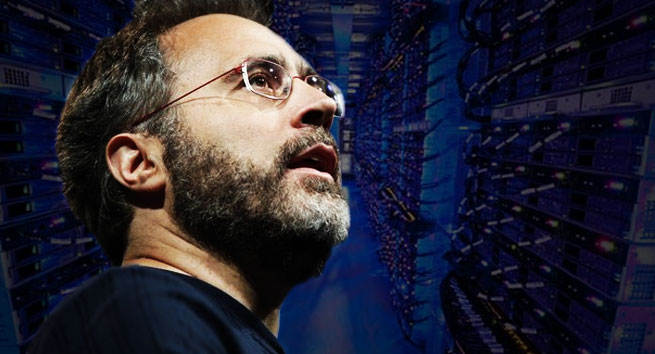

At Google I/O last month, we finally got an answer to this persistent question. We had some time for a long chat with Urs Hölzle, one of Google’s first 10 employees and the current chief of all things infrastructure-related at the sprawling software giant. The first part of that talk centered on Google’s latest cloud offerings, but our talk soon drifted toward hardware, data centers, and the awkward subject of Open Compute.

“Open Compute is a little bit tricky,” Hölzle began, stating the obvious. “If you can figure out how to make things work at scale and at good cost, that’s a competitive advantage.” Of Google’s own infrastructure, he said, “Thousands of years of engineering work has gone into the system to make it work.”

In other words, Google’s data centers are part of its long-cultivated goldmine, and it’s not letting Facebook peek inside. Google actually started harping on the concept of data center efficiency years ago. It joined up with Intel (now an Open Compute partner) and a few others in the Climate Savers Computing Initiative in 2007, and it held a summit on the subject in 2009 and again in 2011.

We asked a question Facebook execs had brought up earlier: Couldn’t Google simply share parts of its secret sauce without giving away the whole recipe?

“This isn’t an all or nothing approach,” said Facebook engineering VP Mike Schroepfer in a meeting at his company’s Menlo Park campus. Notably, even Facebook’s “open-source” data center in Prineville, Ore., still houses servers with proprietary configurations and hardware. But Facebook is quite open about the other half of the hardware it uses.

“It’s disappointing when people don’t participate when they can selectively share with the community,” Schroepfer said pointedly.

Hölzle’s response is somewhere between cynical and stone-cold realistic, acknowledging that the competition between these two titans is growing more intense all the time, at every level and with every product.

“In an ideal world, I would love to do that,” said Hölzle of Facebook’s piecemeal approach to open-sourcing its own servers. “We’ve selectively tried to share as much as possible. We have tons of contributions to Linux and open source [software] projects… But hardware is more difficult.”

Hölzle said there are two main reasons Google can’t or won’t open-source its server hardware. The first is that relatively little of each design persists from one hardware generation to the next; he describes the designs as ephemeral rather than iterative.

“The other thing is, the problems we’ve solved, very few other people need to solve those problems,” he said. “[The average company is] not going to experience the problems that we’ve solved. The people who will are going to be the Microsofts, the Amazons, and it’s not to our advantage to share that information with them.”

However, other companies could make the same argument. Rackspace, for example, lives and dies by its data centers, their cost-efficiency, and their uptime. Yet it has still chosen to participate in Open Compute. The same goes for Salesforce and Alibaba, also Open Compute partners.

But the Googler maintains his position: From a business perspective, it just doesn’t make sense. “There is conflict. If someone like Rackspace contributed their best ideas, then their competitor could use that idea and replicate it,” he said.

But isn’t that the point? To share ideas, collaborate on designs, and ultimately create the perfect spec for the perfectly efficient server — one that everyone could use and that would give enormous environmental benefit to a carbon-overloaded planet?

Ultimately, the key word in Hölzle’s statement is competitor. Facebook pitted itself against Google the day its ad sales started challenging those of the search Goliath. The struggle escalated as we users started spending more and more time on Facebook — more time than we spend in Gmail or Google web search.

In the end, as “evil” as Google’s non-participation in Open Compute might seem from the outside, any expectation that it would join in is naïve at best.

VentureBeat's mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.