Google today is announcing that it has open-sourced Show and Tell, a model for automatically generating captions for images.

Google first published a paper on the model in 2014 and released an update in 2015 to document a newer and more accurate version of the model. Google has improved the technology even more since then, and that’s what’s becoming available today on GitHub under an open-source Apache license, as part of Google’s TensorFlow deep learning framework.

Google is also posting a research paper on its latest findings, along with a corresponding blog post.

One advantage of this new system is that people can train it more quickly than older systems, specifically the DistBelief system Google previously used for generating image captions. “The TensorFlow implementation released today achieves the same level of accuracy with significantly faster performance: time per training step is just 0.7 seconds in TensorFlow compared to 3 seconds in DistBelief on an Nvidia K20 GPU, meaning that total training time is just 25 percent of the time previously required,” Chris Shallue, a software engineer on the Google Brain team, wrote in the blog post. (But if you’re training the model with one GPU-backed machine, you will still have to wait one or two weeks, and getting “peak performance” could take “several more weeks,” according to the information in the GitHub repo.)

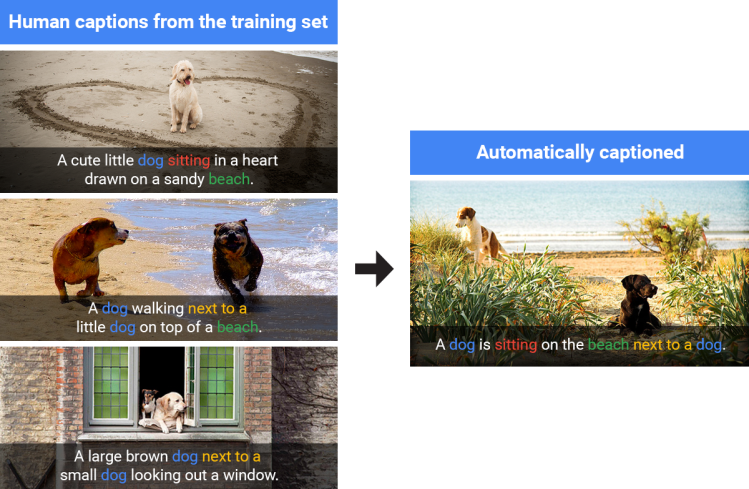

Google trains Show and Tell by letting it take a look at images and captions that people wrote for those images. Sometimes, if the model thinks it sees something going on in a new image that’s exactly like a previous image it has seen, it falls back on the caption for the caption for that previous image. But at other times, Show and Tell is able to come up with original captions. “Moreover,” Shallue wrote, “it learns how to express that knowledge in natural-sounding English phrases despite receiving no additional language training other than reading the human captions.”

Google is hardly the only company looking to AI for writing captions for images. There’s plenty of competition in that field, which goes beyond simply recognizing objects in images. (Think of how Google Photos can pull up your photos containing streets if you type “street” into the search box.) Automated caption generation can be useful for things like telling people what’s happening in photos that people post on Facebook — which Facebook has been working on.

There are other open-source tools for generating image captions, like Andrej Karpathy’s NeuralTalk2, but this is Google’s latest and greatest, and now it’s available for you to inspect or try out for free.

VentureBeat's mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact. Learn More