In the past couple of years, Google has been trying to improve more and more of its services with artificial intelligence. Google also happens to own a quantum computer — a system capable of performing certain computations faster than classical computers.

It would be reasonable to think that Google would try running AI workloads on the quantum computer it got from startup D-Wave, which is kept at NASA’s Ames Research Center in Mountain View, California, right near Google headquarters.

Google is keen on advancing its capabilities in a type of AI called deep learning, which involves training artificial neural networks on a large supply of data and then getting them to make inferences about new data.

But at an event at Google headquarters last week, a Google researcher explained that the quantum computing infrastructure just isn’t the best fit for systems such as convolutional neural networks or recurrent neural networks.

Several other tech companies — including Facebook, Microsoft, and Baidu — have been experimenting with deep learning in the context of image recognition, natural language processing, and speech recognition. Those other companies are large, with plenty of money to spend on infrastructure. But they don’t have a quantum computer. Google does. Still, that doesn’t mean it’s always the best tool for the job.

If one report from last week is correct, Google may be more interested in using the D-Wave machine to improve core Google processes like search ranking, the placement of advertisements, and spam filtering. (Google may also be preparing to talk more about its quantum work; the company plans to hold an event on the subject on December 8, according to a report today from 9to5Google.)

Google product applications of deep learning #machinelearning pic.twitter.com/LoZzsi5lPf

— Greg Sterling (@gsterling) November 3, 2015

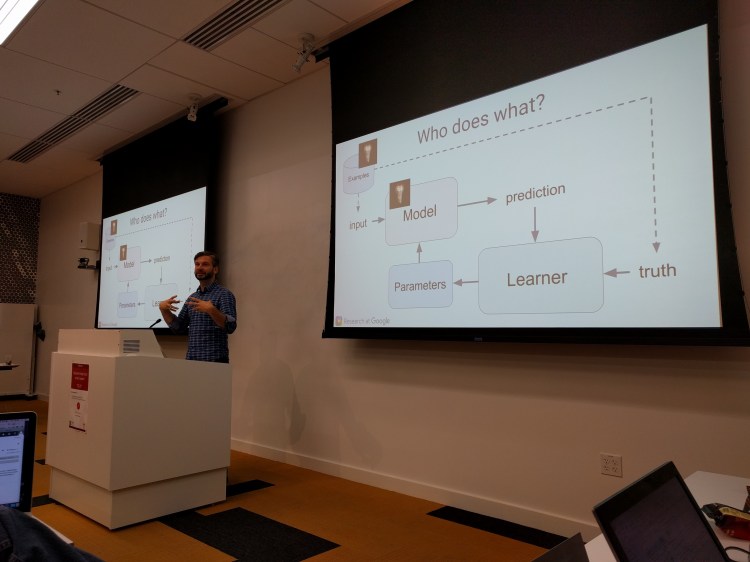

Deep learning, though, is a whole other thing. Generally speaking, it requires a model and a set of values for parameters, and you can’t make a prediction until you have both of those things, Greg Corrado, a senior research scientist at Google Research, told reporters at last week’s event.

“The number of parameters a quantum computer can hold, and the number of operations it can hold, are very small,” Corrado said.

He explained that recognizing a cat in a photo, for example, might require millions of parameters. It would involve taking “billions upon billions upon billions of steps” to make out the cat’s granular characteristics, such as its whiskers, and then eventually identify the subject of the photo at a higher level.
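To get a rough feel for the scale Corrado describes, here is a back-of-the-envelope sketch in Python. The layer sizes, image count, and number of passes are hypothetical values chosen for illustration, not figures from Google, and the tally covers only the multiply-add operations of a simple fully connected network's forward pass.

```python
# Hypothetical sizes for a small image classifier; none of these come from Google.
def dense_layer_cost(n_inputs, n_outputs):
    """Return (parameters, multiply-adds per example) for one fully connected layer."""
    params = n_inputs * n_outputs + n_outputs  # weights plus biases
    mult_adds = n_inputs * n_outputs           # one multiply-add per weight
    return params, mult_adds

# A flattened 64x64 RGB image, two hidden layers, and a 2-way output (cat / not cat).
layer_sizes = [64 * 64 * 3, 512, 128, 2]

total_params = 0
total_mult_adds = 0
for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
    params, mult_adds = dense_layer_cost(n_in, n_out)
    total_params += params
    total_mult_adds += mult_adds

# Training repeats the forward pass over many images and many passes (assumed values).
images = 1_000_000
epochs = 10
training_forward_steps = total_mult_adds * images * epochs

print(f"parameters:             {total_params:,}")
print(f"multiply-adds per image: {total_mult_adds:,}")
print(f"forward steps to train:  {training_forward_steps:,}")
```

Even this toy network holds more than six million parameters, and under these assumed settings the forward passes alone add up to tens of trillions of operations.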

So, for now, Corrado said, the D-Wave equipment is not something he and his team working on the Google Brain project are spending much time with.

That’s not to say other companies aren’t thinking about applying quantum computing to deep learning. Last month, two employees of defense contractor Lockheed Martin, which has also bought a D-Wave quantum computer, published a paper documenting their efforts to use a D-Wave machine to help train a deep neural network.
