Ellis: There’s definitely that. The crowd was the first thing we started building – engaging researchers, giving them things to do, and learning how to community-manage a group of hackers. It’s an interesting business problem to have. It’s a lot of fun, but it’s pretty unique. Making sure that they’re looked after is one of the reasons we put quite a bit of effort into making sure the customer is ready to be above board when we’re doing stuff like that.

With open programs, what you see researchers complain about is a lack of perceived transparency. All it takes is a company saying it’s a duplicate, when before they said it was whatever. As soon as that distrust is seeded into the community, it can spread.

Having us in the middle, we look after that and work very hard to advocate for them, and also to be seen as an advocate. We go to bat when stuff like that happens, because it does happen from time to time. People don’t understand what the problem is, and they won’t reward something that should have been rewarded. When that happens, the first thing we do is educate the tester as to how to communicate the issue more effectively to the customer. If that doesn’t hit the mark, we’ll jump in and help out a little.

VB: What sort of things are you learning from your work, from the crowd as a source of information? Has anything been particularly fascinating to you?

Ellis: The crowd is a lot more effective at getting this done than single people. I used to run a pen-test consultancy, as I said. If you look at the number of vulnerabilities per dollar spent, it’s usually on the order of three or five times with this type of model. The creativity applied is higher as well, although that’s harder to measure.

A lot of it goes back to the kind of job that we’re doing right now. One thing that’s been interesting, that surprised us along the way – and the reason this is an issue in the first place – is that the people who are cutting the code either don’t understand how to make their code secure, or they do, but it slows them down. For whatever reason, they’re not doing what they should to make it secure. It’s often because they’re not incentivized to do it. “Maybe I can skip this step and nothing will happen.” That’s pretty common.

The best way to get someone to believe in the boogeyman is to have him jump out of the closet and say, “Hey, I’m here.” Having a kid in Russia come in and say, “Hey, I hacked your stuff as a part of the bug bounty program,” the psychology of how it impacts the person who cut that code is very different compared to when it’s another employee. This is just some kid. He’s well-intentioned and helped this out, but you wonder what his neighbor could do. You’ve seen that someone from outside the company, someone who doesn’t know what you know about the code, can come in and hack it anyway. We didn’t anticipate or plan that, but it’s been pretty consistent. It’s neat.

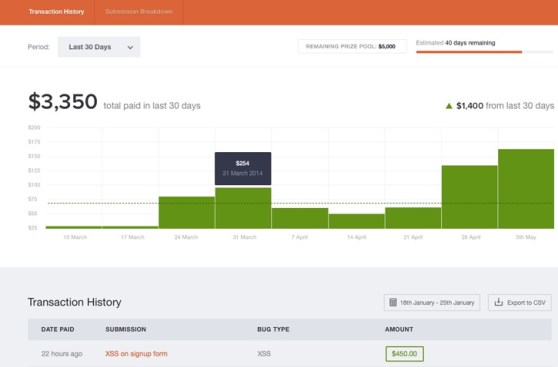

Above: Bugcrowd

VB: Is most of what you deal with pretty hackable?

Ellis: Anything can be compromised, generally speaking, with the right skills and with enough resources, whether it’s money or time. You apply enough money or time to the problem with the right people and you’ll get in eventually. There’s economic pragmatism that starts to kick in there, because not everyone is going to spend a bunch of time on that type of thing, but it’s very rare that we start a bounty for a customer and don’t have to escalate an issue to them in the first 24 or 48 hours. “Hey, we’ve just seen something come through and you need to look at it right now. This is not a drill. Wake the engineers up.” That’s a fairly regular occurrence.

VB: Back to some of these disclosure issues and all that, do you help on that front? How many days’ notice would somebody give before they expose the bug to the world? You could argue that exposing it allows the victim to prepare for a problem, whereas just telling the company 30 days ahead of time gives them time to patch it and all that.

Ellis: This is where the conversation is starting to get a bit murky, because there are two different conversations. There are conversations around hosted code, where it’s changed in one spot and then it’s fixed. Then there’s installable code or IoT or whatever, where you’ve fixed the code and then all of your customers need to update it. The timelines around things like disclosure are very different in those two cases, as well as the need for disclosure. If you’re running hosted code, there’s no real need to disclose an issue after it’s been resolved, unless it’s something you feel a proactive need to do. If it’s installed code, you need your customers to know to patch their stuff.

We released an open-source responsible disclosure framework, which was designed mostly around hosted code, with a guy called Jim Denaro at CipherLaw in D.C. The idea around that whole thing is, the problem that’s happening is that these researchers are already at the table doing stuff. Oftentimes, what we see now is, someone will find a bug in a site or whatever when they haven’t necessarily been given permission ahead of time to do that. They want to help and they get it in there, but because that’s such a confronting experience for someone who hasn’t had that happen to them before, they lawyer up or whatever and it’ll go downhill.

We let people run responsible disclosure programs for free on the platform. It’s just like, let’s get everyone starting to engage with the research community and feeling more comfortable about it. It’s better for the researchers and better for the companies who need to know how their stuff is going to get hacked.

VB: We have all these companies with their bug bounty programs. What real competition is out there for you?

Ellis: There are a few out there, a few kicking around here as well. There’s Synack. They’re an NSA company, doing their thing. There’s HackerOne as well, which came out of some of the Facebook guys, some guys from the Netherlands. It’s interesting. Looking back on the market, we were the first to come out and say, “We’re doing this.” We’re leading in a lot of ways from that perspective, and with the traction and customers we’ve had as well. But it’s definitely starting to become validated as a market. Other people are jumping in and getting funded.

VB: What do you guys do differently? Do you feel like you’re just ahead of them, or do you have something that makes you unique?

Ellis: Well, both. We’re ahead of them on execution and timing and so on, as well as experience with customers and handling both sides of the market, both supply and demand. As far as what we do that’s unique, if you think about it in terms of a spectrum of risk tolerance – risk tolerant and risk sensitive – you have Google and Facebook and these guys who are pretty happy to take some interesting risks and jump in to do this sort of thing as it stands. They make a big noise about it.

Over here you have financial services and companies that need this, but they’re not going to do anything that looks like that for quite some time, if ever. They’re trying to figure out how to apply it to their problems now. How do I get a better bang for the buck out of my security spend?

We address the whole stack. HackerOne is pretty much focused down this end, Synack is focused on this end. What we’ve done is said, “Let’s take companies that want to go loud and proud, grab them on, and then the ones who are further along the spectrum in a more conservative direction, we’ll give them a low-risk introduction to the concept and they can take it where they want to go with it.” If they want to replace their existing program with this, fine. If they want to move toward running an open program they can use for marketing and whatnot, fine. It’s not one size fits all.

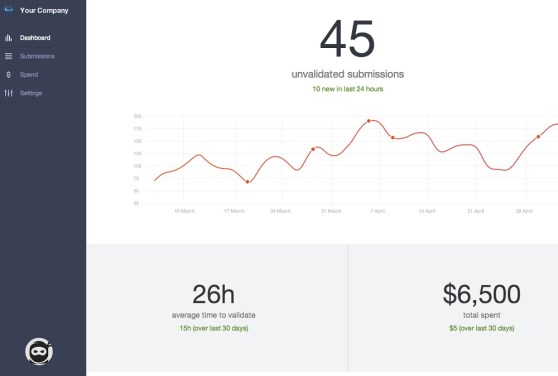

Above: Bugcrowd user screen

VB: Do you think you would position yourselves to handle five-alarm problems, like Heartbleed and so on?

Ellis: It’s an interesting one. Most of the bugs we see are in code that’s written by the customer, so it’s unique. But reasonably regularly we’ll see something come through in a framework and it turns out to be a zero-day in widely installed code. We run those disclosures up the flagpole so that people can fix it. The conversation around managing that process, but then also incentivizing the people who are capable of finding this stuff to find it, and then connecting to the people who can fix it, that’s an interesting problem. We’re playing with that. Right now we’re focused on customers, getting liquidity out to the crowd, getting stuff for them to do, and growing the company. But that’s an interesting concept on the side.

VB: How many people do you have on the team itself? What kind of money have you raised?

Ellis: We’re 12 people at the moment. We raised $1.6 million in September of last year through Paladin Capital and Icon Ventures. We have two models as far as revenue. One is a subscription model and the other is ad hoc, where we get a project and take a piece of that as it happens. That’s the lead-in for the subscription model – a pilot program, so to speak.

VB: What kind of growth rate are you expecting? What can you see at the moment as far as your number of researchers?

Ellis: We’re not doing deliberate marketing, at least not in the sense of going out and directly trying to acquire testers, except when we come to these things. The way we see it grow, it’s organic network effects through regional intraspace networks. One hacker will say, “Hey, I just got paid a bounty” and tweet about it or whatever. Their peers who might also do the same thing say, “Shit, that’s cool.” Then they jump in and start participating.

Numbers-wise, we have 10,500, maybe 10,600 researchers on the platform now. It’s been growing pretty consistently at 150 a week or so. We’re about 25 percent U.S.-based researchers. The next 25 percent are in India. Then the U.K., Australia, Germany, and others. We have some opportunities to do some cool stuff in South America and Eastern Europe. Those will be the next places we go on purpose.

VB: Some places are interesting because they have these people, but they seem like they’ve been pushed to the wrong side of the law.

Ellis: Yeah. They don’t have opportunities. Romania and Bulgaria and the like. The funny thing about that part of the world, what I’m told is that a lot of the reason for that is because these are all the children of people who had state-sponsored PhDs in things like maths and astrophysics. Now things are changing as far as the opportunities available for them to do this type of thing in a way that’s legit over there.

VB: Do you find much of this stuff happening in the gaming world?

Ellis: No, but we do have a gaming aspect to how we incentivize these guys. We have a ranking system, leaderboards, badges, all these different things. It’s a pre-existing part of hacker culture, this competitive spirit. A lot of how we’ve designed those aspects of it is to try to take those things that are already there and steer them, instead of just creating something brand new. Making games for hackers is a very fun problem to be working on.