Advanced Micro Devices has been talking a lot about its leadership in micro servers, the chips with simple cores and low power that can be densely packed into a hive of machines in Internet data centers. Naturally, that’s ruffled some feathers at Intel, AMD’s much larger competitor, which has a commanding lead in both high-end and low-end server chips.

So we talked to Jason Waxman, vice president of the cloud infrastructure group at Intel, about the computing giant’s view of disruptions in the cloud and the possible cannibalization that could happen if micro servers take off and eat at Intel’s high-end Xeon server processors. The conversation was interesting in part because, even though this is currently a small part of the server market, it is potentially one of the most volatile.

Here’s an edited transcript of our conversation.

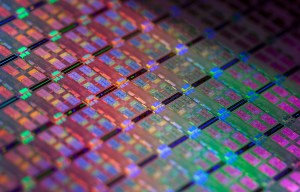

Above: Intel Avoton chip

VentureBeat: I listened to Intel’s earnings call. It sounded like cloud revenues were up about 40 percent in the quarter. That’s pretty good growth.

Jason Waxman: Yeah. The business has been very good in general. Just to provide an opening summary, there is a lot of growth being driven by cloud service providers, but also we’re seeing enterprises driving toward developing their own private cloud plans. Both of those things are showing high growth rates.

One of the things my group does is look at really large, hyper-scale data centers. We think about every piece of technology they’re looking to optimize: customizing it for their workloads, tuning application development for more performance or better scaling, finding ways to optimize the systems themselves for unique requirements, or even the facility. We have people on my team looking at innovation in every layer of that stack.

A big portion of what we do is thinking about the silicon technology that customers want to drive in that space. To that end, we’ve continued to see a lot of success with the mainstream Xeon product family. The vast majority of all of the servers being deployed in cloud service providers are standard two-socket Xeons, although some of them are tweaked for the unique parameters of those providers.

VB: And the low-end too?

Waxman: We’ve also started looking at emerging applications in different portions of the spectrum. Toward the low end, we’ve seen some applications that might benefit from SoC (system on a chip) technology, and that’s part of the reason we announced our second generation of Atom SoCs, called Avoton, which is built on our 22-nanometer manufacturing process. We also have Xeon E3s that go as low as 13 watts in that space. We’re trying to make sure we have targeted solutions.

We’re also targeting the product toward segments that are new for us, particularly cold storage, where some of the cloud service providers have a large storage requirement for data that isn’t accessed much. It’s “cold” because you’re storing things like photos that aren’t accessed all that often. Low-power SoCs are great for that emerging application, and they represent growth for us. The same goes for networking, where Intel’s market share is only 10 or 15 percent. Driving SoCs that allow people to make switches more programmable is also an area of growth for us. We’ve created a product based on Avoton called Rangeley for that segment, which includes some network-specific offloads and accelerators. […] density into that space. We’re driving a leadership product line there.

We’re trying to broaden our portfolio, because there is so much growth in cloud, to make sure that all those technology components can come from Intel.

VB: What’s the area that’s been getting the most attention here? Is it the micro servers? How would you characterize what’s getting the most press these days?

Waxman: It’s funny. Micro servers are getting what I would call maybe a disproportionate share of the attention, given that they remain a pretty small portion of the market. There’s a lot of interest in those three segments: micro servers, entry storage, and networking. That’s a high growth area because there’s so much change.

Software-defined networking is also getting a lot of attention, along with the shift toward making switches and networks more programmable. Network function virtualization is obviously getting a lot of focus alongside that. We’ve also seen a lot of interest in silicon photonics and the ability to use optics that meet a range of different requirements – distance, cost, and power – that the market doesn’t have today.

Big data is the new buzzword. People are interested in how we’re helping to bring it into the mainstream, just like we did with cloud. When you look at the fundamentals of that technology, we built it on volume economics. Insofar as you can make technology more affordable and easier to integrate, there’s more market demand for it. We’re taking that same view toward big data solutions. How do we work with the industry to make them easier to deploy?

VB: It seems like you think the attention to the micro server business is greater than it deserves right now. What’s your view of how much of the business it has become? AMD and the other advocates in the market are suggesting that it could be 10 or 20 percent. I think they said it was four to six percent right now. What’s your view of where it actually is and what it’s going to grow to?

Waxman: When it comes to forecasts, I’ll leave that to the people that do that best. The Gartners and the IDCs are probably better at that. Anything that’s nascent, you never know what the growth trajectory is going to look like. The only strategy that I can execute to is to make sure that we have the best products and that we can win. Regardless of whether it’s two percent or 20 percent, our job is to make sure that we deliver the best platform.

That said, I think we have a good handle on how big the micro server market is today. From our perspective, it’s roughly one percent of server units by volume. That’s Atom SoCs plus Xeon E3s plus Core, anything that falls into the category of single-socket shared infrastructure.

VB: There has been concern that this category might cannibalize the more expensive server chips. That percentage suggests that isn’t really happening, though.

Waxman: You never know what the volume ramp looks like. Some people think that it’s a hockey stick and some people paint it as a flat line. From a strategy perspective, what we decided to do is to make sure that, because we don’t know, it’s important for us to lead. When customers want something, we want to make sure we’re delivering the best product.

Part of the reason that we came to market with the Atom processor C2000 was because customers in that space told us very clearly what they wanted. They wanted 64-bit capability. They wanted competitive performance. They wanted energy efficiency. They wanted all the cloud data center class features, like ECC and virtualization technology. And so the product that we developed wasn’t so much predicated on whether the market was one or three or five percent, but more about making sure that if there is an emerging segment here, we have a leadership class product. We’re proud of the fact that we’ve delivered our second-generation 64-bit Atom SoC, which is best in class for that workload, before competitors have come out with their first generation.

VB: ARM is active in the space as well. How do you look at that competitive threat?

Waxman: We always take the competition very seriously. The reality is that it’s not ARM, but it’s the cadre of different SoC vendors that are looking at their own approach into the market. Our goal is to make sure that we’re providing the best products and derivatives to address the span of the market. The customers, whether they’re looking at micro servers or cold storage or networking, buy based off of their total cost of ownership. What’s going to give them the best solution? That’s a combination of performance, power, density, and the features that matter.

We feel confident that what we’re delivering is not only best in class for those segments, but that we’re doing it in a way that lets people retain their software investment. That’s important. Software compatibility has been part of Intel’s value since the early days of x86. It’s part of what’s led us to success in the server market. We want to deliver that leadership product while maintaining the user’s investment.

Above: Server farm with cooling

VB: These guys have not really executed so far. They seem to be putting things off until next year almost every year. The competition seems to be moving slowly in this sector, even though it’s been talked about a lot.

Waxman: That’s one of the things that’s challenging about the server market. Particularly when you look at some of the growth segments in the data center. If you look at cloud computing or high performance computing, customers are telling us that they still want to see a fast performance growth rate. They want to see repeatable improvements.

VB: How does this look to you? It seems like a lot of competitors are battling for what is now a very small piece of the market.

Waxman: It’s hard to contest that view. [chuckles]

VB: AMD recently announced its deal with Verizon. AMD said its Opteron-based micro server chips are going into Verizon’s cloud infrastructure. I think you contend that a lot of that cloud infrastructure, especially the SeaMicro installations, is already based on Intel.

Waxman: I always like to let the customers disclose what architecture they’re using. It’s their call. But I do think Andrew [Feldman, SeaMicro’s founder, now at AMD] did seem to acknowledge that a sizable portion was based on Intel architecture.

VB: What about the highest end of the market? How would you describe the dynamics there?

Waxman: Talking about data centers, there are two trends that are important. One is, again, a continued demand for more performance and capability. The rationale is simple. A lot of hyper scale data centers, whether cloud or high performance computing, they’re looking at just sheer numbers of systems that they want to deploy. If, as an example, you could get 10 or 15 percent more performance, that might not seem like much, but if you look at it in the context of 100,000 servers and avoiding the overhead of 10,000 to 15,000 servers, it’s a sizable amount. We continue to get requests from customers to find new ways to get even more performance. One of the things we’ve talked about a bit is that we’re getting more requests for customization and optimization by customers for their particular workload so they can eke a little more value out of our servers.
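The fleet arithmetic can be written out, assuming (as a simplification not stated in the interview) that the quoted gain $s$ applies uniformly to every one of $N$ servers. Read as extra capacity, the gain equals $Ns$ of today’s servers, which gives the 10,000 to 15,000 figure; holding the workload fixed instead, the servers avoided come out slightly lower:

$$
\text{capacity gained} = N s = 100{,}000 \times [0.10,\ 0.15] = 10{,}000 \text{ to } 15{,}000 \text{ servers' worth}
$$

$$
\text{servers avoided (fixed workload)} = N\left(1 - \frac{1}{1+s}\right) = \frac{N s}{1+s} \approx 9{,}091 \text{ to } 13{,}043
$$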

Splitting the enterprise market from the scale-out market, one of the things that differentiates them at the high end is the desire for reliability features and things such as shared memory. We have the E7 product lines for large mission critical databases with higher levels of reliability, error correcting circuitry. You have other segments of the market that don’t necessarily value the shared memory, but they just want more performance.

Above: Server farm

VB: What do you think about GPU computing and how it’s making its way into data centers?

Waxman: We talk about this a lot. Every time we look at an innovation in our silicon, if it requires software modification, there’s always this escape velocity that’s required. Meaning that sometimes that general purpose Xeon covers so much and can do so much that it’s hard to come up with something that’s differentiated for a different segment. That’s the border line where GPUs tend to reside. It’s difficult, in many cases, for people to program them. They need to make investments in it. By the time they tap that potential, they could get almost equivalent performance from the Moore’s Law gains we deliver with the next generation of Xeon processors. There’s a constant treadmill that is difficult to escape from.

VB: If you’re looking out a few years, what are the trends that you see and that you’ll have to adapt to over time?

Waxman: I think that there are a number of them. One is, we’ll continue to see hyper scale and large scale computing becoming a bigger portion of the business. For us, we have to think about how our technologies deploy at scale, and how we’re allowing these users that will have these large application-centric data centers to optimize and get the most capability out of it.

Another trend that I see is just the sheer desire to simplify and make each of the platforms more programmable. If you think about compute and network and storage, each of them has largely been vertical stacks at various points in time. Now servers have migrated to a horizontal solution.

I think people are looking to bring that same type of mentality into networking, as one example. That’s part of the reason we see the desire for software defined networking and network function virtualization. With network function virtualization in particular, you had special purpose hardware, and now you’d like to be able to run some of those applications and workloads on a standard Intel-based platform.

The third one is around big data. I’m a believer that, as much hype and growth as there has been around cloud, the potential for big data is substantially larger. It goes back to the economic drivers. If you look at simplifying IT – say, a half a trillion dollar per year industry – if you look at solving major problems in health care or manufacturing or government, or opportunities for more optimized marketing and supply chains, you’re talking about trillions of dollars worth of economic value. It’s going to be a big growth driver, particularly as we connect the internet of things to the analytics in the data center.

Above: Server farm side

VB: How do you convey some of this to regular folks? Paul Otellini (former CEO of Intel) used to say that for every 50 cell phones sold, somebody has to deploy a server. What are some of the things you like to draw out that way?

Waxman: We haven’t quite gotten it down to a science yet, what the ratio is going to be for the internet of things. We’ve seen forecasts of anywhere from 15 billion to 50 billion connected devices that are going to be doing computing. These range from sensors embedded in homes managing energy networks, to smarter manufacturing equipment, to wearables that might be monitoring people’s vital signs. You think about this huge proliferation of devices that will be computing. They need to be connected. They’re all going to be giving off data. You need a place to store that data and to analyze that data.

It’s too early to tell exactly whether one server for every 200 devices will hold, but there’s little doubt in my mind that once people get a taste of the insights and information coming off of those 15 billion plus devices, it’s going to represent a great driver for the data center business.
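To put the ratio in perspective, here is the back-of-the-envelope division, assuming the one-server-per-200-devices ratio Waxman floats (which he explicitly does not commit to) and the low end of the 15 billion device forecasts:

$$
\frac{15 \times 10^{9}\ \text{devices}}{200\ \text{devices per server}} = 7.5 \times 10^{7} \approx 75 \text{ million servers}
$$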

VB: What’s going to be interesting in the news in the next six months or a year, regarding the server market?

Waxman: The increased focus on network transformation is probably going to be one of the big headlines over the next six months. The need is clearly there to simplify networks, particularly as there’s more complexity. By “more complexity” I mean that the data centers themselves are getting bigger and need to manage that traffic. You also have companies looking to combine their own private clouds with public clouds, so they have to manage their network across different organizational boundaries. All those things are driving transformation.

The two outcomes of that are going to be, first, network function virtualization, where more people will want to run those workloads virtualized on general purpose switches with Intel-based control planes rather than on special purpose hardware. Second, how that’s all managed through a software defined network. If the announcements so far around OpenDaylight, VMware’s acquisitions, or what some of the folks in the startup community are doing seemed interesting, the forthcoming announcements from industry leaders over the next six months are going to be even more interesting. People will have to figure out the standards, and how software defined networks will plug in with different types of orchestration solutions, whether that be VMware, Microsoft, or OpenStack. There’s a tremendous amount of change, and I would expect we’ll see a lot of news from leading vendors around it.

VB: What about the notion of where processing is going to happen? Whether that’s local or in the cloud. What’s the latest thinking on that?

Waxman: It will continue to ebb and flow based on connectivity. It’ll test some of the norms of what people think. It’s funny, because we interact with our devices, so we think of gesture or voice as a client-side activity. But gesture recognition or voice recognition largely works by constantly taking samples of something, a picture or a pattern, and comparing them against a huge database. If I say a certain word, how do I know what the best match for that is? It’s hard, at least today, to fit the type of database that can provide great speech recognition in a single client device. Things that require huge amounts of compute or breakthrough capabilities may lean more heavily on the data center for compute.

On the flip side, there are things that have been traditional data center activities, but due to bandwidth and connectivity, they’re moving more toward the client. Back to big data for a moment, the whole notion behind some of the frameworks that have been produced is that you move the compute to the data rather than moving the data all the time. If you think about a network of surveillance cameras, you could take all the video and pictures from those cameras and try to consolidate them in the data center. But that becomes pretty costly from a bandwidth and storage perspective. What you’d rather do is have a camera signal when it sees a certain event, which means the compute moves from the data center out to the edge, the device itself. The device identifies an object or an event and sends the relevant data back to the data center when it’s needed. The traditional view of where compute is done can change depending on how much data is involved. The rule becomes that the compute moves to where the data resides.
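The camera example can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any vendor’s design: the `Frame` type, the per-frame `motion_score` produced on the camera, the 0.8 threshold, and the helper names are all hypothetical. The point is only that the event check runs on the device, so frames without an event never cross the network.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Frame:
    """One captured frame. motion_score is a hypothetical on-camera detector output in [0, 1]."""
    camera_id: str
    timestamp: float
    motion_score: float


def frames_to_upload(frames: Iterable[Frame], threshold: float = 0.8) -> List[Frame]:
    """Run the event check at the edge and keep only frames that signal an event.

    Frames below the (hypothetical) threshold stay on the device instead of
    being shipped to the data center.
    """
    return [f for f in frames if f.motion_score >= threshold]


def fraction_not_sent(total: int, sent: int) -> float:
    """Share of captured frames the camera never had to transmit."""
    if total == 0:
        return 0.0
    return 1.0 - sent / total


if __name__ == "__main__":
    captured = [
        Frame("cam-1", 0.0, 0.05),
        Frame("cam-1", 1.0, 0.10),
        Frame("cam-1", 2.0, 0.92),  # e.g. someone walks into view
        Frame("cam-1", 3.0, 0.07),
        Frame("cam-1", 4.0, 0.03),
    ]
    uploaded = frames_to_upload(captured)
    print(len(uploaded), fraction_not_sent(len(captured), len(uploaded)))
```

In this toy run only one of five frames is uploaded; in a real deployment the detector, threshold, and what counts as “relevant data” would be workload-specific choices.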

VB: The internet of things, does that cause you guys to make some changes? Did you start thinking about it a long time ago, or is it more recent? It reminds me of the transition from PCs to mobile, how long that took, and the different calculations and bets that companies made to get ready for that. I wonder what the internet of things causes you guys to do now that you’re talking about it a lot more this year.

Waxman: You could say that there’s an inflection of a number of different things that come together. Our roots in the space could be traced back at least a decade into some of the things we’ve done for the embedded market. A lot of the intelligence in the internet of things is reserved for the smartest, if you will, of machines. That could be computer numerically controlled factory equipment, as an example.

But like a lot of things, as compute becomes more powerful and cheaper, people find new uses for it. One of the things we’re seeing is a natural evolution. Now you can get 10X the capability in a given type of silicon package compared with what you had just three or four years ago. That allows people to embed intelligence in devices where it really wasn’t feasible before.

While we’ve been continuing to look at applications in retail or manufacturing or health care or energy all along, the thing we’ve noticed is that there’s this huge pyramid of devices. At the base of that pyramid, where there’s a lot of volume, you’ve got what most would consider pretty dumb, rudimentary sensors out there. It opens up an opportunity. How can someone harvest all of those sensors that are embedded in all of these devices and start to add intelligence?

You’ve got the convergence of all these different things. That led us, a couple of years ago, to start looking at how we can produce Intel silicon that hits the right price point, performance, and power to address that new range of capabilities. That’s what led us to announce Quark. You combine that with having a chief executive that knows fabs better than anybody in the industry, and it lends itself to a powerful strategy as far as how we can go make Intel architecture ubiquitous through a new architecture such as Quark.

Above: Intel Xeon Ivy Town chip

VB: Do you think all of the servers in the world are going to wind up in Iceland?

Waxman: [laughs] I don’t think they have to. It’s interesting. One would think that locating a server where it’s cold all the time would certainly reduce the cooling costs. But one of the things that’s funny is that a lot of data center location is more tied to the latency and the speed of light. Number two, it’s the humidity, or cheap power.

Certainly if Iceland has cheap power and it’s easy to cool, those are big drivers. But we’ve also found that you can do free cooling in even hot temperatures. There are companies doing it in Arizona and Las Vegas. You wouldn’t think that would be an easy place to run a free cooling data center, but it has a lot more to do with humidity as a key driver than the actual temperature. Availability of power and proximity to population will continue to be the two primary drivers. But it’s interesting to see the different approaches that people take to free cooling.

VB: What do you notice about the behavior of some of the folks who buy most of these things – Google, Facebook, Apple, Amazon. Are there any points you’d like to make about the behavior of the biggest server customers?

Waxman: One of the things that’s been interesting for me in learning from them and their requirements is their perspective: they have giant data centers designed to deliver an application. Traditional data centers were designed to offer a few servers here, a few servers there, with many different types of applications. The desire to optimize for that scale brings a whole new perspective to some of the challenges they have.

One of them is consistency. They like repeatable. They like consistent. The more that you do things that are one-off or variable, the more it creates that fly in the ointment effect. What we want to go do is provide them with that reliability and consistency. That’s part of the reason that having a consistent instruction set and compatibility is important.

The other element is that they’re always looking for competitive advantage. They’re looking to deploy the technology sooner. We had a number of our largest cloud customers deploying the latest generation Xeon E5-2600s before they were publicly launched, because there’s so much demand to get a better time to market. It’s all about economics and competitive advantage.

That also leads me to the conventional wisdom. Sometimes we hear our competition talk about how they’re designing for the large cloud service provider design point and touting power efficiency. That’s certainly of some interest to those providers. But by far the biggest driver is getting more performance and more capacity out of these massive data centers. It separates the truth of what people are deploying from what’s sometimes just industry hype.