Brave new architecture

The chip has a radical new architecture that differs from the basic computing architecture, first laid out by pioneering computer scientist John von Neumann, that has ruled computing for 68 years. In von Neumann machines, memory and processor are separated and linked via a data pathway known as a bus. Over the years, von Neumann machines have gotten faster by sending more and more data at higher speeds across the bus as processor and memory interact. But the speed of a computer is often limited by the capacity of that bus, leading some computer scientists to call it the “von Neumann bottleneck.”

With the human brain, the memory is located in the same place as the processor — at least, that’s how it appears, based on our current understanding of what is admittedly a still-mysterious 3 pounds of meat in our heads.

“After years of collaboration with IBM, we are now a step closer to building a computer similar to our brain,” said Cornell University professor Rajit Manohar in a statement.

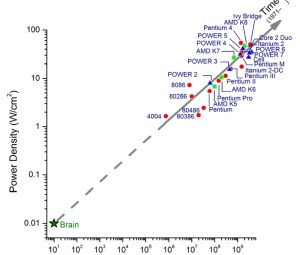

The brain-like processors with integrated memory are slow by traditional measures, sending data at a mere 10 hertz, far slower than today’s 5-gigahertz computer processors. But the human brain does an awful lot of work in parallel, sending signals out in all directions and getting the brain’s neurons to work simultaneously. Because the brain has more than 10 billion neurons and 10 trillion connections (synapses) between those neurons, that adds up to an enormous amount of computing power.
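A rough back-of-the-envelope calculation shows why massive parallelism can make up for slow individual signals. The sketch below uses only the figures quoted above (10 hertz, 10 trillion synapses, 5 gigahertz). Treating every synapse as handling one event per cycle, and a conventional core as performing one operation per clock tick, are simplifying assumptions for illustration, not claims about the brain or about any real processor.

```python
# Back-of-the-envelope comparison using the figures quoted in the article.
# Assumption (illustrative only): every synapse handles one event per cycle,
# and a conventional core performs one operation per clock tick.

BRAIN_RATE_HZ = 10            # signalling rate cited in the article
SYNAPSES = 10 * 10**12        # "10 trillion connections"
CPU_CLOCK_HZ = 5 * 10**9      # "5 gigahertz"


def aggregate_events_per_second(rate_hz: int, units: int) -> int:
    """Total events per second when `units` elements all work in parallel."""
    return rate_hz * units


brain_events = aggregate_events_per_second(BRAIN_RATE_HZ, SYNAPSES)
cpu_ops = aggregate_events_per_second(CPU_CLOCK_HZ, 1)

print(f"Parallel synaptic events per second: {brain_events:.0e}")
print(f"Serial operations per second at 5 GHz: {cpu_ops:.0e}")
print(f"Ratio: {brain_events // cpu_ops:,}x")
```

Under these assumptions the slow but parallel system comes out roughly 20,000 times ahead in raw events per second, which is the intuition behind the comparison.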

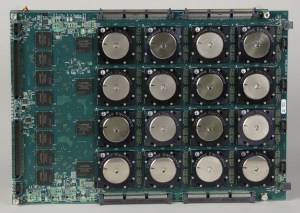

IBM is emulating that architecture with its new chips.

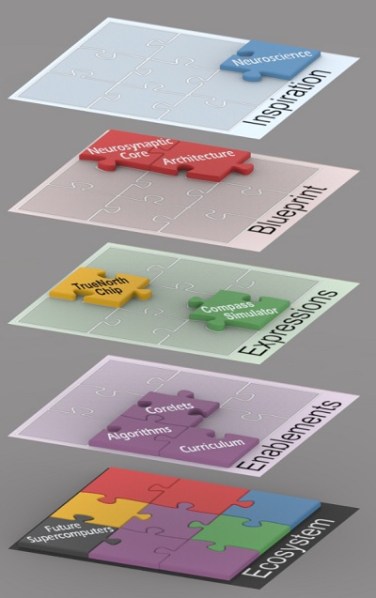

This new computing unit, or core, is analogous to the brain. It has “neurons,” or digital processors that compute information. It has “synapses,” which are the foundation of learning and memory. And it has “axons,” or data pathways that connect the tissue of the computer.
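To make the analogy concrete, here is a minimal toy model of such a core. The class name `ToyCore`, the on/off connection grid, and the threshold rule are all hypothetical choices for illustration; the article does not describe IBM's circuit design at this level of detail.

```python
# Toy model of a "core": axons carry input spikes, synapses are a grid of
# on/off connections, and neurons sum what arrives and fire past a threshold.
# All names and the firing rule are illustrative assumptions, not IBM's design.
from dataclasses import dataclass
from typing import List


@dataclass
class ToyCore:
    synapses: List[List[int]]   # synapses[a][n] == 1 if axon a connects to neuron n
    threshold: int = 2          # hypothetical firing threshold

    def step(self, axon_spikes: List[int]) -> List[int]:
        """Deliver one round of axon spikes and return which neurons fire."""
        n_neurons = len(self.synapses[0])
        totals = [0] * n_neurons
        for axon, spiking in enumerate(axon_spikes):
            if not spiking:
                continue        # silent axons trigger no work (event-driven)
            for neuron in range(n_neurons):
                totals[neuron] += self.synapses[axon][neuron]
        return [1 if total >= self.threshold else 0 for total in totals]


core = ToyCore(synapses=[[1, 0, 1],
                         [1, 1, 0],
                         [0, 1, 1]])
print(core.step([1, 1, 0]))   # only neuron 0 receives two spikes and fires
```

Note that the connection grid (the "memory") lives inside the same object that does the computing, which mirrors the idea of keeping memory and processor in one place.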

The work combines supercomputing, nanotechnology, and neuroscience in an effort to move beyond calculation to perception.

“We are using totally different design techniques than those used to create current computer chips,” Modha said. “It’s scalable, efficient, and flexible.”

Modha said that this new kind of computing will likely complement, rather than replace, von Neumann machines, which have become good at solving problems involving math, serial processing, and business computations. The disadvantage is that those machines no longer scale up well to handle big problems. They use too much power and are becoming harder to program.

“This is like the milk to von Neumann’s cookies,” Modha said. “French fries and ketchup, yin and yang. This architecture puts the anatomy and physiology of the brain in today’s silicon. It’s a parallel, distributed, modular, event-driven, and fault-tolerant architecture.”

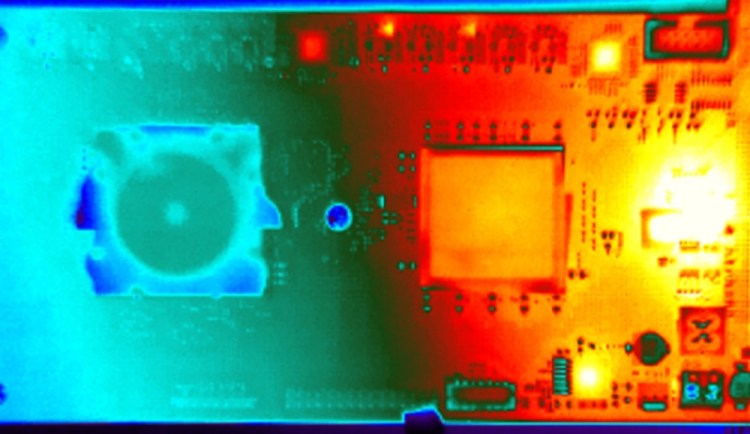

The more powerful a computer gets, the more power it consumes, and manufacturing requires extremely precise and expensive technologies. And the more components are crammed together onto a single chip, the more they “leak” power, even in stand-by mode. So they are not so easily turned off to save power. The advantage of the human brain is that it operates on very low power and it can essentially turn off parts of the brain when they aren’t in use.

These new chips won’t be programmed in the traditional way. Cognitive computers are expected to learn through experiences, find correlations, create hypotheses, remember, and learn from the outcomes. They mimic the brain’s “structural and synaptic plasticity.” The processing is distributed and parallel, not centralized and serial.

With no set programming, the computing cores that the researchers have built can mimic the event-driven brain, which wakes up to perform a task.

The so-called “neurosynaptic computing chips” re-create a phenomenon known in the brain as “spiking” between neurons and synapses. The system can handle complex tasks such as playing a game of Pong, the original computer game from Atari. IBM demonstrated the chip playing Pong a couple of years ago, and in 2013 it got a tech community started on learning how to program the chip.
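The “spiking” behavior mentioned above can be illustrated with a leaky integrate-and-fire neuron, a common textbook abstraction. This is a minimal sketch, not the chip's actual neuron model: the function name `simulate_spikes`, the leak factor, the threshold, and the input values are all assumptions chosen for illustration.

```python
# Minimal leaky integrate-and-fire neuron, a common textbook abstraction of
# spiking. The leak, threshold, and input values are illustrative assumptions.
from typing import Iterable, List


def simulate_spikes(inputs: Iterable[float],
                    leak: float = 0.5,
                    threshold: float = 1.0) -> List[int]:
    """Return 1 for each time step at which the neuron spikes, else 0."""
    potential = 0.0
    spikes = []
    for current in inputs:
        potential = potential * leak + current   # decay, then integrate input
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0                      # reset after firing
        else:
            spikes.append(0)
    return spikes


print(simulate_spikes([0.5, 0.75, 0.0, 0.5, 0.5, 0.5]))
```

The neuron stays silent until enough input accumulates in a short window, fires once, and then resets. Steady weak input that leaks away faster than it builds up never produces a spike.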

As we noted in 2011, the eventual applications could have a huge impact on business, science, and government. The idea is to create computers that are better at handling real-world sensory problems than today’s computers are. IBM could also build a better Watson, the computer that became the world champion at the game show Jeopardy.

Richard Doherty, an analyst at Envisioneering Group, has followed the research for a long time. He compares the importance of the Synapse technology, and the kits for programming it, to the launch of the Apple II in 1977.

“Outside engineers, programmers, neurologists, psychologists will tinker, improve, quicken and pour it all into a better IBM Synapse and some new startups,” he said. “We must have processors which will use the human senses we all identify with and feel comfortable with” so we can sift information from noise.

A long time in the making

I wrote about this when IBM announced the project in November 2008 and again when it hit its first milestone in November 2009. The company hit new milestones in 2011 and 2013.

With the grant from DARPA, the project took the work of eight IBM labs, Samsung, a startup, four universities (Cornell, the University of Wisconsin, University of California at Merced, and Columbia) and a number of government researchers from two Department of Energy Labs.

It took so long because it required the creation of an unconventional architecture, custom design of hardware and software, and new manufacturing processes. The team built new simulations, algorithms, libraries, training programs, and applications.

“It was genuine collaboration and sheer hard work,” Modha said.

After the design was done, the leaders offered a $1,000 bottle of champagne to anyone who could find a bug in the chip. After a month, no one had found one.

“We had cheap wine for everybody at the end of the month,” Modha said.

Applications are likely to be in analyzing sensor data in real time and sifting through the ambiguity found in complex, real-world environments. Modha said companies can embed this brain chip in sensors.

Modha said the human eye sifts through a terabyte of data per day. Today, we record the world pixel by pixel. But the human retina detects changes in a scene. When it detects changes, the neurons spike. A Swiss partner provides a dynamic vision camera that connects to the chip and enables vision-like processing.
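The difference between recording every pixel and reporting only changes can be sketched in a few lines. The function `change_events`, the brightness threshold, and the `(row, col, polarity)` event format below are hypothetical and do not describe the output of any particular camera.

```python
# Frame-based vs. change-based sensing on a tiny grayscale "image".
# The threshold and the event tuple format are illustrative assumptions and
# do not describe any particular camera's output format.
from typing import List, Tuple

Frame = List[List[int]]


def change_events(prev: Frame, curr: Frame,
                  threshold: int = 10) -> List[Tuple[int, int, int]]:
    """Return (row, col, polarity) for pixels whose brightness changed enough."""
    events = []
    for r, (prev_row, curr_row) in enumerate(zip(prev, curr)):
        for c, (before, after) in enumerate(zip(prev_row, curr_row)):
            delta = after - before
            if abs(delta) >= threshold:
                events.append((r, c, 1 if delta > 0 else -1))
    return events


frame_a = [[100, 100, 100],
           [100, 100, 100]]
frame_b = [[100, 150, 100],
           [100, 100,  60]]

events = change_events(frame_a, frame_b)
print(events)   # only the two changed pixels produce events, not all six
```

A static scene produces no events at all, so downstream processing has nothing to do until something moves.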

“This can revolutionize the mobile experience as we know it,” Modha said. “It can take visual, auditory, multisensory data to mobile, to the cloud, and to a new generation of supercomputers and distributed sensors.”

In closing, Modha said, “We haven’t built a brain. But we have taken the functions, structure, behavior, and capability and put it into silicon to enable a new computing capability. The chip has the potential to transform business, government, science, technology, and society.

“That’s my story, and I’m sticking to it.”

As for shipping the chips, Modha said it is still a research project. More than 200 people worked on it, investing 200 person-years in the project.

“The commercialization part is where we are engaged with a number of discussions with business partners, universities, government agencies, and fellow IBMers to move it out of the lab,” Modha said.

Science magazine is publishing a paper describing the project today.
