Earlier this month Microsoft shared news of new bots in the Skype Bot Shop, including ones from StubHub, Hipmunk, and IFTTT.

Also released that day but not publicized was Your Face, a bot created by Microsoft that combines computer vision, emotion recognition, and facial recognition APIs from Microsoft Cognitive Services.

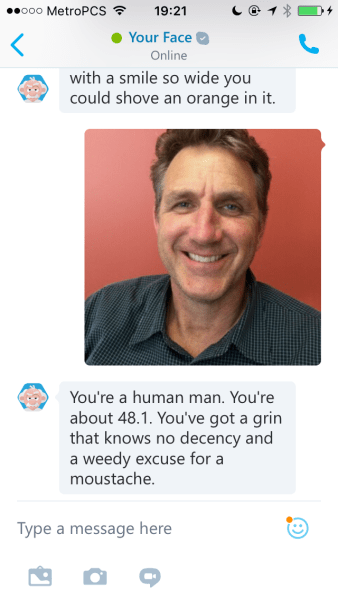

Your Face doesn’t have a name like Siri or Cortana, but he has the face of an old man and is a pretty salty curmudgeon. Upload any photo or GIF and the bot will guess the subject’s age, analyze their expression, and share a few opinions about the face, sprinkled with salty curmudgeonness. Upload a photo or GIF of your own face and it will probably insult you. It’s one of the first artificial intelligence-driven bots from Microsoft to dole out insults since its Tay bot made racist, anti-Semitic remarks on Twitter in March.

Your Face called VentureBeat editor in chief Blaise Zerega a man with “a grin that knows no decency and a weedy excuse for a mustache.”

For the record, Zerega does not have a mustache. No comment about his grin, however.

Your Face, which uses the face API to identify mustaches, likes to talk a fair deal of trash about them. It made fun of my “itsy bitsy mustache” on more than one occasion, a comment that hits a little close to home for guys like me who grow very little facial hair. It’s basically always peach fuzz.

I shared with Your Face some of the random things that can be found in my Camera Roll. As we saw with the performance of the WTF Is That bot, computer vision results can vary.

This GIF of a sloth wearing a monocle was accurately identified as an animal, and the robot photo I uploaded was accurately identified as a toy, but there were instances when Your Face demonstrated less-than-accurate vision.

When it saw the old Budweiser mascot Spuds MacKenzie, it said “I don’t share your strange obsession with dogs sitting on tables,” when in fact Spuds was about to play the drums.

A man wearing a horse mask and holding a cocktail was incorrectly “seen” as a man standing next to a horse.

After sharing 20 photos, what was most striking to me was Your Face’s consistent inability to correctly discern men from women. A photo I took at a Girls in Tech event was inaccurately identified as a 36-year-old man.

Like Your Face, Microsoft’s Xiaoice and Tay bots can identify a person’s gender, emotion, and age, and comment on their appearance.

Lili Cheng is manager of FUSE Labs at Microsoft Research. She helped build Xiaoice and Tay, and her experience building chat apps for Microsoft goes back to the 1990s.

She talked about Xiaoice and Tay in June at Botness, a two-day gathering of developers, industry leaders, and entrepreneurs that she helped organize.

Xiaoice was born out of a hackathon and a collaboration between the Microsoft Asia and Bing teams, Cheng said. With the personality of a teenage girl, Xiaoice went live on WeChat, where she was invited to join 1.5 million chat groups and spoke with 10 million users before WeChat shut her down.

“It kind of increased the hype a little actually by shutting it down immediately because people were like what was that?” Cheng said.

In June 2016, Xiaoice had 40 million users.

Along with the ability to carry on idle chitchat, Xiaoice can search for information, answer questions, schedule reminders, perform real-time translation, and offer emotional support. She has also done the weather report, calling herself the “first artificial intelligence lady to be on television.” As with Alexa, new Xiaoice skills are released weekly.

It was in this context that Microsoft debuted Tay, a bot designed to have a personality with a bit of an edge and a willingness to insult you.

“Our biggest worry with Tay was no one would pay attention. That wasn’t really the case,” Cheng said.

Xiaoice had essentially become China’s first AI-powered celebrity. Tay, by contrast, was within 24 hours a Trump voter tweeting quotes from Hitler’s Mein Kampf and bragging about smoking weed in front of the police. The Tay Twitter account was shut down and has yet to return.

Weeks before Satya Nadella declared conversation as a platform to be as important to computing as the graphical user interface, company executives were apologizing for a racist, sexist chatbot that got famous for the wrong reasons. That’s roughly the opposite of the rollout you want, and likely very different from what the company initially planned, with Tay leading the marketing charge to convince people that natural language is “the new interface.”

An analysis of Twitter data by Microsoft found that specific groups fed information to Tay, Cheng said.

“The kind of gamer trolls were at the core of a lot of the misbehavior but then we had a lot of anti-feminists, there were a lot of Trump supporters, and then a lot of tech people,” she said.

Last month Skype added the ability to invite bots into group chat. Cheng believes Tay performed better in groups.

“I think the difference between a chatbot that you interact with friends and in small groups and something that’s really public and on the internet, it just surprised us. We probably should’ve thought more about and we will the next time,” she said.

Tay and Your Face insult people with words, but photo analysis can get companies in trouble too.

Last summer, Google issued an apology when its computer vision falsely identified a British woman of African descent as a gorilla. This summer, Google drew criticism again when people noticed the sharp difference between Google Image search results for “three black boys” and “three white boys.”

Telling someone they have a politician’s smile is a lot less risky than creating a bot that ends up quoting Hitler. Even if Your Face is just lighthearted fun, it’s nice to see Microsoft build something with a sense of humor again.

People disagree over whether bots will replace apps and whether bots are overhyped, but speak to developers making bots, engineers training AI, or tech giants like Microsoft, and everyone agrees that natural language processing isn’t perfect and that personal assistants still have progress to make.

Both Tay and Xiaoice are products of experiments that were instrumental to the creation of the Microsoft Bot Framework, Cheng said.

If bots are going to change business, help address homelessness, act as lawyers, or help citizens connect with government services, as Microsoft is testing in Singapore, let’s hope experiments at Microsoft and elsewhere stay feral and wild. On platforms used by billions of people, there’s more at stake than dad jokes and pizza.