Nvidia is forging ahead with its plans to revolutionize supercomputers and workstations with a number of announcements today. Among them, the Barcelona Supercomputing Center will be the first in the world to adopt a solution that combines Nvidia’s low-power ARM-based Tegra central processing units (CPUs) with Nvidia’s graphics processing units (GPUs).

That combination could be a powerful way to increase computing power without consuming a lot of electricity. Power consumption, and the electricity costs that go with it, are now the No. 1 expense in maintaining a supercomputer. Until now, Nvidia’s Tegra CPUs had been used as mobile device processors, and most supercomputers used Intel microprocessors.

The supercomputers will use a hybrid architecture that combines the fast serial (one thing after another) processing of CPUs with the parallel (many small tasks at once) processing of GPUs. The Barcelona Supercomputing Center plans to improve its energy efficiency by two to five times, according to the announcement made today at the SC11 supercomputing conference in Seattle.

Of course, in the big picture Nvidia is a long way from knocking Intel out of supercomputers. Intel has 64-bit processors while Nvidia doesn’t, and that’s an important feature for high-end computers. Intel is also using its manufacturing advantages to race ahead with low-power server chips, and it is starting initiatives to use low-power Atom chips in microservers.

The GPUs will use Nvidia’s Cuda programming environment, which lets a GPU perform non-graphics computing tasks. The Barcelona center ultimately plans to deliver exascale-level performance while using 15 to 30 times less power than current supercomputers. Nvidia is the world’s largest independent graphics chip maker, and it has recently made headway in the processor market with its ARM-based Tegra CPUs.

An exascale supercomputer will, at some point in the future, be able to perform an exaflop: a billion billion (10^18) floating-point operations per second. A single flop is the equivalent of taking two 15-digit numbers and multiplying them together. Right now, supercomputers such as the upcoming Titan machine at Oak Ridge National Laboratory are targeting 20 petaflops. A thousand petaflops equals one exaflop, so supercomputers have a long way to go to reach exascale.
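
To put that gap in numbers, here is a quick back-of-the-envelope calculation using only the figures quoted above. It is a rough sketch that assumes, for simplicity, that Titan actually reaches its 20-petaflop target; the variable names are illustrative only.

```python
# Units as described above: a flop rate counts floating-point operations per second.
PETAFLOP = 10**15
EXAFLOP = 10**18  # "a billion billion" = 10**9 * 10**9

# Assumption: treat Titan's 20-petaflop target as its delivered performance.
titan_target = 20 * PETAFLOP

petaflops_per_exaflop = EXAFLOP // PETAFLOP   # how many petaflops make one exaflop
remaining_factor = EXAFLOP / titan_target     # how far a 20-petaflop machine is from exascale

print(f"1 exaflop = {petaflops_per_exaflop} petaflops")
print(f"An exaflop is {remaining_factor:.0f}x Titan's 20-petaflop target")
```

In other words, even a 20-petaflop machine would need roughly a 50-fold increase in performance to reach exascale.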

Alex Ramirez, leader of Barcelona’s Mont-Blanc Project, said that CPUs alone often consume the lion’s share of the energy in a supercomputer, 40 percent or more. He said the Mont-Blanc machine will use the Tegra mobile processors to achieve a four- to 10-fold improvement in energy efficiency by 2014.

Nvidia will create a new hardware and software development kit to enable more ARM-based supercomputing initiatives. The Barcelona version will feature a quad-core Nvidia Tegra 3 ARM-based CPU along with an Nvidia GPU. The center is starting with 256 Tegra CPUs and 256 GPUs. The kit will be available in the first half of 2012. Nvidia is naming the Barcelona center a Cuda “center of excellence,” the 14th such institute.

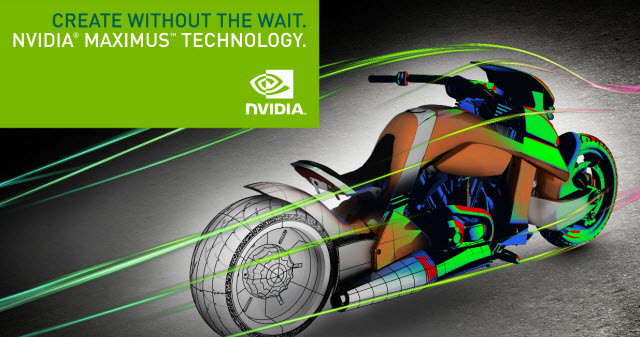

Nvidia is also introducing its Maximus circuit cards (pictured right) for advanced workstations. Maximus can be used to design fancy cars and to tackle other computation-heavy tasks that ordinary PCs can’t handle. The Maximus cards combine graphics and computation in the same system, pairing Nvidia’s Quadro professional workstation GPUs with Nvidia Tesla C2075 companion processors (which are separate computing cards).

Previous workstations forced designers and engineers to do compute-based and graphics-based work separately or offline. Now, with Maximus, they can do both of those kinds of tasks at the same time on the same machine, said Jeff Brown, general manager of Nvidia’s professional solutions group. The workstations will speed up science, engineering and design applications from Adobe, Ansys, Autodesk, Bunkspeed, Dassault Systèmes and MathWorks.

Meanwhile, Nvidia has joined with Cray, the Portland Group, and Caps to create a new standard for parallel programming known as OpenACC. The standard is meant to make it easier to accelerate applications that use both CPUs and GPUs. OpenACC should benefit programmers working in chemistry, biology, physics, data analytics, weather and climate, intelligence, and other fields. Initial support for OpenACC is expected to be available in the first quarter of 2012.

CloudBeat 2011 takes place Nov 30 – Dec 1 at the Hotel Sofitel in Redwood City, CA. Unlike other cloud events, we’ll be focusing on 12 case studies where we’ll dissect the most disruptive instances of enterprise adoption of the cloud. Speakers include: Aaron Levie, Co-Founder & CEO of Box.net; Amit Singh, VP of Enterprise at Google; Adrian Cockcroft, Director of Cloud Architecture at Netflix; Byron Sebastian, Senior VP of Platforms at Salesforce; Lew Tucker, VP & CTO of Cloud Computing at Cisco, and many more. Join 500 executives for two days packed with actionable lessons and networking opportunities as we define the key processes and architectures that companies must put in place in order to survive and prosper. Register here. Spaces are very limited!

VentureBeat's mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.