WOODINVILLE, Wash. — Inside a big home in the forested suburbs of Seattle, augmented reality glasses aren’t just an illusion. They’re about to become real products, dubbed CastAR, that can deliver games and other visual apps with cool 3D effects.

Inside the house, which is jammed with pinball machines and prototypes, a team of former Valve employees, led by chip engineering wizard Jeri Ellsworth and game programmer Rick Johnson, has created the startup Technical Illusions. They’re designing inexpensive augmented reality glasses that they believe will create a new level of excitement for the category.

While game publisher and Steam game distributor Valve chose not to pursue augmented reality, Technical Illusions spun out and then received huge validation for its efforts when it raised $1 million in funding on Kickstarter in November.

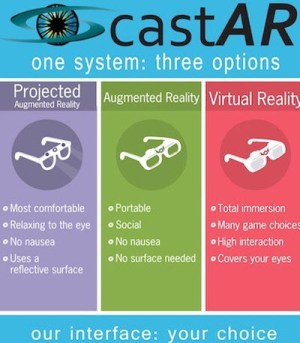

The CastAR glasses will be able to project 3D holographic images in front of your eyes, so you can either feel like you’re seeing a virtual layer on top of the real world or feel like you’re immersed inside a game world. The system works with the glasses and a sheet of special material called “retro-reflective.” Technical Illusions plans to deliver products to its Kickstarter supporters this year.

We caught up with Ellsworth and Johnson at their (temporary) headquarters on our recent trip to Seattle. Here’s an edited transcript of our interview.

GamesBeat: Tell us how the CastAR augmented reality system is going to work.

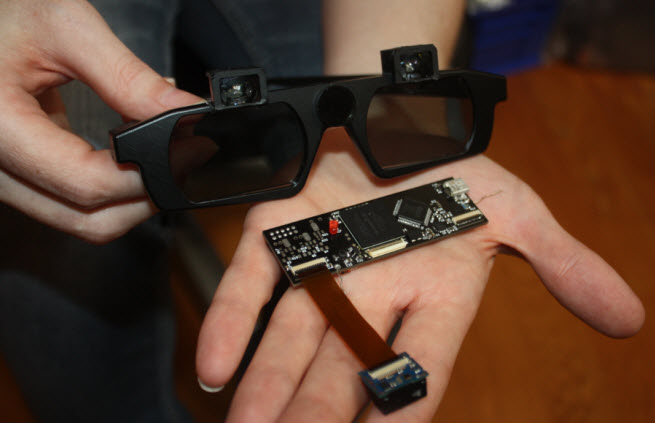

Rick Johnson: On the glasses, there are two micro-projectors. The final version will be 1,280 by 720 per eye. They shine out onto the surface. It’s the same concept as watching a movie on a movie screen. The surface is a special material called retro-reflective. It’s designed to bounce almost 100 percent of the light back to the source. If you look at the material under a microscope, it’s made of these tiny microspheres. Light enters each sphere and comes right back.

This allows for several advantages. Your eyes are focused naturally, so you don’t have any eye strain or near-eye optics. It allows for multiple people to use the surface simultaneously. If I’m shining out and you’re next to my shoulder, you’re not going to see what I’m seeing. You can play a different game, or the same game, or the same game from a different perspective. It’s a collaborative multi-user environment.
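To make the “comes right back” property concrete, here is a minimal geometric sketch. It assumes an idealized corner-cube retroreflector (three mutually perpendicular mirrors), which is a different geometry from the microspheres Johnson describes but has the same ideal effect: the outgoing ray is the exact reverse of the incoming one. That is why an image projected from one pair of glasses returns to that wearer and not to the person at their shoulder. The function names are illustrative only.

```python
# Sketch: ordinary mirror vs. an idealized retroreflector.
# Assumption: a perfect corner-cube reflector; real CastAR material uses
# microspheres, but ideally both send light back along its incoming path.

Vec = tuple[float, float, float]

def mirror_reflect(d: Vec, n: Vec) -> Vec:
    """Ordinary mirror: r = d - 2 (d . n) n, where n is a unit normal."""
    dot = sum(di * ni for di, ni in zip(d, n))
    return tuple(di - 2 * dot * ni for di, ni in zip(d, n))

def corner_cube_reflect(d: Vec) -> Vec:
    """Bounce off three perpendicular mirrors with normals along x, y and z.
    Each bounce flips one component, so the net result is -d."""
    for axis in ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)):
        d = mirror_reflect(d, axis)
    return d

# A ray leaving a head-mounted projector and heading down toward a table.
incoming = (0.3, -0.5, -0.81)
print(mirror_reflect(incoming, (0.0, 0.0, 1.0)))  # flat mirror: keeps travelling away
print(corner_cube_reflect(incoming))               # retroreflector: straight back
```

With the flat mirror, only the vertical component flips, so the light continues off to the side where someone else could catch it; with the retroreflector, every component flips and the light heads back toward the projector, which sits right next to the wearer’s eye.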

On the glasses is a cell-phone-style camera. It’s tracking infrared LED points in the physical world. The camera has special hardware that Jeri developed that processes the image sensor’s output, so what comes out is point data. That makes it very efficient to transfer a small amount of data to the PC, which runs the final world solver.
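The idea of reducing a camera frame to “point data” before it ever reaches the PC can be sketched in software. This is a hypothetical illustration, not Technical Illusions’ on-chip design: it assumes an 8-bit grayscale infrared frame, a fixed brightness threshold, and 4-connected blobs, and it returns one centroid per bright LED spot.

```python
# Hypothetical sketch: reduce an IR frame to LED centroids so only a few
# (x, y) points are sent to the PC instead of the whole image.
# The threshold, frame format and function name are assumptions.

def led_centroids(frame: list[list[int]], threshold: int = 200) -> list[tuple[float, float]]:
    """Return the (x, y) centroid of each 4-connected blob of bright pixels."""
    height, width = len(frame), len(frame[0])
    seen = [[False] * width for _ in range(height)]
    centroids = []
    for y in range(height):
        for x in range(width):
            if frame[y][x] < threshold or seen[y][x]:
                continue
            # Flood-fill this blob with an explicit stack, collecting its pixels.
            stack, xs, ys = [(x, y)], [], []
            seen[y][x] = True
            while stack:
                cx, cy = stack.pop()
                xs.append(cx)
                ys.append(cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and not seen[ny][nx] and frame[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        stack.append((nx, ny))
            # One point per LED: the average pixel position of the blob.
            centroids.append((sum(xs) / len(xs), sum(ys) / len(ys)))
    return centroids

frame = [
    [0,   0,   0, 0, 0,   0],
    [0, 255, 255, 0, 0,   0],
    [0, 255, 255, 0, 0,   0],
    [0,   0,   0, 0, 0, 250],
]
print(led_centroids(frame))
```

Here a 24-pixel frame shrinks to two coordinate pairs. A real sensor frame is far larger, which is where doing this step in dedicated hardware pays off.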

The overall system – the projectors, the tracking – is very low-wattage. You can run it in a mobile environment, just have it tethered to your phone and walk around using it that way. In addition, we have two input devices, which we call the magic wands. They provide three-dimensional input into the world, and they also have joystick buttons.

There’s a radio frequency identification (RFID) rig that sits underneath the surface. Anything with an RFID tag can be tracked across that surface with centimeter-level accuracy and uniquely identified. You can put down Magic: The Gathering cards to spawn land or a creature. You can place Dungeons & Dragons figures and augment them with your stats. You could play Warhammer – since we know all the distances, you could have the computer calculate fog of war, firing ranges. You just worry about strategy at that point.
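Johnson’s Warhammer example rests on a simple point: once the RFID grid reports where each tagged miniature sits, firing ranges and fog of war reduce to distance checks. The sketch below assumes positions in centimeters keyed by tag ID; the tag names, ranges and helper functions are made up for illustration.

```python
import math

# Sketch: with every tagged figure's position known (in centimeters),
# range and visibility rules become simple geometry.
# Tag IDs, distances and function names are hypothetical.

Positions = dict[str, tuple[float, float]]

def in_range(positions: Positions, shooter: str, max_range_cm: float) -> list[str]:
    """Tags within max_range_cm of the shooter (excluding the shooter itself)."""
    sx, sy = positions[shooter]
    return sorted(
        tag for tag, (x, y) in positions.items()
        if tag != shooter and math.hypot(x - sx, y - sy) <= max_range_cm
    )

def visible_enemies(positions: Positions, friendly: set[str], sight_cm: float) -> list[str]:
    """Fog of war: an enemy is revealed if any friendly unit is within sight_cm."""
    return sorted(
        tag for tag in positions
        if tag not in friendly
        and any(tag in in_range(positions, f, sight_cm) for f in friendly)
    )

positions = {"marine-1": (10.0, 10.0), "ork-1": (40.0, 50.0), "ork-2": (100.0, 10.0)}
print(in_range(positions, "marine-1", 60.0))
print(visible_enemies(positions, {"marine-1"}, 60.0))
```

The marine is 50 cm from the first ork and 90 cm from the second, so only the first is in range and revealed at 60 cm. The players never measure anything by hand, which is the “you just worry about strategy” point.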

The final thing is the AR/VR clip-ons. Those fit on top of the glasses. Through a series of optical expansions, they turn it into a full VR device, as well as an AR device, both of which don’t require the surface.

GamesBeat: Can you talk about how you got started, how you began this research? Was it something you pitched to Valve to get it going?

Jeri Ellsworth: The backstory is that I was hired by Valve to help them create their hardware R&D department, around 2011. We were exploring all different types of gaming experiences, input and output devices, and we were very interested in virtual reality and augmented reality. We quickly tested a bunch of AR and virtual reality (VR) rigs. We identified issues with headaches and motion sickness.

I was working on trying to solve the headache issues that people get with near-to-eye displays. I had a workbench set up with projectors and reflectors and lenses and stuff. I accidentally put a reflector in this test rig backwards, and I was trying to look into it. Instead of projecting it into my eye, it was projecting it into the room. We had a piece of this reflective material in the room for a different experiment, and I saw this beautiful image show up in the reflector. I’m like, “Wow, that’s interesting.” I grabbed the material and started looking at it, and I realized, “Wow, this solves a lot of issues with eyestrain. You can still see through the glasses and the material isn’t expensive.” So it was a happy accident that we ran into this property.

GamesBeat: Why did you even think about VR, given that it had been so discredited in the ’90s? The conventional wisdom was that it was never going to be ready.

Ellsworth: There are points where technology converges and things become more practical. Because of cell phones and the miniaturization of cameras and displays, it started to get more interesting. At that point in the project, our objective was just to see if we could heighten gameplay and make it better. We were exploring everything, both input and output devices. Then Rick came on board to help with the project, because I was doing the hardware, but we didn’t have any game support.

GamesBeat: What was your background leading to this?

Ellsworth: I’m an electrical engineer. I’ve done a lot of toy designs and produced custom ASICs (application-specific integrated circuits), which allow me to make really low-cost devices. That was attractive to them as far as hiring me. I could help them create products.

Johnson: My first professional video game was in April of 1990, so I’ve been doing programming, lead programming, technology programming, all sorts of stuff. At Valve I did the graphics technology for DOTA. I was one of the three people that started the Linux revolution at Valve.

GamesBeat: With SteamOS, or before that?

Johnson: This was the initial effort to get games up and running, to get the OpenGL drivers running, and to get Steam ported. SteamOS was just getting started when I left. Doing all that simultaneously, I got to know Jeri and the project she was working on. She didn’t have any software support, so I worked in my spare time to create this prototyping environment, so we could create samples and ideas and see what they looked like in AR. Figuring out what games work and what don’t, how to handle input, how to present data to the users, all that sort of thing. We started working together and all this spawned from there.

Ellsworth: I knew we were on to something big when people started coming to the lab and hanging out for three, four hours at a time, playing with our prototypes. Rick had put together a really simple multiplayer game – which he hates, because it had a lot of bugs – but it used the wands. Multiple people could sit around the table with a flat piece of reflector, and it had a scrolling maze that would move around depending on where you moved your character with the wand.

The fun part of the gameplay, what was so addictive, was that you could grief the other players. There were zombies and other stuff running around this maze, and you could get the other player to run into zombies by doing things. But you could also look at each other across the table and kind of tell what they were doing, because they’d be moving their wands in different ways. You could counter what they were doing if you could tell what they were up to, even if they were five meters away in this virtual maze.

Those prototypes were ridiculously heavy, not even close to the miniaturization we have now. It was just giant, these big things that sat on your head. That was the first prototype, there. It was just to prove the concept. We shrunk it a bit and got it down, but it was still big. But we knew we were on to something, because all these different game experiences were super addicting. People were disrupting us, because they’d come down wanting to play.

Johnson: Jeri picked up these horrible sounds from Left 4 Dead, the zombie deaths. Every now and then there was a bug in the sound system, where the sound would take five times as long to play. It was this low-pitched AAAAAAGH! Here I am at two in the morning, I’m trying to do pixel shader coding, and people are talking and making noise while these crazy sounds play. Finally I’d have to kick them out.

Ellsworth: We were working late into the night, because everyone was so excited about this. People would come down about six or seven o’clock when the rest of Valve was getting done, and they’d be there all evening. We’d all go out to dinner and come back, and they’d be like, “Hey, can we do another round of Zombie Maze?”

GamesBeat: Was this kind of self-assembled? Would you just grab people, or would people gravitate to your project?

Johnson: In this case, it was a little more difficult to attract people. Introducing hardware to a software company — you can’t put out hardware and patch it. The iterations of going from the giant head crab to something that’s actually comfortable, it’s a long road. Sometimes it’s hard to convey that it takes time for these things to iterate.

We found ourselves, mostly, with other hardware people developing it. We were constantly inviting people down to take a look at it.

GamesBeat: Did you have to bring them in, or were they already at Valve? Did you have to hire new people?

Ellsworth: I recruited most of the hardware team. I was brought on to do that.

GamesBeat: For this project, or for this broader laboratory operation?

Ellsworth: The whole thing. We were all helping each other out on different projects.

Johnson: Historically, for the most part, this has just been Jeri and me, up until the completion of the Kickstarter. Now we have some capital to hire people who can help grow the business and take some of the load off us.

Ellsworth: We’re almost two years into it. Our time on it at Valve was actually very short. We exited Valve in February of last year, and it was still the big prototypes.

GamesBeat: Was that part of a strategic decision Valve made, to do VR as opposed to augmented reality?

Ellsworth: It’s complicated. It wasn’t necessarily the project at all. It was just a changing direction inside the company. Fortunately, Gabe [Newell, Valve’s managing director] just let us have it all. It wasn’t exactly easy to get it past the lawyers, but we were able to negotiate, free and clear, all the technology. Part of the tech was developed at Valve, but a lot of the novel stuff was floating around in our heads just before we left. In fact, we’ve developed a lot of the tracking technology, a lot of the image stabilization, all this stuff, outside of Valve. We’ve been working to protect our IP ever since.

Johnson: We have a full-time person who’s working to protect what we’ve already done, and also the progression into the future. Patents are a necessary evil, unfortunately.

Ellsworth: We see the big picture of where this can go. We know the trends in the way you can do image processing and do tracking and image stabilization. We’re just taking baby steps into it now, but we’re developing the IP to go forward, so that we can do the ultimate in walkaround AR. Surface-free.

Johnson: Essentially what you see in movies. The processing power and the hardware power are still a decade away, though. In order for us to be successful, we’re taking logical steps to get there, but we don’t want to bite off more than we can chew. What we’re doing now is finding a good way to blend the physical world with the virtual world, by using the wand and the RFID grid. Those are our first steps.

We have concepts of how we want to progress the technology going forward. As we continue with the company, we’ll progress that way.

GamesBeat: How did you come up with a business in the meantime? Can you get something out more quickly that will satisfy people’s curiosity while you’re still working toward this 10-year horizon?

Ellsworth: Just the early experiments, how engaged people were with the simplest demos — it’s a whole new way to interact with digital media. Part of our customer base found us, just as we showed our early demos at Maker Faire. We were stunned.

We chose Maker Faire because we thought the crowd would be sympathetic. It’s the crowd I run with. I’m an engineer, maker type. We showed up there with four workstations and set up several very simplistic game experiences, without any real announcements. The first couple of people dribbled into the booth, took a look at it, and didn’t want to put the system down. Then they’d go off and come back with five of their friends. Within 30 or 40 minutes of the doors opening, we had a 45-minute to hour-long line outside our booth.

GamesBeat: So the Maker movement is one of these trends that’s helping you along?

Johnson: That and Kickstarter. Kickstarter has turned the traditional investment route completely backwards. We can go develop our product, show it off to the public, and prove it out through Kickstarter as people vote with their money to make it successful. Now you have evidence that your product is desired by people. You can show that to investors and go that route later on, rather than needing money first before even coming up with a concept.

Ellsworth: Before Kickstarter, we showed thousands of people. We knew this was something you had to experience to understand. We went to almost a dozen trade shows. It was the same experience over and over again, with these long lines. When we hit the go button on Kickstarter, it was a matter of hours. We hit our funding goal and just took off.

We were trying to get 20 percent in the first couple of days, because that’s what everyone says is enough. If you get to 20 percent, you’ll probably succeed. We got that in the first couple of hours. All the hard work, showing people this was real, paid off.

GamesBeat: The cost of it is pretty low. That probably also makes it attractive to the Kickstarter followers. How is it that you can start so low and cost-reduce this over time? Why is it an inexpensive technology?

Ellsworth: My background is in toy design, where everything is super-cost-sensitive. You get a very small bill of materials to work with when you’re doing any toy. From day one, when we started looking at productizing this pair of glasses, we knew we had to do things to cost-reduce it down.

Our first experiment was like, “How much can we do on one chip to drive two displays?” We made sure that the projectors could be reduced down to one piece of drive circuitry. The tracking we knew was critical. We had to get the tracking offloaded from the computer so we could use cell phones and other inexpensive devices to drive our glasses. So we started with an eight-chip solution, these big boards. Then we slowly integrated each one of those into our FPGA emulator, which emulates what our final chip will be. To get the costs down, in the end it will be a single-chip solution.

The optics were simplified in projected mode. You don’t have to have really complicated optics in the baseline product, so we can offer a sub-$200 pair of glasses to get going for the projected AR mode. With the clip-ons, where you can add the more complicated optics, we’ll have higher price points for people who want to do the extended experiences. Same goes for the RFID grid and the various peripherals.

We’re leveraging the tracking camera we developed for the glasses by putting it into the wand. We know we have sub-millimeter accuracy for your head position. After testing, we proved out the wand in the early days. We knew it was a good input device. But then we were like, “How can we use the same chips we use in the headset in the wand?” That’s what we’re doing. We’re just putting the camera in the wand. It tracks in the same way.

GamesBeat: Have you decided to start with this basic field, rather than something that’s 360 degrees around the user?

Johnson: The final design will be configurable. You could lay it flat like this, or if you want to do a semicircle, you can. When it comes down to it, it’s just cloth. It’s however you want to stretch it out. If a person wanted to buy in bulk from us and build a holodeck, just wallpaper a room, they could.

GamesBeat: Does the software designer need to care whether or not it’s a curved surface, or whether it’s a 360 thing? Does that matter to them?

Johnson: From a rendering perspective, it doesn’t care what shape the surface is. Light goes out, comes back.

GamesBeat: So you’re basically in a 3D world. They’ll build a 3D world and you maneuver through it.

Johnson: Yeah. There are natural configurations that work better. Board games work better in more of a flat environment. If you’re doing first-person shooters or flight simulators, you probably want more of a semicircle. It depends on the configuration and what you want to do.

Ellsworth: We did quite a bit of testing on the ideal starter size. We looked at games, what could be done. One meter by two meters felt like a nice starting size. People’s coffee tables and kitchen tables are the right size for a piece of material about that big.

GamesBeat: You can create an effect that impresses people at that size.

Ellsworth: It balances cost, too, the consumer cost.

Johnson: If you use the clip-ons, you don’t need the surface at all. The way they work is that there’s a visor. When it’s down, it blocks the light, and that becomes a VR mode, just like other head-mounted displays. If it’s up, then it’s augmented.

Ellsworth: There are a lot of applications for iPads where you can put up a marker that’s recognizable by a camera, and then hold up the tablet like a window into a virtual world. But what we’ve discovered is that by putting the displays on your head, so that you don’t have to think about alignment, it becomes a seamless experience.

Johnson: It also gives you a sense of depth. Because you’re rendering from two different projectors, the stereoscopic effect is naturally in the image, rather than being portrayed on a flat screen. When you see this interactive hologram in front of you, and you interact with it in three dimensions, it’s magical. If you reach out and touch something, it’s not really there, but it looks like it should be.
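The depth cue Johnson mentions comes from each eye seeing the scene from a slightly different position. Here is a minimal sketch of that geometry, assuming a 63 mm interpupillary distance and a head pose given as a position plus a unit “right” vector; both are hypothetical inputs, not CastAR parameters. It computes where each eye (and so each projector) sits, then the angle between the two eyes’ lines of sight to a virtual point, which grows as the point gets closer.

```python
import math

# Sketch: per-eye viewpoints and the convergence angle to a virtual point.
# Assumptions: 63 mm IPD, head pose = position + unit right vector (meters).

Vec = tuple[float, float, float]

def eye_positions(head: Vec, right: Vec, ipd_m: float = 0.063) -> tuple[Vec, Vec]:
    """Offset the head position by half the IPD along the right vector."""
    half = ipd_m / 2
    left_eye = tuple(h - half * r for h, r in zip(head, right))
    right_eye = tuple(h + half * r for h, r in zip(head, right))
    return left_eye, right_eye

def convergence_deg(head: Vec, right: Vec, point: Vec, ipd_m: float = 0.063) -> float:
    """Angle between the two eyes' lines of sight to the point, in degrees."""
    left_eye, right_eye = eye_positions(head, right, ipd_m)
    a = [p - e for p, e in zip(point, left_eye)]
    b = [p - e for p, e in zip(point, right_eye)]
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.hypot(*a) * math.hypot(*b)
    return math.degrees(math.acos(dot / norms))

head, right = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
near = convergence_deg(head, right, (0.0, 0.0, -0.5))  # object 0.5 m ahead
far = convergence_deg(head, right, (0.0, 0.0, -2.0))   # object 2 m ahead
print(near, far)
```

Rendering one view from each computed eye position gives each projector its own image, and the difference between the two images is what the brain reads as depth.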

GamesBeat: Is there a retail application here, then? Something outside the gaming industry?

Johnson: I can give you a hundred different applications. [laughs] One of the examples we showed in the Kickstarter was for the medical community. We found an animated GIF of someone doing an MRI scan of their head. It was this series of 20 slices from front to back. You just see it going through there.

Take that data, and now I arrange the 20 slices as vertical 3D planes. All of a sudden, in this environment you can see the full 3D shape of the head. You see all the slices going through simultaneously. You can use the wand to interact with those slices to get a subsection of all that. Taking traditional 2D data that the medical community already has and presenting it in a 3D way creates a unique perspective for how doctors can view data.
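The MRI example is essentially a data-layout trick: take a stack of 2D slices, give each one a depth so they stand as parallel planes, and let a selection pick out a sub-volume. The sketch below assumes slices stored as nested lists, a total depth in millimeters spread evenly across the stack, and a rectangular selection standing in for the wand; none of these details come from the Kickstarter demo.

```python
# Sketch: place front-to-back MRI slices as parallel planes in 3D,
# then cut out a sub-volume, roughly as a wand selection might.
# Depth spacing, image format and the selection shape are assumptions.

Image = list[list[int]]

def place_slices(slices: list[Image], depth_mm: float) -> list[tuple[float, Image]]:
    """Assign each slice a depth so the stack spans depth_mm evenly."""
    n = len(slices)
    step = depth_mm / (n - 1) if n > 1 else 0.0
    return [(i * step, img) for i, img in enumerate(slices)]

def crop(placed: list[tuple[float, Image]], y_min: float, y_max: float,
         rows: slice, cols: slice) -> list[tuple[float, Image]]:
    """Keep slices whose depth lies in [y_min, y_max], trimmed to rows/cols."""
    return [
        (y, [row[cols] for row in img[rows]])
        for y, img in placed
        if y_min <= y <= y_max
    ]

# 20 tiny 3x3 "slices" with distinguishable pixel values.
slices = [[[i * 10 + r * 3 + c for c in range(3)] for r in range(3)] for i in range(20)]
placed = place_slices(slices, depth_mm=190.0)  # 10 mm between planes
sub = crop(placed, 50.0, 70.0, rows=slice(0, 2), cols=slice(1, 3))
print([y for y, _ in sub])
```

In the headset, each placed slice would be drawn as a textured plane at its depth, so all 20 are visible at once instead of flipping by one at a time as in the animated GIF.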

As far as other applications, we talked to an oil guy down in Texas, a geologist. His example went like this: Grandma and Grandpa have a piece of land. Here’s this flat geographical chart with a squiggly line representing the oil deposit. Now, if you did it three-dimensionally, they could see the oil deposit underneath where their house sits. It becomes a much more compelling environment.

For the movie industry, at the executive level, you could say: Here’s a movie set. Here’s where the camera pans are. Here’s where the lighting goes. Here’s where the action will be. The executives could sit around the table, see it, interact with it simultaneously, and make adjustments, rather than building it all the way out.

You could go the opposite end of the scale and build a sound stage of reflective material. Now the actors could wear the glasses and see the CG monsters coming at them. They’d actually interact in a much more seamless environment than just pretending that they see something in front of a green screen.

In aviation, we’re talking to some lieutenants in the Air Force. One of their ideas was, take a regular jet that might be on an aircraft carrier somewhere. Put the retro material on the outside of the cockpit. Now they can wear the glasses inside, sitting in a real jet with the real controls, and use the glasses to project out terrain to the retro material outside the cockpit. Now all of a sudden you have a flight simulator on the real equipment, but it’s very cheap from a hardware perspective, and very portable. They can take the material, wrap it around the outside of a jet, and then go over to a helicopter and do the same. You can do all these experiments and training on the equipment very effectively and cheaply.

Ellsworth: You prototyped that in 15 minutes or so with one of your arcade machines. He had a cockpit arcade machine in his garage. He cut a little bit of retro reflector, put it where the screen was, and hung it over the open sides. You could sit inside of it, look out the sides, and see a game of Asteroids going by. If you looked forward, you’d see it right through the window. It was pretty neat.

The private/public display aspect of it is interesting. We’ve talked with people about some industrial applications, where an educator could see all the steps his students are taking. The students might be trying to assemble some piece of equipment in virtual space.

GamesBeat: So your goal is 2014. Do you have a more detailed timetable?

Johnson: For Kickstarter, we have an earlier preview, which is about 100 pairs of glasses that we’re delivering in the April-May time frame. These are just to get the glasses out to people who are interested in the technology, to begin development early. The bulk of the Kickstarter will be delivered around September. Post-Kickstarter we have visions on how to grow the company and move into retail.

GamesBeat: Is that aimed at more developers than consumers?

Ellsworth: The Kickstarter? It’s kind of across the board. One of the things that resonated big with people was the whole RFID grid mixed with D&D. Board gamers were one aspect of it. We’ve had a lot of interest from educational institutions, using it as a learning device and using it more specifically for AR research. Movie studios and other companies have jumped in, and gamers in general.

A lot of them are just enthusiasts who are finally like, “Wow, AR is at an affordable price and works well. We can jump in and start exploring new worlds that we couldn’t before.”

GamesBeat: Oculus seemed to do a pretty good business just selling to developers for a while. That allows you to go to another stage and have revenue coming in. Does that seem like the path that you guys would follow?

Ellsworth: Units are still selling very briskly on our website. People are interested in getting in line to get units after the Kickstarter stuff comes out this spring.

GamesBeat: Do you have a certain number of hardware spins you think you’ll do before you get to a consumer-grade product?

Johnson: The September timeline is the first revision that’s consumer-grade. Internally we’ll have lots of steps. At GDC, we’ll have probably two different revisions from what you see now. We’ll keep on progressing the technology to make it smaller, better optic choices, progressions like that.

Ellsworth: We have a road map with certain check points we have to get to as far as miniaturizing electronics and getting our sample optics to increase the fidelity. By the end of the summer, that’s when we do the manufacture for our first X number of units that go out.

Johnson: People knew where we were going, but no one had seen the HD yet. All the demos were just SD.

Ellsworth: In the days prior to the Kickstarter, it was difficult getting the manufacturers of some of these components to work directly with us. Then we make this huge splash on Kickstarter, and now we have them banging on our door. “Please use our display! We’ll give you design services!” That’s great. It’s helping us a lot.

GamesBeat: Is something like a $99 product visible to you right now, as far as how quickly you could get there?

Johnson: The price points we chose were based on Kickstarter volume levels. Full retail would allow us to do volume purchases and things like that. One of the things we had early on in our design process is that there are different avenues we could take the glasses as far as components we could put out. You could imagine a kids’ product where we’re using a lower-resolution projector or different combinations of things that could lower the price point. There’s also the opposite direction, with higher-resolution panels and more precise components that we could do for higher-end use cases.

GamesBeat: Do you see augmented reality as a very different market from virtual reality? Is there room for the different players here?

Johnson: Everyone complements everyone else. It’s not like AR or VR is going to replace watching TV on the couch. What you’ll find is that, initially, you’ll see applications that barely take advantage of either space. As people get to the hardware and are able to experiment and try crazy new ideas, you’ll see unique things for AR and VR alike. It’ll be a growth market for both.

GamesBeat: How do you look at the software that you need to come up with? What do you have to do to get that content going?

Johnson: One of the first things I did over the summer was integrate this into Unity. It’s the most popular engine out there right now. It’s also one of the strongest engines for mobile devices. The goal for the Unity integration was to make it as quick and painless as possible. Angry Bots, the joystick demo, is a sample game that comes with Unity. It takes about three minutes to get up and running on our system. We’ve had game jams with other people that use Unity, so we can understand what they’re doing and further develop that plug-in.

There’s a low-level SDK, and if you have a custom app or a custom engine, you can talk to that. Integration is fairly easy in that regard. Likewise, now that we have Brian [Bruning] and Christina [Engel] on board, we’re starting to engage developers and get them exposed to the technology, understanding what they’re doing and how they can use it, building up the environment in that regard.

On the VR side, Valve announced the OpenVR Initiative. If you remember the early days of 3D technology, you had OpenGL, the OpenGL mini-driver, the Glide driver. You had all these different APIs from different vendors. This is an effort to make sure games aren’t written only for the Oculus or only for us. You write it for OpenVR. Now you have this giant library of games that work on multiple devices.
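The point of a vendor-neutral API is that the game is written once against a shared interface and each headset supplies its own backend. The sketch below only illustrates that pattern: the class and method names are invented for this example and are not OpenVR’s actual API, and the second headset and its resolution are hypothetical. The 1,280-by-720 figure is the per-eye resolution quoted earlier in the interview.

```python
from abc import ABC, abstractmethod

# Hypothetical illustration of the "write once, run on many headsets" idea.
# These names are invented for this sketch; they are NOT OpenVR's API.

class HeadDisplay(ABC):
    @abstractmethod
    def name(self) -> str: ...

    @abstractmethod
    def eye_resolution(self) -> tuple[int, int]: ...

class CastARBackend(HeadDisplay):
    def name(self) -> str:
        return "CastAR"

    def eye_resolution(self) -> tuple[int, int]:
        return (1280, 720)  # per-eye resolution quoted in the interview

class OtherHeadsetBackend(HeadDisplay):
    def name(self) -> str:
        return "OtherHeadset"  # hypothetical second vendor

    def eye_resolution(self) -> tuple[int, int]:
        return (1000, 1000)  # made-up value for illustration

def start_game(display: HeadDisplay) -> str:
    """Game code depends only on the shared interface, not on a vendor."""
    w, h = display.eye_resolution()
    return f"Rendering {w}x{h} per eye on {display.name()}"

for backend in (CastARBackend(), OtherHeadsetBackend()):
    print(start_game(backend))
```

Adding support for a new device means writing one more backend class; the game code in `start_game` does not change, which is the “giant library of games that work on multiple devices” Johnson describes.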

GamesBeat: What’s an example of an experience that’s written for this, versus taking something that’s ported for somewhere? What more could you do to impress people if you’re going beyond just a port?

Johnson: That’s part of what we’re doing right now for GDC. The others in the room there are working on some unique experiences that we’ll show off for GDC.

GamesBeat: And that maybe involves things like the wand or the RFID grid?

Johnson: It’ll involve different input devices and different control mechanisms and different orientations of the surface.

GamesBeat: Are you expanding very much as far as the size of the company?

Ellsworth: Yeah, we’re staffing up on the production side and the engineering side. That’s been one of our challenges since Kickstarter.

GamesBeat: If I were Valve, I’m not sure I would let you guys go. Have they asked for a stake in the company at all?

Ellsworth: No. Like I said, most of the people in the company hadn’t seen what we were doing. A handful of people came down and played it all the time, but maybe only five percent of the company had seen our prototypes. It was a big leap for them. They were a software company. This was that big head crab thing. I was doing a lot of hand-waving – like, “These can be miniaturized!” But that leap was difficult.

GamesBeat: You’re going to have to get a bigger house, then.

Johnson: We’re being frugal [laughter]. Once we get another infusion of cash, getting a proper office space and kicking everyone out of my house is the next big goal.

VentureBeat's mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.
