When the leaders of the tech industry get up on the big stages at the Consumer Electronics Show, you can learn a lot. I’m at the big tech trade show in Las Vegas, where face-to-face interaction can still yield some insights. I’ve collected some of those insights and looked for trends at CES 2017, which remains the best crystal ball to see the future of technology.

It’s easy to miss things amid the 165,000 people, and no one can cover all of the 2.6 million square feet of exhibit space. But after more than 20 of these shows, I’ve trained myself to look for patterns. Here’s a list of 10 memorable moments, gadgets, ideas, and trends that made me think during the show.

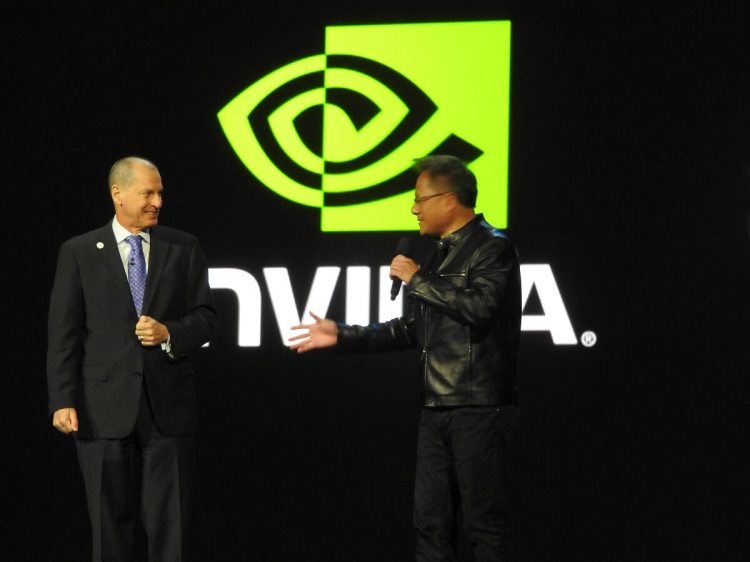

A.I. is becoming pervasive

Above: Nvidia has partnered with Audi on A.I. cars.

John Curran, managing director at Accenture, predicted that A.I. had the chance to become the story of the show. And he was right. Hundreds of companies announced integration with Amazon’s Alexa and Google Assistant, two technologies that use A.I. and the cloud to improve voice recognition and create a more natural human-machine interface.

In 1995, the error rate for speech recognition was 100 percent. By 2013, it was 23 percent. And now, in 2017, we essentially have parity between humans and computers in speech recognition, said Shawn Dubravac, analyst at the Consumer Technology Association, during a talk at CES.

Jen-Hsun Huang, CEO of Nvidia, credited the advance in A.I. and voice recognition, after decades of failures, to the graphics processing unit (GPU). He said that before the programmable GPU (which debuted in 1999), central processing units (CPUs) were like sports cars, good for processing a lot of instructions.

But they were not useful in solving A.I. problems. The GPU came along and Nvidia made it programmable for tasks beyond graphics. It was now more like a truck, able to process an enormous amount of data at once. The machine-learning software creators recognized this, and they used GPUs to process the huge amount of data necessary to train a neural network. This truck paid off in huge improvements in neural networks for tasks such as voice recognition.

“Part of it was destiny. Part was serendipity,” Huang said in a small press gathering. “The deep neural network is computationally a brute force solution. It is simple and elegant but only effective if you use an enormous amount of data. When the GPU and deep learning came together, it was serendipity.”

Huang said his company is working with more than 1,500 A.I. startups now. Those startups have a chance at life because it is possible to train neural networks for many different kinds of pattern recognition tasks in many different applications using powerful GPU technology and machine-learning software. They can layer better A.I. across one problem after another, from the smart Nvidia Shield TV set-top box to self-driving cars.

Voice control is only one of the new interfaces

Above: Rick Bergman, CEO of Synaptics.

The triumph of better voice controls is only one of the ways that humans can better interact with machines. Rick Bergman, CEO of Synaptics, the maker of touchscreen sensors, believes his company’s larger job is to be a visionary for the human-machine interface. And he foresees some big advances as each company figures out how to best apply technology to solving problems for consumers, who want cheap and practical solutions.

It’s easy, for instance, to appreciate the value of the touchpad on a laptop. You can swipe across to move your mouse cursor. But how many of us know that you can use two fingers to swipe down and scroll down a page? We can appreciate the basic value of a touchpad, but few of us will ever take the trouble to fully exploit it.

“We found it’s hard to train people to do new things,” Bergman said.

I thought about that as I used my eyeballs to control the cursor and targeting in a series of new computers with Tobii’s eye-tracking technology built into them. This is a neat trick for targeting multiple zombies at once in a shooting game. But it’s going to be quite hard to change my habits and use my eyes instead of my hands to interact with a computer.

On the other hand, Synaptics has a cool technology that allows it to identify a fingerprint and authenticate a user through the touchscreen glass. That means that instead of pressing the Home button on your iPhone, you can press any spot on the touchscreen to authenticate yourself on your smartphone. With this technology, Apple could get rid of the Home button altogether, and we could all get used to interacting with our smartphones in a slightly different way.

The non-tech companies take technology to the masses

Above: Carnival’s Ocean Medallion wearable makes it easy to tailor a guest experience on a cruise ship.

A.I. is a tool that can be used by everyone, and that’s one reason why the non-tech companies are showing up at CES. Car companies were the first ones to show up. The appliance makers are out in force to trot out Internet of Things products. And now we have a keynote speech from Arnold Donald, CEO of Carnival, the world’s largest cruise ship company.

He introduced the Ocean Medallion wearable at CES as a platform for everything including unlocking your cabin door, paying for goods aboard the ship, and keeping track of your kids. Donald isn’t from a tech company, but his presence at CES shows how technology has spread into all forms of business. Once the tech companies create the foundations, it’s up to the non-tech businesses to make it mainstream and use it to affect the lives of billions of consumers, said Gary Shapiro, CEO of the CTA, in an interview with VentureBeat.

The Internet of (insert adjective here) Things

Above: Samsung Family Hub 2.0 is spreading the smart fridge everywhere.

I’ve come to believe that the Internet of Things is an inevitable trend, where companies use processors, sensors, and connectivity to make everyday objects smarter and more useful. But that doesn’t mean that every one of them is going to be useful. As Samsung and LG described their new washers and dryers, I loved how these connected devices could let you know when your load was done or when you needed more laundry detergent.

I also knew that I wasn’t going to buy them anytime soon. That’s because the reality today is that this is the Internet of Expensive Things. I’m not going to pay hundreds of dollars more to get these appliances, at least not as long as they add extra costs. I don’t want a smart pet feeder, if it means it costs $200 compared to the $5 pet bowl it replaces.

I can foresee a consumer disconnect for many things the Internet of Things vendors are trying to solve. We don’t want the Internet of Hackable Things. We don’t want the Internet of Stupid Things.

Chip competition thrives in 2017

Above: AMD’s Ryzen debuts for desktops in the first quarter of 2017.

In chips, you can compete with a better manufacturing process (which can make your chips smaller, faster, and cheaper) or with a better architecture (which allows your chip to do more things at the same time in an efficient way). Those are a couple of good rules to remember, and it means a lot when one company says or doesn’t say something about either one.

Lisa Su, CEO of Advanced Micro Devices, believes her company has a rare opportunity to take on the competition in 2017 as it launches two new architectures, one in processors and one in graphics. Nvidia is busy transforming itself into an artificial intelligence company, maneuvering to stay ahead of Intel in new battlegrounds such as processors for self-driving cars. In doing so, will Nvidia take its eye off the graphics chip market? In that market, Nvidia competes with AMD, which unveiled its Vega graphics architecture at the show.

Vega-based graphics chips will appear in the coming months, but Nvidia’s Huang did not announce any new chips or follow-on to last year’s Pascal architecture during his keynote speech at CES. Huang believes Nvidia will stay competitive, but Su thinks that AMD has a chance to regain market share in graphics.

And AMD’s new Ryzen processors, based on its new power-efficient Zen architecture, are poised to launch in the first quarter. Intel debuted its Kaby Lake processors at the show, but those are only slightly faster than last year’s Skylake processors. Brian Krzanich, CEO of Intel, did not describe any new architectures during his press event. And he said that Intel would debut its 10-nanometer manufacturing process by the end of 2017. That’s late. Qualcomm said at CES that its contract manufacturing partner (believed to be Samsung) had begun making 10-nanometer Snapdragon 835 processors.

That means that, possibly for the first time, a mobile processor company might take the lead in switching to a new manufacturing process ahead of Intel. The result: Intel can’t use a process advantage to stay ahead of AMD this year. Su and her colleagues expressed this hope for a rare opening at a dinner at CES. I find it to be plausible. And overall, it means that the market for processors and graphics chips will become more competitive in 2017.