[This piece, written by Microsoft Research’s Mary Czerwinski (pictured below), is the fourth in a series of posts about cutting-edge areas of innovation. The series is sponsored by Microsoft. Microsoft authors will participate, as will other outside experts.]

A lot of research across a large number of companies is going into developing natural user interfaces (NUI). The idea is that by freeing people from keyboard-based interaction, we won’t just make it easier to work with computers, but we’ll enable brand new experiences that aren’t possible today.

Touch and hand-gesture technologies are already seeing good market reception in a number of smartphones and in Nintendo’s Wii. Other interface approaches gaining traction include speech recognition, which has been available for years and continues to improve; the ability of a computer to recognize real-world objects, as with Microsoft Surface; and whole-body movement, which is only just emerging in products like the recently announced Project Natal, which uses 3D cameras to translate user motion into commands.

But those types of interfaces could be just the tip of the iceberg. A whole new set of interfaces is in the works at various stages of research and development. At Microsoft Research, for example, I have colleagues working on tongue-based interaction, bionic contact lenses, a muscle-computer interface, and brain-computer interaction.

A muscle-computer interface would allow users to interact with their computers even when their hands are occupied (e.g., carrying a briefcase or holding a phone or a cup of coffee). Simple, unobtrusive straps worn on a user’s forearm carry sensors that interpret muscle movement and translate it into commands. Exerting pressure in the form of a pinch against the mug or phone in a user’s hand, for example, could signal the computer to start or stop a musical selection (device prototype pictured).

Of course, the most natural user interface of all would be one in which the computer can read your mind with no effort on your part. This is the promise of research into brain-computer interaction. Gaming is one of the first areas where we could see this type of interface come into play. In fact, a company called NeuroSky already offers some level of brainwave sensing for game play. But brain-computer interaction could do much more than operate games. At Microsoft, for example, we’re thinking about how a computer could tailor the information it presents based on a user’s state. If a user is talking, for instance, the information could be relayed spatially rather than in text or speech. And if a user is deep in thought, the computer could hold back email alerts and phone calls. Needless to say, though, it will be a while before you can buy a brain-activated Windows Phone at Best Buy!

Tongue-based interaction could enable users who are paralyzed but still have control of their eyes, jaw and tongue to control a computer with tongue gestures. The idea is to sense tongue movement with infrared optical sensors embedded in a retainer worn inside the user’s mouth.

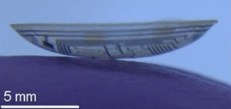

The bionic contact lens project aims to let contact lenses continuously perform blood tests on an individual by sampling the fluid on the surface of the cornea, without the need to draw a blood sample. The lens could eventually analyze the fluid and send the results wirelessly as part of a healthcare monitoring program. The project still has a way to go, but researchers at Microsoft Research are collaborating with the University of Washington to make bionic lenses a reality.

Microsoft’s Craig Mundie has also talked about designing computers that are contextually aware — that is, computers that use sensors to gain awareness of what’s going on around them and take action or make suggestions based on that input. The example he gives is of a robotic receptionist, created by Microsoft Research, that Microsoft employees could use to book shuttle requests between buildings. Users would interact with it much the same way we currently interact with avatars in a game environment. As sensors become cheaper and more accurate, we get closer to enabling this kind of capability.

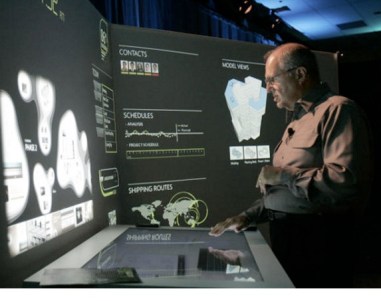

And finally, there’s a new form factor Mundie talks about: instead of the traditional small, medium or large screens (i.e., phone, computer and TV), the new form factor will be the room you’re standing in.

Be sure to see the previous articles in this series on the future of interface:

How phones emerged as main computing devices, and why user interface will improve

Put your finger on it: The future of interactive technology

“Touch” technology for the desktop finally taking off

Mary Czerwinski is a Research Area Manager of Microsoft’s Visualization and Interaction (VIBE) Research Group. Her research focuses primarily on novel information visualization and interaction techniques across a wide variety of display sizes. She also studies information worker task management, multitasking, and reflection systems. Her background is in visual attention and user interface design.
