Facebook and, to a lesser extent, Google have entirely too much control over the news most people see. Good thing Facebook and Google are cracking down on “fake news sites,” right? After all, fake news on Facebook changed the election results (maybe).

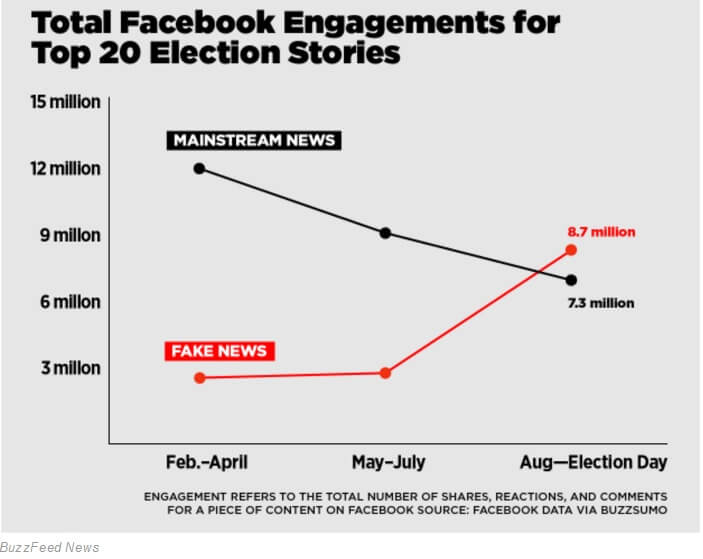

One of the more damning stats about fake news on Facebook came from the increased engagement of “fake news” compared to “real news” in the time approaching the U.S. presidential election. Ironically, this research came from BuzzFeed, a media outlet that started years ago as mostly clickbait and quizzes and has gone on to offer an amazing newsroom helmed by Ben Smith.

The piece in BuzzFeed was well written, level, and reasoned, but it also missed the mark. “Engagement” covers a lot on Facebook. For the BuzzFeed article, engagement means a reaction (formerly a Like), a comment, or a share. Because the definition is so broad, it counts everything from an “angry” reaction to a comment linking to Snopes or a share captioned “look at this sensationalized crap!”

Sometimes it seems like we all got together and decided Facebook was our appointed benevolent information dictator. As though it was Zuckerberg and Co.’s mandate to save us from information overload by determining with ever-wise algorithms what we get to see and what we never do. But it shouldn’t be Facebook’s job to filter news. We need to do that ourselves.

What about the watchdogs?

The risk of being labeled fake is a problem. And not just for BuzzFeed, the New Yorker, or Time Magazine, which publish actual news along with content that is satirical or simply lighthearted. Arguably a “not news” label would be a bigger problem for publications sustained by opinion editorials and analysis. Such labeling could lead to fewer watchdogs having a voice.

One of my favorite publications is Reason Magazine. It offers news and reporting but primarily runs op-eds and well-researched (but subjective, libertarian-leaning) analysis. If you think Reason isn’t a credible outlet, research the number of times major publications have published retractions because of reporting from Reason.com. It would be a shame if The New York Times lost the peer review of publications like Reason and even The Daily Show and went down a road of largely sensationalized news that drives clicks while little publications end up labeled “not news” by an algorithm.

I’m sure Reason wouldn’t be first on the chopping block of fake news sites. I use it as an example because many smaller publications (smaller = anything not The Atlantic) have broken huge stories that later gained mainstream media coverage, often due to social media attention. A number of small news outlets that have broken news on everything from local government scandals to the Dakota Access Pipeline protests could easily be called fake. The Daily Show has never even claimed to be news, and yet the link above shows former host Jon Stewart rightfully calling out the Times for bad reporting.

And getting back to BuzzFeed: despite its real reporting, it would be possible, even easy, to dismiss anything from a publication where one of the most shared posts of the year was “Can We Guess Your Age and Location With This Food Test?” But BuzzFeed offers real news, and news sites offer non-news. Look at this tweet I sent out over a year ago:

https://twitter.com/masonpelt/status/636937868225548288

Fake news can come from reputable outlets

Sometimes a completely BS story is so juicy that even mainstream news sites pick it up. For example, when the team behind Triumph the Insult Comic Dog posted a casting call for something called “Trump TV”, major news sites took the story and ran with it. Watch Triumph explain below:

This is one of many examples of “real news outlets” mistaking fake news for real news and reporting it. How could an algorithm filter for this type of story? It would be hard — perhaps impossible — because the idea of Donald Trump launching a conservative news outlet is less improbable than Donald Trump selling steaks by mail exclusively at The Sharper Image, or becoming president of the United States, and both of those happened.

Should algorithms censor and filter news?

I say “no.” But anyone who has researched how Facebook works knows that algorithms are already filtering everything you see. My timeline is full of weddings, birth announcements, and “rest in peace” notes for the recently deceased because the almighty algorithm knows I want to know these things.

In that way, Facebook already filters our news. You see content that you’re likely to engage with. That’s why many of my diehard libertarian (deliberate small L) friends were shocked that others didn’t know about Gary Johnson. For better or worse, Facebook puts us into a vacuum of self-affirmation. Filtering out known conspiracy theorists and Russian state-controlled publications won’t change the underlying problem. Social media has a stranglehold on news.

Facebook will filter my news feed whether I like it or not. It has every right to do so. Alex Jones and Michael Moore have every right to start online forums and censor the content posted there. As consumers, all we can do is leave those platforms or find another way to break out of the echo chamber.

Technology can’t save us from technology

I can’t see any effective way for technology to fix the fake news problem, or the infinite loop of self-affirming content Facebook’s algorithms create. I’m not sure a tech solution is possible, because what we’re seeing isn’t a tech problem.

Historically, newspapers have sensationalized news in order to sell papers. People have always found lies that support their worldviews, and most people tend to be friends with those of like mind.

Social networks and the internet may have sped up the flow of information, though, making the ramifications of these problems more apparent. The internet’s democratization of publishing has been both good and bad. It has given watchers a voice that can’t be drowned out by the six big companies that own most media outlets, but it has also given us endless conspiracy theories without a clear origin.

The fix isn’t as simple as just leaving Facebook. Social networks aren’t the root of the problem; they also offer real value. I think about the people I’ve met online, the friendships I have, the perspectives I’ve gotten to hear, and I can’t help but feel the good of social networks outweighs the harm.

For now, and maybe forever, we can’t look to technology to save us from ourselves. If you want out of the echo chamber, it’s up to you to follow news outlets outside of Facebook, to fact-check, and to understand the bias of both the publications you read and the writers.

We live in an ocean of data with many viewpoints, yet somehow we all seem to be swimming in a small pond of ideas that make us feel safe. Each of us has access to more information (and misinformation) on the phone in our hand than the Ptolemaic dynasty had for the 275 years it ruled Egypt. With that kind of power, can we all challenge ourselves to do better than being spoon-fed the headlines our friends and an algorithm think we should see?

If it’s okay with the editor, I’d like to end with a quote from the film The Great Dictator, as it is my hope the internet can someday make this quote come true:

“Let us fight to free the world — to do away with national barriers — to do away with greed, with hate and intolerance. Let us fight for a world of reason, a world where science and progress will lead to all men’s happiness.”

Mason Pelt is a growth hacker, speaker, writer, and founder of Push ROI. Follow him on Twitter: @masonpelt.

VentureBeat's mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.