This post has not been edited by the GamesBeat staff. Opinions by GamesBeat community writers do not necessarily reflect those of the staff.

A common complaint about the video games press is its overreliance on review scores as a means of determining a game's overall quality. Opponents of such a system argue that a reviewer's complex opinion is impossible to sum up in a simple numerical score, whilst supporters point to scores as an easy way of summarizing a lengthy review. Is the importance of scores overstated? Is it right to compare games based upon single numbers? All things considered, does the industry need review scores at all?

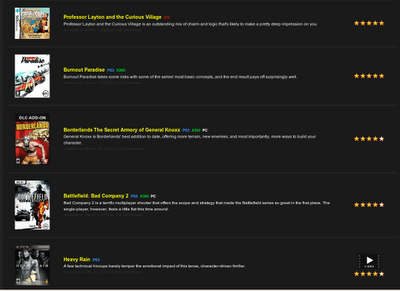

To start with, we shouldn't forget that review scores play a vital role on websites with vast databases containing thousands of games. Scores allow sites to rank games by quality very easily, placing the best-reviewed games at the top and the very worst at the bottom. Such a general feature is invaluable to consumers who may not have the time to read through dozens of reviews to find the cream of the crop; they can simply select their platform of choice, perhaps a genre or two, and instantly find pages of games worth their attention. Scores are thus a very useful feature in themselves, essentially removing the need for a constantly updated 'Top Ten' list, but only when they're taken in such a general context.

What about comparing reviews of the same game across multiple publications, though? The website Metacritic, which provides an 'average' score across dozens of reviews, could be considered the hub of this practice on the internet today. Its influence is so wide-ranging that even huge publishers are starting to take notice. There have been some terrifying reports over the years of bonuses being paid out based on the Metacritic score a title receives.

The first problem with comparing scores in this way is the marked difference in scoring scales. 1Up, for example, reviews its games on an alphabetical scale similar to the one teachers use to grade work. The best of the best receive As and A+s, while more average games have to settle for a grade closer to a C. Similar problems occur with 5-star scoring systems: many people, myself included, would consider a 3-star game to be average, but on a ten-point scale the average tends to sit at around 7 instead.

Ironically, the biggest problems come when comparing scores from ostensibly identical systems, of which the 10-point scale is the most popular. The British magazine 'Edge' regularly receives criticism from forum posters for its seemingly low review scores. In recent years it has given Killzone 2 a 7 and, more recently, Final Fantasy XIII a 5. Whilst part of the trend is admittedly down to reviewer preference, the main issue is that Edge's scoring method is different from every other scoring system out there. Every review site is the same in this respect, each calibrating its scale in its own way, and as such comparisons between them are a little funky at best and a complete waste of time at worst.

So surely if we stick to comparing review scores from the same outlet we can avoid all these difficulties? Sadly this is also not the case, and this exact mistake was made by Ryan Paton on an edition of the late 1Up Yours, where he complained (again about Edge) that Race Driver: GRID had been given a higher score than Metal Gear Solid 4: Guns of the Patriots despite the two being "in different leagues."

In one sense he hit the nail on the head: they are in different leagues as games. One is, after all, a fairly arcade-style racing game, and the other is one of the most expensive action games ever made. The point he missed is that each game's reviewer was scoring on a different scale, judging it against other games of its own kind.

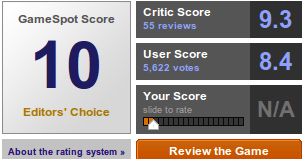

So the fact that GRID got a 9 and MGS4 got a 7 doesn't mean that one is a better game than the other. What it actually means is that one is a better racing game than the other is a stealth/action game. It's a fact that seems to pass many gamers by: Super Mario Galaxy isn't a better game than Assassin's Creed (at least not according to GameSpot), but it IS a better platformer than Assassin's Creed is an action game. When Jeff Gerstmann awarded Tony Hawk's Pro Skater 3 a coveted 10 out of 10 all those years ago (though the once-unattainable nature of that score seems to have gone to pot recently), he wasn't saying it was a perfect game, but that it was a perfect skateboarding game.

I think this point is missed not only by many gamers, but also by a large number of people on the other side of the fence who actually review games. I've lost count of the number of times a little PSN or XBLA title has received a lower score simply because its reviewer held it to unreasonable expectations, seemingly wanting to compare it to the likes of Grand Theft Auto and Mass Effect. Thankfully, this practice has declined in recent years.

What I hope people realize is that there's no inherent problem with placing a review score at the end of your piece. Scores provide a pretty nice summary for skim reading, as well as enabling the ranking features of many of our favorite websites. It's important, however, not to get too tied up in comparing scores at an individual level. They are an imperfect science, and as soon as you start treating them as anything more, their flaws become starkly apparent.