Hazelcast is updating its namesake real-time data processing platform to version 5.4, with a series of changes aimed at operational and artificial intelligence (AI) workloads.

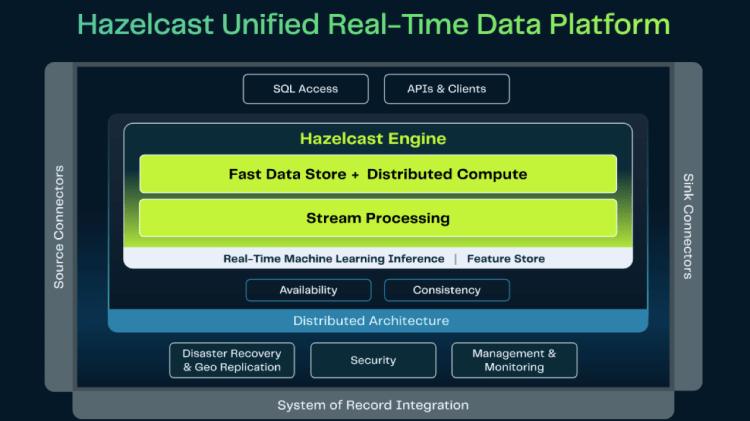

The Hazelcast platform is a real-time intelligent application platform available in both an open-source community edition and an enterprise edition. Its core architecture combines a fast data store with stream processing capabilities that are used for data analytics, business intelligence and, increasingly, machine learning (ML) and AI workloads. As enterprises rapidly embrace AI to drive latency-sensitive decision-making, Hazelcast is evolving its core capabilities in the new 5.4 update to meet the stringent data processing demands of production AI pipelines. Hazelcast counts some very large enterprises among its customers, including JPMorgan Chase, Volvo, New York Life and Target.

“It’s another step in our multi-year leadership powering AI workloads for really large organizations,” Kelly Herrell, CEO of Hazelcast, told VentureBeat. “For AI to deliver value, you know, the data processing infrastructure simply has to work and that’s where our DNA comes in.”

When it comes to real-time data, consistency matters

As a real-time platform, Hazelcast ingests and processes data as it streams into the system. Any modern data system that has to be highly available runs on multiple nodes, and keeping the copies of data on those nodes in agreement can lead to consistency issues.

“Data consistency is a hard problem,” Herrell said. “We’ve always been really strong with consistency, we’ve had a consistency subsystem for years and our biggest customers have exercised the heck out of it.”

With the growth and speed of AI-powered applications, consistency requirements are more critical and time-dependent than ever before. To help improve consistency, Hazelcast 5.4 introduces an advanced CP subsystem. The CP subsystem is a component of a Hazelcast cluster that builds a strongly consistent in-memory data layer. The name comes from the CAP (Consistency, Availability and Partition tolerance) theorem: a CP system keeps data consistent across a distributed cluster when the network partitions, at the cost of some availability.
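To make the CP trade-off concrete, here is a minimal sketch of a majority-quorum write in Python. It is an illustration of the general idea only, not Hazelcast's implementation or API; the `Replica` class and `quorum_write` function are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Replica:
    """Hypothetical cluster member holding a copy of the data."""
    name: str
    reachable: bool = True
    data: Dict[str, str] = field(default_factory=dict)


def quorum_write(replicas: List[Replica], key: str, value: str) -> bool:
    """Apply a write only if a majority of replicas can be reached.

    Refusing the write when no majority is available is the "C over A"
    choice: the cluster gives up availability rather than risk diverging copies.
    """
    majority = len(replicas) // 2 + 1
    reachable = [r for r in replicas if r.reachable]
    if len(reachable) < majority:
        return False  # no quorum: reject instead of writing to a minority
    for replica in reachable:
        replica.data[key] = value
    return True


if __name__ == "__main__":
    cluster = [Replica("a"), Replica("b"), Replica("c", reachable=False)]
    print(quorum_write(cluster, "balance", "100"))  # two of three reachable
    cluster[1].reachable = False
    print(quorum_write(cluster, "balance", "250"))  # only one of three reachable
```

With three members, the write succeeds while two are reachable and is rejected once only one remains, so the unreachable side of a partition can never accept a conflicting update.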

Additionally, Hazelcast 5.4 integrates a new Thread-Per-Core (TPC) architecture that boosts computation performance by a minimum of 30% through new threading capabilities.

“Most developers know that when you invoke consistency, you’re going to slow the system down, that’s the trade off,” Herrell said. “By combining advanced consistency with TPC what we’re doing is we’re basically saying there is almost no trade off whatsoever in performance to get consistency.”

Tiered storage helps to feed AI’s need for data

Hazelcast’s platform has an in-memory data processing capability as a core component.

The reality with modern AI/ML workloads is that the volume of data is massive, and it often exceeds what can be held in memory. That’s where the new tiered storage capability fits in, adding storage tiers with different performance characteristics to support real-time data processing.

“In the AI world the appetite for data is just insatiable,” Herrell said. “You don’t want to hold everything in memory as that can get really expensive. So being able to use tiered storage lets users scale up the amount of storage that they’ve got to be able to process AI/ML workloads in one integrated environment.”
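The general idea behind tiering can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how Hazelcast implements tiered storage: the `TieredStore` class is hypothetical, the "cold" tier is a plain dictionary standing in for slower storage such as disk, and least-recently-used eviction is an assumed policy.

```python
from collections import OrderedDict
from typing import Any, Dict


class TieredStore:
    """Hypothetical two-tier store: a small hot tier backed by a larger cold tier."""

    def __init__(self, hot_capacity: int) -> None:
        if hot_capacity < 1:
            raise ValueError("hot_capacity must be at least 1")
        self.hot_capacity = hot_capacity
        self.hot: "OrderedDict[str, Any]" = OrderedDict()  # fast, limited memory
        self.cold: Dict[str, Any] = {}  # stand-in for slower, cheaper storage

    def put(self, key: str, value: Any) -> None:
        self.cold.pop(key, None)  # keep a single copy of each key
        self.hot[key] = value
        self.hot.move_to_end(key)  # mark as most recently used
        self._evict()

    def get(self, key: str) -> Any:
        if key in self.hot:
            self.hot.move_to_end(key)
            return self.hot[key]
        if key in self.cold:
            value = self.cold.pop(key)  # promote on access
            self.hot[key] = value
            self._evict()
            return value
        raise KeyError(key)

    def _evict(self) -> None:
        # Demote least recently used entries until the hot tier fits.
        while len(self.hot) > self.hot_capacity:
            old_key, old_value = self.hot.popitem(last=False)
            self.cold[old_key] = old_value


if __name__ == "__main__":
    store = TieredStore(hot_capacity=2)
    for k in ("a", "b", "c"):
        store.put(k, k.upper())
    print(list(store.hot), list(store.cold))
```

Callers see one store, while only the most recently used entries occupy the expensive memory tier.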

Real-time data processing accelerates AI for credit card fraud detection

To date, Hazelcast’s platform has been used for a variety of AI/ML use cases, including fraud detection.

Herrell said that among his company’s users is a large credit card company that is using the Hazelcast platform for credit card fraud detection. When an individual swipes a credit card, the payment terminal signals either approved or not approved. That approval needs to happen within a very short period.

He explained that from the instant the card is swiped, data traverses the network to the cloud, where the decision is made in real time within a window of only 50 milliseconds to detect fraud.

“In that window, we actually process six discrete ML algorithms for fraud detection and create a composite score in 50 milliseconds that gives a deeply informed answer as to whether they should or should not be authorized,” Herrell said.
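As a rough illustration of what combining six model outputs into one score under a 50 millisecond budget might look like, consider the Python sketch below. Everything in it is an assumption made for the example: the six scoring functions, the transaction fields, the equal weights and the weighted-average formula are hypothetical and do not describe the customer's actual models or Hazelcast's pipeline.

```python
import time
from typing import Callable, Dict, Sequence, Tuple

Transaction = Dict[str, float]
Model = Callable[[Transaction], float]  # each model returns a risk in [0, 1]

# Six hypothetical stand-ins for trained fraud models.
MODELS: Sequence[Model] = (
    lambda t: min(t["amount"] / 5000.0, 1.0),      # unusually large amount
    lambda t: t["foreign"],                        # 1.0 if outside home country
    lambda t: min(t["velocity"] / 10.0, 1.0),      # recent transactions per hour
    lambda t: t["night"],                          # 1.0 if at an unusual hour
    lambda t: t["new_merchant"],                   # 1.0 if merchant never seen
    lambda t: min(t["distance_km"] / 1000.0, 1.0), # distance from last purchase
)


def composite_fraud_score(
    txn: Transaction,
    models: Sequence[Model],
    weights: Sequence[float],
    budget_ms: float = 50.0,
) -> Tuple[float, bool]:
    """Return (weighted average risk, whether scoring finished within budget)."""
    if len(models) != len(weights) or not models:
        raise ValueError("need one weight per model")
    start = time.perf_counter()
    total = sum(w * m(txn) for m, w in zip(models, weights))
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return total / sum(weights), elapsed_ms <= budget_ms


if __name__ == "__main__":
    txn = {"amount": 2500.0, "foreign": 1.0, "velocity": 2.0,
           "night": 0.0, "new_merchant": 1.0, "distance_km": 100.0}
    score, in_budget = composite_fraud_score(txn, MODELS, [1.0] * 6)
    print(f"score={score:.3f} within_budget={in_budget}")
```

A production system would run real models, likely in parallel, and compare the composite score against a tuned threshold to approve or decline the transaction.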