When Square Enix announced Project Flare last week, the Japanese company said it would revolutionize gaming through smart use of the cloud.

Call it “cloud gaming 2.0.” It certainly isn’t what OnLive and Gaikai tried to do by streaming games from the Internet to a person’s compatible machine. Rather, Square Enix calls Project Flare a new way to create cloud games, replacing consoles with virtual supercomputers. As such, it is much more of a technological breakthrough than other cloud-gaming systems, said Jacob Navok, director of business development at Square Enix.

Square Enix says Project Flare will be more disruptive because it can create new experiences and because it scales exponentially, which the company says lets it make better use of server hardware.

We sat down last week with Yoichi Wada, chairman of Square Enix; Jacob Navok, director of business development; and Tetsuji Iwasaki, development director of new technologies. They explained their intentions and how they will work with others to fully build out Project Flare.

Here’s an edited transcript of our interview.

GamesBeat: Tell us how this got started.

Jacob Navok: We’ve been working on this for a couple of years now. I’m very happy to finally make it public. We believe that rendering will inevitably shift to the data center. We believe that what is special about the cloud is that you can unlock all the processing potential in the server farm, all of those central processing units (CPUs) and graphics processing units (GPUs) working together. When you harness that, you can create this virtual supercomputer for games. You can revolutionize the way that people interact with content and deliver content. That’s going to create new experiences.

GamesBeat: Tell us what is different.

For us as a publisher, that’s exciting — the idea that we can deliver radically different experiences for games, enhance our games, offer new technology. Because when that technology evolution happens, we see innovation. When we see innovation, we see growth because new kinds of games come out.

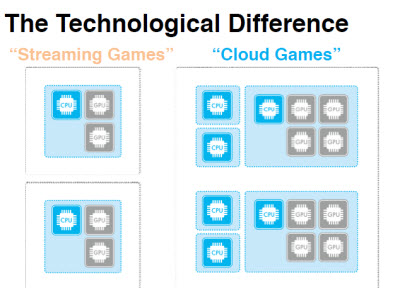

Up until now, we’ve primarily seen games streamed. We’ve seen Gaikai and OnLive. What they do is take the console and put it in the data center. They don’t change the games. They don’t change the way that the game design works. We were disappointed by this. We wanted to see the real power of the cloud come out. We didn’t see that happen. We decided to take advantage of that opportunity ourselves. We created Project Flare.

Project Flare is a technological breakthrough that replaces the console with a virtual supercomputer. What’s the difference between streaming games and Project Flare? Streaming games are only console games or PC games. Project Flare is about new experiences. It’s about enhancing games with the power of the cloud. We can offer console games and the same experiences the other guys have, but that’s just not as interesting to us.

We have these two other incredibly important components. I’m not going to get into them too much today. They’re more on the technology and business side. But we scale exponentially as opposed to linearly. We also significantly reduce the amount of latency involved in the process, limiting it primarily to network latency rather than game latency. Again, I’ll get more into this later.

GamesBeat: So what can you do with Flare?

What I want to focus on is the new experiences that Project Flare can bring to life, that we believe the cloud can bring to our users. What we think it can do, for example, is deepened immersion — Hollywood-style special effects, physics, animation done in real time. From there we can think of new designs and new features using video streams. We also believe that richer, more lifelike worlds are going to come from the idea of big-data rendering. Finally, what we’re calling the extremes of realism and fantasy — being able to do massed battles, massed crowds, and incredible AI you couldn’t do before.

We believe that much more is possible. There are more ideas we haven’t come up with before. That’s why we want to open this up to developers. We’re announcing this in the current state, where it’s not really a full service yet, because we want to hear from other developers.

What is deepened immersion? For us, that’s real-time Hollywood-quality animation and physics. We see this meteor striking Earth. There are all of these textures and ridges on the meteor. There are these particles emanating from the planet and the atmosphere. If we were to try to do this at a Pixar quality, a very high movie quality, we would normally need a render farm spending hours to render each frame. We only have milliseconds to do that. We need to have the power of the data center running somewhere to calculate that for users because you can’t do that with a single CPU and GPU in a box under someone’s television.

GamesBeat: Tell us more about what is different.

We thought about how everything is a shared resource for us. Every world should be shared, whether it’s single-player or multiplayer. What’s interesting is that when you share everything and you’re rendering everything and putting it together and everything is a video stream going to the user, you can use those video streams to create new experiences for users. I’ll showcase that a bit better later.

From there, we thought about, well, we’re in a data center. We can get data from anywhere. We’re just rendering algorithms. Why should our programmers spend time rendering maps or creating cities? Why should we be doing traffic patterns or weather patterns ourselves? Why can’t we use Google Maps, or existing traffic or weather data? Why can’t we use IBM’s Watson to power our AI and create Skynet and cause the downfall of mankind? All sorts of cool things can happen when we take this outside data and bring it to our data center and send it to the users.

From there we get what we call the extremes of realism and fantasy. Here we see Shibuya Crossing. It’s really crowded. If we tried to do this in a game right now, we couldn’t do it. If you look at any game that takes place in a city, the most they have is a couple of people on the screen, a few cars. Every time you add another object on the screen, the physics calculations increase exponentially, to the point where you have limitations on what’s possible. That’s why, when you see videos of people in [The Elder Scrolls V] Skyrim raining cheese wheels down, the frame rate drops completely. We can’t do that in a realistic, good-quality experience with current methods of calculation. But if we offload that to the cloud and utilize our new rendering system, we can make this possible.
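To illustrate why crowded scenes get expensive, here is a minimal sketch of a naive all-pairs collision check. It is an illustration only, not Square Enix's method: the function names, the equal-radius circles, and the sample positions are all assumptions. Under this naive approach the number of pair tests grows quadratically with the object count (strictly speaking not exponentially), which is still enough to overwhelm a single machine as crowds grow.

```python
from itertools import combinations


def pair_count(n: int) -> int:
    """Number of unordered object pairs a naive all-pairs check must test."""
    return n * (n - 1) // 2


def naive_collisions(positions, radius):
    """Return index pairs of equal-radius circles that overlap.

    Hypothetical helper: tests every pair, so the work grows with pair_count(n).
    """
    hits = []
    for (i, (xi, yi)), (j, (xj, yj)) in combinations(enumerate(positions), 2):
        # Two circles overlap when their centers are within two radii.
        if (xi - xj) ** 2 + (yi - yj) ** 2 <= (2 * radius) ** 2:
            hits.append((i, j))
    return hits


if __name__ == "__main__":
    # Show how quickly the number of pair tests grows with object count.
    for n in (10, 100, 1000):
        print(n, "objects ->", pair_count(n), "pair tests")
```

Real physics engines reduce this cost with spatial partitioning, but the sketch shows why each added object makes every frame more expensive than the last.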

We’ve separated rendering, and we’re applying our technology to a single server. We have, on one server, 57 instances of games. Before our technology, you could only run a few. We’re sharing the resources between all of these games. The games that we’re running are 16 instances of Dragon Quest X, another 32 instances of Mini Ninjas, and nine instances of Deus Ex, all running on a single server. Each one of these screens is connected to one GPU on this server, so all of this rendering is happening on one GPU, one GPU, one GPU, one GPU.
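The instance counts Navok quotes can be tallied in a few lines. This is only an arithmetic check of the figures in the interview. The dictionary layout and variable names are assumptions for illustration, and nothing here reflects how Project Flare actually schedules games.

```python
# Per-title instance counts as quoted in the interview.
instances = {
    "Dragon Quest X": 16,
    "Mini Ninjas": 32,
    "Deus Ex": 9,
}

# Total number of game instances sharing the single server.
total = sum(instances.values())

# Each title's share of the instances on that server.
shares = {title: count / total for title, count in instances.items()}

if __name__ == "__main__":
    print("total instances:", total)
    for title, share in shares.items():
        print(f"{title}: {share:.1%}")
```

The three counts add up to the 57 instances cited for the one server.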