GamesBeat: When you say that one GPU connects to each screen, what do you mean?

Navok: Each of those monitors is hooked up to one GPU that’s running on one server. We have 57 instances on one server, but that one server has four GPUs. That server has two Intel Xeon processors and four Nvidia Quadro K5000s. Depending on the game, we could increase this density dramatically. For really high-end games, the number would go down. But we’re maximizing that throughput.
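To put those density figures in perspective, here is the arithmetic as a short Python sketch. It assumes the 57 instances are spread evenly across the four GPUs and two CPUs. Navok doesn't say how Flare actually distributes them, so `instances_per_device` is a hypothetical helper.

```python
# Hardware figures quoted above for one Project Flare demo server.
INSTANCES_PER_SERVER = 57
GPUS_PER_SERVER = 4      # four Nvidia Quadro K5000s
CPUS_PER_SERVER = 2      # two Intel Xeon processors


def instances_per_device(instances: int, devices: int) -> float:
    """Average number of game instances each device serves,
    assuming (hypothetically) an even spread across devices."""
    return instances / devices


per_gpu = instances_per_device(INSTANCES_PER_SERVER, GPUS_PER_SERVER)
per_cpu = instances_per_device(INSTANCES_PER_SERVER, CPUS_PER_SERVER)
print(f"{per_gpu:.2f} instances per GPU, {per_cpu:.1f} per CPU")
# prints "14.25 instances per GPU, 28.5 per CPU"
```

Under that even-spread assumption, each of the four monitors in the demo would be showing roughly 14 game instances.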

We also have a demo of Final Fantasy XI. Normally, the only information I have about my party is this tiny red bar in the corner telling me their HP and MP. But why not use video streams? Everything is a shared resource with Project Flare, so, finally, I can see exactly what my party is doing. I can see where they are in the world. I can see their MP and their stats. I can call them over to me, and we can fight together. I can see how best to strategize and balance thanks to this technology. This is very simple stuff. This is what we can do in a massively multiplayer online game (MMO), where it makes a lot of sense and it’s very easy to share the world with a party. We want to take this a step further, though, and figure out how far past this we can go.

In an MMO, for example, if my friend’s in a town, I can click on this window and move in right next to him. That’s one simple way to do it. But then take that further. What if it’s a game where I’m leaping from video stream to video stream? What if there are windows of video screens in the world, and I’m clicking on them and jumping through them? What if we take a game like Deus Ex, which is normally a stealth game, and put in video streams of other people playing in other universes? We have to use those video streams in our own gameplay. Deus Ex goes from a stealth game to a strategic game, because we have to keep in mind all of this other information that’s taking place.

We’ll show you Agni’s Philosophy running live on our Luminous Studio engine. For the very first time, we’re going to allow you to change the camera angle. We’ve never allowed the camera to be moved, because usually this is running on a single powerful PC with one CPU and one GPU. If you move the camera around while the scene is playing, the framerate drops, and you can’t see anything. We don’t have to worry about that anymore. You can pause it and move it around. All the objects on the screen are generated in real time, very smoothly.

What we’re trying to illustrate here is the Hollywood level of effects and graphics we’ve already reached as a publisher. What we’re trying to do with Project Flare is bring that to people at an acceptable quality. Not only can we do this with the cutscenes, but we can also do things like manipulate the characters for the first time. You can go ahead and play with Agni. This is the first time we’re letting people do this. On the bottom right of the pad here, you can cycle between some preset cameras. We’ve also added single-touch swipe to rotate and double-touch swipe to pan.
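The pad controls described here (preset cycling, single-touch rotate, double-touch pan) amount to a small input mapping. Here is a minimal orbit-camera sketch in Python. The `OrbitCamera` class, the speeds, and the preset angles are all hypothetical; none of it is Luminous Studio's API.

```python
import math
from dataclasses import dataclass, field

# Hypothetical tuning values; nothing here comes from Luminous Studio.
ROTATE_SPEED = 0.01   # radians per pixel of swipe
PAN_SPEED = 0.005     # world units per pixel of swipe
MAX_PITCH = 1.5       # radians; keeps the camera from flipping over the top
PRESETS = [(0.0, 0.2), (math.pi / 2, 0.1), (math.pi, 0.4)]  # (yaw, pitch) pairs


@dataclass
class OrbitCamera:
    yaw: float = 0.0     # rotation around the vertical axis, radians
    pitch: float = 0.0   # elevation above the horizon, radians
    target: list = field(default_factory=lambda: [0.0, 0.0, 0.0])  # x, y, z
    preset_index: int = -1  # -1 means "free camera"


def handle_swipe(cam: OrbitCamera, touches: int, dx: float, dy: float) -> None:
    """Apply a swipe of (dx, dy) pixels made with `touches` fingers."""
    if touches == 1:
        # Single-touch swipe: orbit around the target.
        cam.yaw = (cam.yaw + dx * ROTATE_SPEED) % (2 * math.pi)
        cam.pitch = max(-MAX_PITCH, min(MAX_PITCH, cam.pitch + dy * ROTATE_SPEED))
    elif touches == 2:
        # Double-touch swipe: slide the target sideways (relative to the
        # current yaw) and vertically, leaving the viewing angle unchanged.
        cam.target[0] += dx * PAN_SPEED * math.cos(cam.yaw)
        cam.target[2] -= dx * PAN_SPEED * math.sin(cam.yaw)
        cam.target[1] += dy * PAN_SPEED
    # Other touch counts are ignored in this sketch.


def next_preset(cam: OrbitCamera) -> None:
    """Cycle to the next preset camera angle (the on-pad button)."""
    cam.preset_index = (cam.preset_index + 1) % len(PRESETS)
    cam.yaw, cam.pitch = PRESETS[cam.preset_index]
```

In a streamed setup like Flare's, these touch events would travel to the server, which renders the scene from the updated camera; the sketch covers only the input mapping.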

GamesBeat: Where is your demo running?

Navok: We have two servers back there, and we’re streaming it live from those servers. We’re not trying to showcase our ability to stream something. We’re showcasing our ability to render it in real time.

This demo here is one of my favorites. It shows what we can do when we enhance our existing titles. We take Deus Ex, and we radically increase the number of objects on the screen. Normally, the framerate would drop and you couldn’t do anything. But what we’ve done here is very interesting. We’ve separated rendering and physics onto two separate servers, which interact with each other to create this final image. All of those relational physics calculations run without problems. You could try doing this another way, but it’s going to affect the framerate and the image quality. Imagine if those boxes became enemies, vehicles, and weapons, and we were able to render them side by side in a data center somewhere. You can start to see that image of Shibuya, with all of those people, happening at the quality level of Agni’s Philosophy. That becomes possible with the power of Project Flare.
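The split Navok describes, with physics on one server and rendering on another, is essentially a producer/consumer pipeline. Here is a minimal sketch, assuming the two servers exchange per-frame state snapshots. Two Python threads and a bounded queue stand in for the two machines and the network link between them. The interview doesn't say what Flare actually sends between servers, so `Snapshot`, `physics_server`, and `render_server` are hypothetical.

```python
import queue
import threading
from dataclasses import dataclass

GRAVITY = -9.8   # m/s^2
DT = 1 / 60      # one physics step per 60 fps frame
FLOOR = 0.0


@dataclass(frozen=True)
class Snapshot:
    frame: int
    positions: tuple  # one (x, y) pair per box


def physics_server(n_boxes: int, n_frames: int, out_q: queue.Queue) -> None:
    """Stand-in for the physics server: steps falling boxes and publishes
    an immutable snapshot of every box's position each frame."""
    pos = [(float(i), 10.0) for i in range(n_boxes)]
    vel = [0.0] * n_boxes
    for frame in range(n_frames):
        for i, (x, y) in enumerate(pos):
            vel[i] += GRAVITY * DT
            y = max(FLOOR, y + vel[i] * DT)
            if y == FLOOR:
                vel[i] = 0.0  # box comes to rest on the floor
            pos[i] = (x, y)
        out_q.put(Snapshot(frame, tuple(pos)))  # blocks if the renderer lags
    out_q.put(None)  # end-of-stream marker


def render_server(in_q: queue.Queue, frames_out: list) -> None:
    """Stand-in for the rendering server: consumes snapshots and 'draws'
    them (here it just records the frame number and box count)."""
    while (snap := in_q.get()) is not None:
        frames_out.append((snap.frame, len(snap.positions)))


link = queue.Queue(maxsize=8)  # bounded: applies backpressure to physics
rendered = []
t_phys = threading.Thread(target=physics_server, args=(1000, 120, link))
t_rend = threading.Thread(target=render_server, args=(link, rendered))
t_phys.start(); t_rend.start()
t_phys.join(); t_rend.join()
print(len(rendered), rendered[-1])  # 120 (119, 1000)
```

Because each snapshot is immutable, the renderer never sees a half-updated frame, and the bounded queue keeps the physics side from racing too far ahead of rendering.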

What we’re showing is just a spark of the imagination, where we want to take these games in the future. We’ve just used our existing resources to put together some simple demos. From now on, we hope to create games that consider this from the ground up, that we can enhance with this technology, that really push the boundaries of what we can do for games.

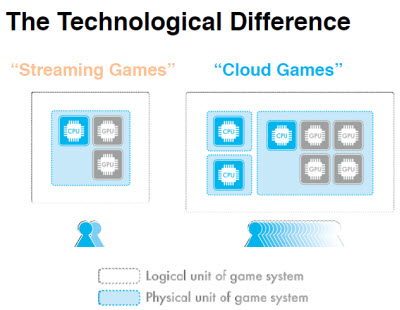

To explain a little bit about the technology that’s powering Flare, I want to explain the difference between streaming games and cloud games. For streaming games, the logical unit and the physical unit are the same. It’s the same as a PC or a console. Your unit of logic — a CPU connected on a motherboard to one or more GPUs — is always together. If you try to increase this, you’re simply increasing the same number of logical units. It doesn’t scale exponentially. We do something very different. We separate CPU and GPU. This is the first time this has ever been done.

GamesBeat: Why is that important?

Navok: It’s a very strange concept the first time you think about it. What happens is, some games are CPU heavy. Some games are GPU heavy. If you’re at home, it’s not really a problem, but when you have a lot of users running in a data center, that imbalance starts to add up. If we connected them together in one logical, physical unit, that imbalance would break our cost feasibility. But with Project Flare, if we need more compute [power], we add more CPU servers. If we need more rendering, we add more rendering servers. We can make sure that things balance. The end result is that we get exponential scalability.
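The balancing argument can be made concrete with a little arithmetic. Here is a minimal sketch, assuming demand can be measured in whole CPUs and whole GPUs. It borrows the two-CPU, four-GPU box from the demo server, and the workload numbers are hypothetical. It shows the idle hardware that a fixed CPU-to-GPU ratio leaves behind. It does not model the "exponential" scaling claim, which the interview doesn't quantify.

```python
import math


def coupled_servers(cpu_demand: float, gpu_demand: float,
                    cpus_per_box: int, gpus_per_box: int) -> int:
    """Boxes needed when every box bundles CPUs and GPUs together:
    the scarcer resource sets the count, and the other sits partly idle."""
    return math.ceil(max(cpu_demand / cpus_per_box, gpu_demand / gpus_per_box))


def disaggregated_servers(cpu_demand: float, gpu_demand: float,
                          cpus_per_box: int, gpus_per_box: int) -> tuple:
    """(CPU boxes, GPU boxes) when each pool is sized to its own demand."""
    return (math.ceil(cpu_demand / cpus_per_box),
            math.ceil(gpu_demand / gpus_per_box))


# Hypothetical CPU-heavy mix: demand measured in whole CPUs and whole GPUs.
cpu_demand, gpu_demand = 400, 100
boxes = coupled_servers(cpu_demand, gpu_demand, cpus_per_box=2, gpus_per_box=4)
idle_gpus_coupled = boxes * 4 - gpu_demand

cpu_boxes, gpu_boxes = disaggregated_servers(cpu_demand, gpu_demand, 2, 4)
idle_gpus_split = gpu_boxes * 4 - gpu_demand

print(boxes, idle_gpus_coupled)                # 200 700
print(cpu_boxes, gpu_boxes, idle_gpus_split)   # 200 25 0
```

With bundled boxes, this CPU-heavy mix forces 200 boxes and strands 700 of their 800 GPUs. Separate pools need the same CPU capacity but only 25 GPU boxes.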

What happens in a typical streaming game is that if you want to add more people, you add another server, and that server supports exactly the same number of people as the ones before it. With us, the more servers you add, the more people who can get on there, exponentially. The more servers we add, the more efficient we get, and our costs go down.

What’s happening with the technology behind this is that we match the CPU and the GPU for efficiency. This is not the sort of thing you normally have to do at home; you don’t have to worry about it. What typically happens is that the CPU and GPU are always waiting for each other. Those waits, in the data center, add up. If you have 1,000 people with CPUs and GPUs waiting for each other, you have a massive amount of wasted wait time. We fill all those gaps with commands from other people’s games. The goal is to get as close as possible to 100 percent efficiency: 100 percent utilization of the CPU and the GPU. Again, it’s never been done before, because the majority of games were written for one CPU and one GPU. It’s a very different type of architecture, and a very different type of thinking.
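The gap-filling idea can be illustrated with a toy discrete-event simulation. Here is a minimal sketch, assuming each game alternates a CPU phase and a GPU phase and that only the GPU is shared. The interview doesn't describe Flare's actual scheduler, and `gpu_utilization` is a hypothetical helper.

```python
import heapq


def gpu_utilization(n_games: int, cpu_ms: float, gpu_ms: float,
                    horizon_ms: float = 10_000.0) -> float:
    """Fraction of time one shared GPU is busy when n_games each loop:
    cpu_ms of CPU work, then gpu_ms of GPU work, then the next frame.

    Simplifying assumptions (hypothetical, not Flare's scheduler): every game
    has its own CPU, the GPU runs one job at a time in arrival order, and a
    game can't start its next frame until its GPU work finishes.
    """
    if cpu_ms <= 0 or gpu_ms <= 0:
        raise ValueError("cpu_ms and gpu_ms must be positive")
    # Min-heap of (time a game's GPU job becomes ready, game id).
    ready = [(cpu_ms, g) for g in range(n_games)]
    heapq.heapify(ready)
    gpu_free_at = 0.0
    busy = 0.0
    while ready:
        t, g = heapq.heappop(ready)
        if t >= horizon_ms:
            break
        start = max(t, gpu_free_at)  # wait if the GPU is busy with another game
        end = start + gpu_ms
        busy += min(end, horizon_ms) - min(start, horizon_ms)
        gpu_free_at = end
        heapq.heappush(ready, (end + cpu_ms, g))  # next frame's CPU work
    return busy / horizon_ms


for n in (1, 2):
    print(n, f"{gpu_utilization(n, cpu_ms=10, gpu_ms=10):.3f}")
# 1 0.500
# 2 0.999
```

With one game, the GPU idles while the CPU prepares each frame, so in this toy model it is busy only half the time. Interleaving a second game's commands fills those gaps almost entirely.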

GamesBeat: How is this more scalable?

Navok: From there, we share the resources for scalability. Typically, a streaming game is the same as a PC or a console game. Every game is a silo: its own instance, its own executable. We create one instance, and we have the users access those calculations and those resources. The more users we add, the better we scale. More and more people coming in makes our system a lot better and a lot cheaper.
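A toy cost model helps show why a single shared instance gets cheaper per user as more users arrive. Here is a minimal sketch, assuming each user's cost splits into a shared part (the game simulation) and a per-user part (their view). The split and the numbers are hypothetical, not Flare's actual accounting.

```python
def siloed_cost(users: int, sim_cost: float, view_cost: float) -> float:
    """Every user runs a full private copy: simulation plus their own view."""
    return users * (sim_cost + view_cost)


def shared_cost(users: int, sim_cost: float, view_cost: float) -> float:
    """One shared simulation; only the per-user view scales with users."""
    return sim_cost + users * view_cost


# Hypothetical per-tick costs in arbitrary units.
SIM, VIEW = 8.0, 2.0
for users in (1, 10, 100):
    per_user = shared_cost(users, SIM, VIEW) / users
    print(users, siloed_cost(users, SIM, VIEW), shared_cost(users, SIM, VIEW), per_user)
```

In this model, the per-user cost of the shared instance falls toward `VIEW` as users are added. Siloed instances cost the same per user no matter how many there are.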

There’s a very interesting relationship in all of this: the reduction in rendering time that comes from having more people in our system leads to cost reduction, which leads to latency reduction. Every time it takes us one less millisecond to render things for all of these people, we have one more millisecond we can give to network latency. We significantly reduce the amount of game-centric latency. Ideally, what’s left is only network latency.
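The trade-off described here is a fixed latency budget: whatever isn't spent on the server goes back to the network. Here is a minimal worked example, assuming a 100 ms end-to-end target and the render and video encode/decode times shown. All of those figures are hypothetical, since the interview gives no actual numbers.

```python
def network_budget_ms(total_ms: float, render_ms: float,
                      encode_ms: float, decode_ms: float) -> float:
    """Milliseconds left for the network once server-side rendering and
    video encode/decode have been paid for out of a fixed end-to-end budget."""
    remaining = total_ms - (render_ms + encode_ms + decode_ms)
    if remaining < 0:
        raise ValueError("processing alone exceeds the latency budget")
    return remaining


# Hypothetical numbers: a 100 ms end-to-end target.
before = network_budget_ms(100, render_ms=16, encode_ms=5, decode_ms=5)
after = network_budget_ms(100, render_ms=12, encode_ms=5, decode_ms=5)
print(before, after, after - before)  # 74 78 4
```

Each millisecond shaved off rendering shows up one-for-one as extra network allowance, which is the relationship Navok points to.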

We look at this as the evolution of games. Up until now, these games have been single, physical, logical units, little microorganisms operating independently. Even if you have millions of them, they don’t add up to anything larger. We try to connect those, to evolve them from single-celled creatures into multicellular creatures. The knowledge it takes to do this is vast and very disparate. It’s not the type of thing that’s normally on a game team. You have to understand video encoding, how rendering works, how server-side processing is going to work, and how GPUs work, so coalescing all of that into a single vision is incredibly difficult.

From here, we’re actively working with partners to develop a consumer service, and after that, with developers. We’re actively recruiting them and seeking a dialogue with developers who are inspired by the vision.