When Square Enix announced Project Flare last week, the Japanese company said it would revolutionize gaming through smart use of the cloud.

Call it “cloud gaming 2.0.” It certainly isn’t what OnLive and Gaikai tried to do by streaming games from the Internet to a person’s compatible machine. Rather, Square Enix calls Project Flare a new way to create cloud games, replacing consoles with virtual supercomputers. As such, it is much more of a technological breakthrough than other cloud-gaming systems, said Jacob Navok, director of business development at Square Enix.

Project Flare promises to disrupt more because it can create new experiences, and it is exponentially scalable, meaning it makes better use of server hardware.

We sat down last week with Yoichi Wada, chairman of Square Enix; Jacob Navok, director of business development; and Tetsuji Iwasaki, development director of new technologies. They explained their intentions and how they will work with others to fully build out Project Flare.

Here’s an edited transcript of our interview.

GamesBeat: Tell us how this got started.

Jacob Navok: We’ve been working on this for a couple of years now. I’m very happy to finally make it public. We believe that rendering will inevitably shift to the data center. We believe that what is special about the cloud is that you can unlock all the processing potential in the server farm, all of those central processing units (CPUs) and graphics processing units (GPUs) working together. When you harness that, you can create this virtual supercomputer for games. You can revolutionize the way that people interact with content and deliver content. That’s going to create new experiences.

GamesBeat: Tell us what is different.

For us as a publisher, that’s exciting — the idea that we can deliver radically different experiences for games, enhance our games, offer new technology. Because when that technology evolution happens, we see innovation. When we see innovation, we see growth because new kinds of games come out.

Up until now, we’ve primarily seen games streamed. We’ve seen Gaikai and OnLive. What they do is take the console and put it in the data center. They don’t change the games. They don’t change the way that the game design works. We were disappointed by this. We wanted to see the real power of the cloud come out. We didn’t see that happen. We decided to take advantage of that opportunity ourselves. We created Project Flare.

Project Flare is a technological breakthrough that replaces the console with a virtual supercomputer. What’s the difference between streaming games and Project Flare? Streaming games are only console games or PC games. Project Flare is about new experiences. It’s about enhancing games with the power of the cloud. We can offer console games and the same experiences the other guys have, but that’s just not as interesting to us.

We have these two other incredibly important components. I’m not going to get into them too much today. They’re more on the technology and business side. But we scale exponentially as opposed to linearly. We also significantly reduce the amount of latency involved in the process, limiting it primarily to network latency rather than game latency. Again, I’ll get more into this later.

GamesBeat: So what can you do with Flare?

What I want to focus on is the new experiences that Project Flare can bring to life, that we believe the cloud can bring to our users. What we think it can do, for example, is deepened immersion — Hollywood-style special effects, physics, animation done in real time. From there we can think of new designs and new features using video streams. We also believe that richer, more lifelike worlds are going to come from the idea of big-data rendering. Finally, what we’re calling the extremes of realism and fantasy — being able to do massed battles, massed crowds, and incredible AI you couldn’t do before.

We believe that much more is possible. There are more ideas we haven’t come up with before. That’s why we want to open this up to developers. We’re announcing this in the current state, where it’s not really a full service yet, because we want to hear from other developers.

What is deepened immersion? For us, that’s real-time Hollywood-quality animation and physics. We see this meteor striking Earth. There are all of these textures and ridges on the meteor. There are these particles emanating from the planet and the atmosphere. If we were to try to do this at a Pixar quality, a very high movie quality, we would normally need a render farm spending hours to render each frame. We only have milliseconds to do that. We need to have the power of the data center running somewhere to calculate that for users because you can’t do that with a single CPU and GPU in a box under someone’s television.

GamesBeat: Tell us more about what is different.

We thought about how everything is a shared resource for us. Every world should be shared, whether it’s single-player or multiplayer. What’s interesting is that when you share everything and you’re rendering everything and putting it together and everything is a video stream going to the user, you can use those video streams to create new experiences for users. I’ll showcase that a bit better later.

From there, we thought about, well, we’re in a data center. We can get data from anywhere. We’re just rendering algorithms. Why should our programmers spend time rendering maps or creating cities? Why should we be doing traffic patterns or weather patterns ourselves? Why can’t we use Google maps, or traffic or data patterns? Why can’t we use IBM’s Watson to power our AI and create Skynet and cause the downfall of mankind? All sorts of cool things can happen when we take this outside data and bring it to our data center and send it to the users.

From there we get what we call the extremes of realism and fantasy. Here we see Shibuya crossing. It’s really crowded. If we tried to do this in a game right now, we couldn’t do it. If you look at any game that takes place in a city, the most they have is a couple of people on the screen, a few cars. Every time you add another object on the screen, the exponential physics calculations increase to the point where you have limitations on what’s possible. That’s why, when you see videos of people in [The Elder Scrolls V] Skyrim raining cheese wheels down, the frame rate drops completely. We can’t do that in a realistic, good-quality experience with current methods of calculation. But if we offload that to the cloud and utilize our new rendering system, we can make this possible.

We’ve separated rendering, and we’re applying our technology to a single server. We have, on one server, 57 instances of games. This is something that, before our technology, you could only run a few of. We’re sharing the resources between all of these games. The games that we’re running are 16 instances of Dragon Quest X, another 32 instances of Mini Ninjas, and nine instances of Deus Ex, all running on a single server. Each one of these screens is connected to one GPU on this server, so all of this rendering is happening on one GPU, one GPU, one GPU, one GPU.

GamesBeat: When you say that one GPU connects to each screen, what do you mean?

Navok: Each of those monitors is hooked up to one GPU that’s running on one server. We have 57 instances on one server, but that one server has four GPUs. That server has two Intel Xeon processors and four Nvidia Quadro K5000s. Depending on the game, we could increase this density dramatically. If it were really high-quality games, the number would go down. But we’re maximizing that throughput.

We also have a demo of Final Fantasy XI. Normally the only information I know about my party is this tiny red bar in the corner that’s telling me their HP and MP. But why don’t we use video streams? Everything is a shared resource with Project Flare, so, finally, I can see exactly what my party is doing. I can see where they are in the world. I can see their MP and their stats. I can call them over to me, and we can fight together. I can see how best to strategize and balance thanks to this technology. This is very simple stuff. This is what we can do in a massively multiplayer online game (MMO) where it makes a lot of sense, and it’s very easy to share the world with a party. We want to take this a step beyond, though, and figure out where we can go past this.

In an MMO, for example, if my friend’s in a town, I can click on this window and move in right next to him. That’s one simple way to do it. But then take that beyond. What if it’s a game where I’m leaping from video stream to video stream? What if there are windows of video screens in the world, and I’m clicking on them and jumping through them? What if we take a game like Deus Ex, which is a stealth game normally, and we put video streams of other people playing in other universes? We have to use those video streams in our own gameplay. Deus Ex goes from a stealth game to a strategic game, because we have to keep in mind all of this other information that’s taking place.

We’ll show you Agni’s Philosophy running live on our Luminous Studio engine. For the very first time, we’re going to allow you to change the camera angle. We’ve never allowed the camera to be moved because usually this is running on a big strong PC with one CPU and GPU. Once you move the camera around while it’s moving, the framerate drops, and you can’t see anything. We don’t have to worry about that anymore. You can pause it and move it around. All the objects on the screen are generated in real time very smoothly.

What we’re trying to illustrate here is this Hollywood level of effects and graphics we’ve already reached as a publisher. What we’re trying to do with Project Flare is bring it to people at an acceptable quality. Not only can we do this with the pre-cut scenes, but we can do stuff like manipulate the characters for the first time. You can go ahead and play with Agni. This is the first time we’re letting people do this. On the bottom right of the pad here, you can cycle between some pre-set cameras. We’ve also added single-touch swipe to rotate and double-touch swipe to pan.

GamesBeat: Where is your demo running?

Navok: We have two servers back there, and we’re streaming it live from those servers. We’re not trying to showcase our ability to stream something. We’re showcasing our ability to render it in real time.

This demo here is one of my favorites. It shows what we can do when we enhance our existing titles. We take Deus Ex, and we radically increase the number of objects on the screen. Normally, the framerate would drop and you couldn’t do anything. But what we’ve done here is very interesting. We’ve separated rendering and physics into two separate servers. They’re interacting with each other to create this final image. There are no problems with all of those relational physics calculations happening there. You could try doing this separately, but it’s going to affect the quality of the framerate and the image. If you imagine those boxes becoming enemies, vehicles, and weapons, and that we’re able to render them side by side in the data center somewhere, you can start to see that image of Shibuya, with all of those people, happening at the quality level of Agni’s Philosophy. That becomes possible with the power of Project Flare.

What we’re showing is just a spark of the imagination, where we want to take these games in the future. We’ve just used our existing resources to put together some simple demos. From now on, we hope to create games that consider this from the ground up, that we can enhance with this technology, that really push the boundaries of what we can do for games.

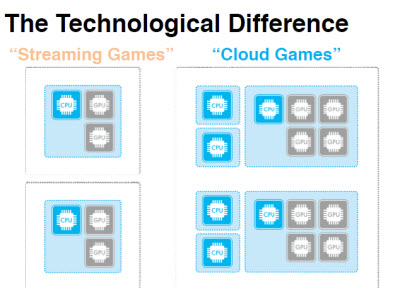

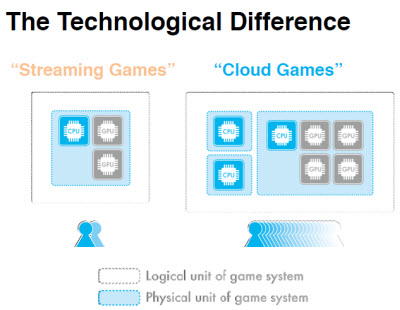

To explain a little bit about the technology that’s powering Flare, I want to explain the difference between streaming games and cloud games. For streaming games, the logical unit and the physical unit are the same. It’s the same as a PC or a console. Your unit of logic — a CPU connected on a motherboard to one or more GPUs — is always together. If you try to increase this, you’re simply increasing the same number of logical units. It doesn’t scale exponentially. We do something very different. We separate CPU and GPU. This is the first time this has ever been done.

GamesBeat: Why is that important?

It’s a very strange concept the first time you think about it. What happens is, some games are CPU heavy. Some games are GPU heavy. If you’re at home, it’s not really a problem, but when you have a lot of users running in a data center, that balance starts to add up. If we connected them together in one logical, physical unit, that balance would break our cost feasibility. But what happens with Project Flare is that if we need more compute [power], we add more CPU servers. If we need more rendering, we can add more rendering servers. We can make sure that things balance. The end result is that we get exponential scalability.
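As a rough illustration of the balancing argument above, the sketch below compares one fixed CPU+GPU box per game instance against CPU and GPU pools that are sized independently. It is a toy model, not Square Enix's system: the game profiles, the percentages, and the function names are all assumptions made up for the example.

```python
# Hypothetical per-instance demand, as a percentage of one CPU server
# and one GPU server. These numbers are invented for illustration.
GAMES = {
    "cpu_heavy": {"cpu": 80, "gpu": 20},
    "gpu_heavy": {"cpu": 20, "gpu": 80},
}


def ceil_div(a, b):
    """Integer division rounded up."""
    return -(-a // b)


def coupled_boxes(instances):
    """Assume one fixed CPU+GPU box per instance (console-in-the-data-center)."""
    return sum(instances.values())


def pooled_servers(instances):
    """Size the CPU pool and the GPU pool separately from total demand."""
    cpu = sum(GAMES[name]["cpu"] * n for name, n in instances.items())
    gpu = sum(GAMES[name]["gpu"] * n for name, n in instances.items())
    return ceil_div(cpu, 100), ceil_div(gpu, 100)


load = {"cpu_heavy": 100, "gpu_heavy": 10}
print(coupled_boxes(load))   # 110 boxes, each with its own CPU and GPU
print(pooled_servers(load))  # (82, 28): 82 CPU servers and 28 GPU servers
```

Under these assumptions the pooled layout serves the same load with far fewer GPUs sitting idle. The model only shows reduced waste from balancing; it does not by itself demonstrate the exponential scaling described in the interview.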

What happens in a typical streaming game is that if you want to add more people, you add another server, and you get the exact same number of people who were possible in the previous model. With us, the more servers you add, the more people who can get on there, exponentially. We improve, the more servers we add. Our costs go down.

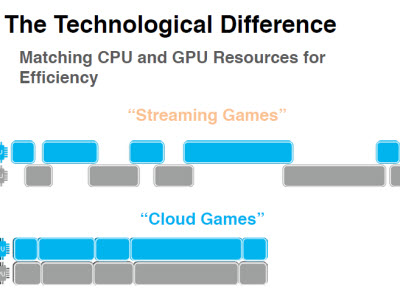

What’s happening with the technology behind this is that we match the CPU and the GPU for efficiency. This is not the sort of thing you normally have to do at home. You don’t have to worry about it. What typically happens is that the CPU and GPU are always waiting for each other. Those waits, in the data center, add up. If you have 1,000 people with CPUs and GPUs waiting for each other, you have a massive amount of wasted wait time that’s not being efficiently used. We fill all those gaps with commands from other people’s games. The end result is that we try to get to 100 percent efficiency, 100 percent utilization of the CPU and the GPU. Again, it’s never been done before because the majority of games were written for one CPU and one GPU. It’s a very different type of architecture. It’s a very different type of thinking.
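The gap-filling idea can be sketched with a small simulation. This is a simplified model under stated assumptions, not the actual Flare scheduler: each game alternates a fixed amount of CPU work on its own core with a fixed amount of GPU work, one GPU is shared through a FIFO queue, and switching between games costs nothing. The function name and timings are hypothetical.

```python
from collections import deque


def gpu_utilization(num_games, cpu_ms, gpu_ms, total_ms=1000):
    """Fraction of 1 ms ticks in which the single shared GPU is busy."""
    cpu_left = [cpu_ms] * num_games  # CPU work remaining in each game's frame
    queue = deque()                  # games whose frame is ready for the GPU
    running = None                   # game currently on the GPU
    gpu_left = 0
    busy = 0
    for _ in range(total_ms):
        finished = None
        # GPU step: pick up a waiting game if idle, then do one tick of work.
        if running is None and queue:
            running = queue.popleft()
            gpu_left = gpu_ms
        if running is not None:
            busy += 1
            gpu_left -= 1
            if gpu_left == 0:
                finished = running
                running = None
        # CPU step: every game still preparing a frame advances by one tick.
        for g in range(num_games):
            if cpu_left[g] > 0:
                cpu_left[g] -= 1
                if cpu_left[g] == 0:
                    queue.append(g)
        # A game that just left the GPU starts its next frame on the next tick.
        if finished is not None:
            cpu_left[finished] = cpu_ms
    return busy / total_ms


print(gpu_utilization(1, cpu_ms=3, gpu_ms=1))  # 0.25
print(gpu_utilization(4, cpu_ms=3, gpu_ms=1))  # 0.997
```

With a single game, the GPU waits on the CPU for three ticks out of every four. With four games interleaved, another game's commands fill each gap and the GPU stays busy after the first three ticks of warm-up.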

GamesBeat: How is this more scalable?

From there, what we do is we share the resources for scalability. Typically, when you have a streaming game, it’s the same as a PC or a console game. Every game is a silo. It’s its own instance, its own executable. We create one instance, and we have the users access those calculations and those resources. The more users we add, the better we scale. More and more people coming in makes our system a lot better and a lot cheaper.

We have a very interesting relationship between all of this, because what happens is, the reduction in the rendering rates from the amount of people in our system leads to cost reduction, which leads to latency reduction. Every time it takes us one less millisecond to render things for all of these people, we have one more millisecond we can give to network latency. We significantly reduce the amount of game-centric latency. To the extent possible, that leads to only network latency.
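The trade-off between render time and network time can be written as a simple budget. The numbers below are hypothetical (the interview gives no figures), and the split into render, encode, and decode stages is an assumption made for the example.

```python
def network_budget_ms(total_budget_ms, render_ms, encode_ms, decode_ms):
    """Milliseconds left for the network after server and client work."""
    return total_budget_ms - (render_ms + encode_ms + decode_ms)


# Hypothetical 100 ms end-to-end budget, before and after a rendering speedup.
before = network_budget_ms(100, render_ms=16, encode_ms=8, decode_ms=8)
after = network_budget_ms(100, render_ms=12, encode_ms=8, decode_ms=8)
print(before, after)  # 68 72
```

Each millisecond removed from rendering shows up as one more millisecond the network is allowed to take.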

We look at this as the evolution of games. Up until now, these games have been single, physical, logical units, little microorganisms operating independently. Even if you have millions of them, they don’t add up to anything larger. We try to connect those. We try to evolve them from single-celled creatures to multicellular creatures. The amount of knowledge it takes to do this, the type of knowledge it takes to do this, is very disparate. It’s not the type of things that are normally on a game team. You have to understand the video encoding, how rendering works, how server-side processing is going to work, how GPUs work, and so being able to coalesce that into a single vision is incredibly difficult.

Where we’re taking this from here is actively working with partners to develop a consumer service. From there, working with developers. We’re actively recruiting them, seeking a dialogue with developers who are inspired by the vision.

Yoichi Wada: The cloud is what is going to lead the game industry. That’s what I believe. However, to make it work, we need content that uses the features of the cloud. We want not only assets from Square Enix, but also work from other developers as well. At the moment, we don’t have a specific business framework, or specific partners. That’s why we did the presentation. We’re actively looking for other developers and business partners to move this thing forward.

We mentioned Ubisoft because they’re the first company to have serious discussions with us in this very early stage. We’re open to having conversations with many other companies. That’s what we’re looking for.

GamesBeat: What about the inconsistent broadband services across the United States? How big a piece of the solution is basically speeding up the networks?

Navok: Improving latency is a major concern for us, but it doesn’t only come from what we do on the game side. We have to work closely with telecom partners, and to the extent possible, be located inside their networks. As we look for partners, we’re particularly looking for those in the fiber optic and fiber-to-home areas, to make sure the quality of service, bandwidth, and low latency result in a gameplay experience that’s satisfactory to our users. As a game publisher ourselves, we want to make sure our games are fun and go to our players at a good enough quality to make them happy.

GamesBeat: Microsoft talked about using 300,000 servers for cloud processing on the Xbox One. How much are you anticipating you’ll need to set up in order to handle large demand?

Navok: The way we’re looking at it is that Square Enix itself is operating on the software layer. We’re not looking to build infrastructure. We’re working with partners who will invest in infrastructure within their networks because we think that makes the most sense in terms of the ecosystem. Telecom companies who are used to making investments in infrastructure, who understand how to run their networks, are the best place to manage and maintain these massive cloud configurations.

GamesBeat: Nvidia is also making a lot of advances with GPUs. They’re multifaceted and can handle a lot of instances at once. Are those things that determine how many instances you have?

Navok: The way we look at it is, as GPU power gets better and cheaper, we can radically increase the number of instances. But we’re not limited to any particular type of GPU or configuration. We consider ourselves to be agnostic. If you look at what’s happening with a lot of the GPU configurations in the cloud, they’re not maximizing the utilization of that processing power. When we run these 16 instances on a single GPU, we’re trying — to the extent possible — to get 100 percent of the calculating power of the GPU out there.

A very interesting thing happens in terms of the price-to-power ratio. From a conceptual standpoint, let’s imagine that you want to run a game that needs a GPU power of six to be at its maximum settings. But the only two GPUs available to you have a maximum power of five or a maximum power of seven. You’re running it on your PC and you want this game to be awesome, so you’ll pay double the price for a GPU with the power of seven, even though you only need six. In the data center, that doesn’t make much sense because we can just add up a bunch of fives, get 30, and then for half the price we’ll have exactly as much power. What we try to do when we think about processing power, on both the CPU and the GPU side, is find where on the bell curve between price and processing power we can hit the sweet spot.
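The arithmetic in this example can be worked through in a few lines. The sketch assumes five games that each need a power of six, a power-seven GPU that costs twice as much as a power-five GPU, and pooled GPU power that adds up linearly with no overhead. The prices and function names are placeholders, not real figures.

```python
def ceil_div(a, b):
    """Integer division rounded up."""
    return -(-a // b)


def dedicated_cost(num_games, gpu_price):
    """One GPU per game, as on a home PC."""
    return num_games * gpu_price


def pooled_cost(num_games, power_per_game, gpu_power, gpu_price):
    """Buy just enough smaller GPUs to cover the combined demand."""
    total_power = num_games * power_per_game
    return ceil_div(total_power, gpu_power) * gpu_price


# Five games needing power 6 each: total demand is 30.
print(dedicated_cost(5, gpu_price=2))                              # 10
print(pooled_cost(5, power_per_game=6, gpu_power=5, gpu_price=1))  # 6
```

With these placeholder prices, six power-five GPUs cover the demand of 30 for 6 units of cost, against 10 units for five power-seven GPUs. The exact saving depends on real prices and on how well the work actually splits across GPUs.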

GamesBeat: How soon can you deploy this?

Navok: We’re looking at beta tests in the next year or two with our telecom partners. We’re hoping to get into service after that.

GamesBeat: Do you plan on hitting any particular region first?

Navok: We’re looking at North America and, from there, Japan to start. The fiber-optic networks there are very good. We’ll continue to roll out where we see strong broadband potential, fiber, in other areas — Korea, for example, and other parts of Asia.

GamesBeat: Does it matter what kind of hardware a person has? Does it help that more Xbox Ones will be out there, for example?

Navok: It’s an interesting question. For us, we’re what we call a high-workload cloud, so we do all of the rendering and encoding on the servers. We’re only sending a video stream. But we’re neutral on the application layer. We could see ourselves going to a set-top box from telecom companies to next-generation consoles to Steam. What we’re calling the marketing layer, that final layer that touches the consumer, we’re flexible on that.

Wada: When it comes to cloud gaming, we’d like to be very flexible and open-minded. But, when it comes to making content for cloud gaming, we’ll have to be more strict about that. The technology we create, we want it to be very focused. If we don’t do that, I believe that we can’t provide new game experiences to players.

GamesBeat: Your bandwidth consumption when you stream video down, does that wind up being just a fraction of what it otherwise might be?

Navok: The video downstream is the same as basically any high-definition video downstream. Whatever it takes to get that from Netflix and the like is what we need. The upstream data from the controller is very limited. That’s almost nothing.

The real question for us is the latency, how low we can get that in order to make sure the quality of the service is acceptable to the user. It’s very interesting because you can’t cache a game. They’ve created all these content delivery networks all over the world to quickly distribute video to people. But I do believe that we need new solutions for the network to be able to do that for games. It’s one of the things we’re exploring as we speak with telecom companies and other companies involved in networking.

GamesBeat: Are you already working on some games that prove out the Flare idea?

Wada: At the moment, there’s nothing we can announce. But because of this presentation, the word is beginning to spread. We’re looking forward to receiving a lot of feedback and input.

GamesBeat: Does the name “Flare” itself mean something?

Navok: Mr. Iwasaki, do you want to explain that one [Laughter]?

Tetsuji Iwasaki: It’s random.

Wada: The answer changes every day, so if you ask him tomorrow, he’ll tell you something new.

Navok: There’s no particularly deep meaning, as far as I know.

GamesBeat: Improvements in hardware and the grid are helping you. Are you doing something new and different that’s making a difference in costs, scalability, and efficiency?

Navok: If we look at why we initially went about creating this technology, it started about three years ago, when Mr. Wada, Mr. Iwasaki, and I were having discussions about cloud gaming. Mr. Wada was a big believer that cloud was the future, that rendering and processing would all shift to the data center. As we looked at the first crop of companies that came out there, we had high expectations. We were involved in OnLive. We did a lot of experiments with different cloud companies. But we were very disappointed that none of them had a technological breakthrough.

What I mean by that is, they didn’t change the way games were played or designed. So we started studying this. We looked at what technology was powering this, and we realized that it was just an extension of remote desktop, basically. You were encoding something, sitting on top of the game, and streaming the game as-is. But if you really wanted to take advantage of all this distributed computing power in the data center, you had to change the software layer. You had to go into that API level where the game was interacting with the final rendered image and distribute that across all of those processors.

That discussion we had was what inspired us to go into research and development, which we started about a year and a half ago, and test our theories. The result of those tests is what you’re seeing in Project Flare today. We found that we could scale well and reduce latency. All those things that we thought we could do, when we started [research and development], we actually hit them. It was fairly shocking.

Wada: You might notice that there are two different descriptors in the presentation: streaming games and cloud games. Streaming games are what people called cloud gaming, but we felt they were quite disappointing. That’s why we like to differentiate between the two.

Navok: Let’s say you have two multiplayer games being streamed from a data center somewhere with one of the existing cloud providers. They’re being rendered right next to each other physically, but in completely separate cycles. They’re going out to some data center somewhere and then coming back separately from each other. It doesn’t make much sense. If they’re next to each other, they should be talking to each other and sharing their processing power and using it efficiently. We didn’t see that.

GamesBeat: It sounds like it definitely differs from Microsoft’s approach in that it’s interested in hybrid processing between Xbox One and the cloud. That method sometimes offloads AI and physics to the cloud. You guys are mostly doing the processing in the cloud.

Navok: You can look at two different ways of thinking about the cloud. There’s the idea of rendering the game as-is and streaming it, and there’s the idea of offloading some processing. But nobody ever combined those ideas together. You get an exponentially better result when you do that because when the processing of physics and the rendering are both taking place on that server, you have added efficiency. You have added scalability. We’re hoping to harness that power, because we think that when we do, new kinds of games will result.

It’s also really nice, when you start to offload these calculations, to not have to worry about what the final latency to the user is going to be because the rendering and the physics processing are located near enough to each other to be calculated for our final frame at a very quick speed.

GamesBeat: Are you still maximizing the hardware in the local machine?

Navok: The client? No. It’s just video being decoded at the final end. We’re not trying to do hybrid at this point. I’m sure other people will try to do that, but we’re focused to the extent possible on maximizing processing in the data center because that’s where we think efficiency comes from.

GamesBeat: Microsoft has a demo of an asteroid belt. The Xbox One rendered 40,000 asteroids. Then, when the console went out to the cloud, it added hundreds of thousands of asteroids to the same field. This sounds very similar to what you’re doing with the Shibuya demo.

Navok: It’s starting to grow similar. But we don’t have to worry about whether the user is going to be connected to us in the end. That’s a big concern for developers. If you’re going to design a game function where you can’t guarantee if the player is going to be able to access that function, how much do you invest in developing that function?

GamesBeat: Pull the plug and it’s 40,000 asteroids again.

Navok: Yeah. Let’s say that we want to go and put in those hundreds of thousands of asteroids in there. But we can’t guarantee that every user will see them.

GamesBeat: Is this one of Square Enix’s biggest investments as far as new game technology goes?

Wada: When it comes to the amount of investment we’ve put in, it’s not as enormous as you might imagine. What we’ve put in is our wisdom and experience in research and development.

Navok: But we have used a lot of the time of our senior management.
