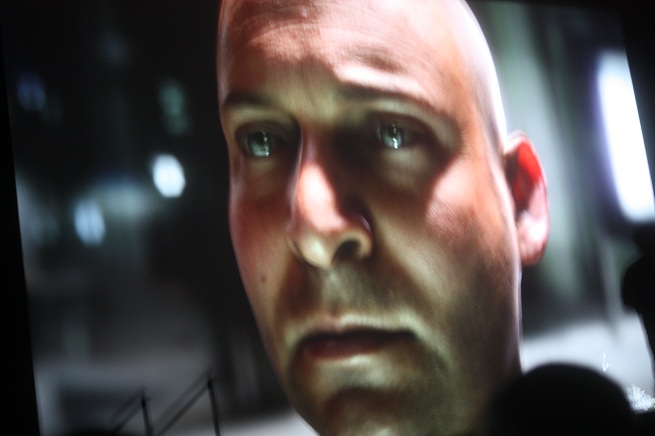

SAN JOSE, Calif. — Nvidia has nearly crossed the uncanny valley. The graphics computing giant debuted a technology today that showed how its graphics chips can render a human face with nearly flawless realism.

Jen-Hsun Huang, the chief executive of Santa Clara, Calif.-based Nvidia, unveiled FaceWorks, which can create a human face and make it move like a real person’s. It still looks slightly fake, but it is far more convincing than anything computers have been able to produce in the past. And the face pictured above, dubbed digital Ira, can be rendered with a single GPU (graphics processing unit).

The face above can be rendered with about two teraflops of computing power, or about half the power of Titan, Nvidia’s newest graphics chip with a whopping 7 billion transistors. It runs in real time, and so it could appear in a computer game.

You can now zoom in on a rendered face and see pores, wrinkles, hair blowing in the wind (not on this guy), and other real human flaws. Huang said that, working with Paul Debevec’s computing lab at the University of Southern California, animators can capture real human expressions. They capture about 30 expressions on video and then mold those expressions into realistic behavior and animations for a human face.

Huang said that the quality of the animations is now approaching the level needed to cross the “uncanny valley,” the observation that as an animated face gets closer to realistic without becoming fully lifelike, it starts to look creepier rather than more convincing.

He made the comments in the opening-day keynote at the GPU Technology Conference at the San Jose Convention Center in San Jose, Calif.

“If we are not at a tipping point, we are racing at it,” he said.

Patrick Moorhead, analyst at Moor Insights & Strategy, said, “I have seen many demos like this using Light Stage technology for the last few years, but they were rendered post-production, not real time. Products like Nvidia’s Titan enables the real-time rendering of faces that could really change real-time communications. It could be a lot of fun, too. Imagine if you are playing online games with friends and their avatars could be these real-life faces. You could use your favorite villain or character.”

Nvidia also showed a method for rendering realistic ocean waves. Dubbed WaveWorks, the technology makes it much easier for animators to produce waves that move with the same physical properties as real water.

VentureBeat's mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.