Presented by Western Governors University

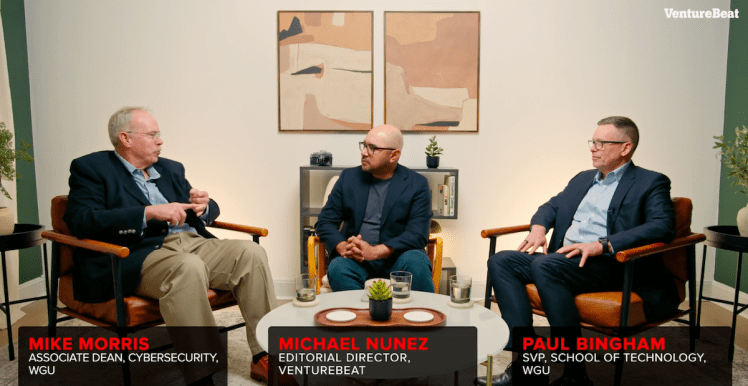

AI is powering increasingly sophisticated threats to the enterprise, from data poisoning to deepfakes. But AI is also on the side of the cybersecurity professionals who are fighting back. In this VB in Conversation, Paul Bingham and Mike Morris, former FBI cybersecurity agents with deep experience in nation state attacks and tactical response, sat down to talk about how they’re training the next wave of defenders to handle AI threats with speed, precision, and resilience at Western Governors University.

The most important thing to know is that the speed with which the criminals or the attackers are trying to breach networks and take advantage of all the potential vulnerabilities has increased exponentially.

“That means, for someone who’s trying to defend a network, they have to match that speed, or even try to stay one step ahead of it, so that when something weird comes in, they pick it up immediately and lock things down or roll things back to be able to get ahead of it,” says Paul Bingham, Senior Vice President and Executive Dean at Western Governors University’s School of Technology. “If the bad guys are using AI, we the defenders have to also use AI.”

Mike Morris, Associate Dean and Senior Director of Cybersecurity and Information Assurance at Western Governors University’s School of Technology, adds: “The funny thing about all this is that the vulnerabilities don’t change. The attack surface changes. Companies lose focus on their assets and how they’re going to secure all these things. The method through which the attack happens doesn’t change so much, but how they get to it does.”

The two sides of generative AI and security

Generative AI has made phishing and black hat hacking readily available to attackers. But the benefit AI brings is just as large.

“Yes, the speed of those risks has changed, but so has our ability to detect those and counteract those,” Bingham says. “The recursive learning that occurs in the machine learning part of our systems tracks anomalous behavior. The speed and the accuracy with which that’s developing is helping the good guys. The recursive learning’s helping the models train and learn so much more quickly and so much more efficiently.”
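As a rough illustration of what tracking anomalous behavior can mean in practice, the sketch below flags a reading that strays too far from a learned baseline. It is a simple statistical stand-in, not the machine learning systems Bingham describes. The function names, the sample login counts, and the three-standard-deviation threshold are all assumptions made for the example.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return mean(history), stdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value that sits more than `threshold` deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat baseline: any change at all is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly login counts for one account (illustrative data only).
normal_hours = [12, 15, 11, 14, 13, 12, 16, 14]
baseline = build_baseline(normal_hours)

typical_hour_flagged = is_anomalous(14, baseline)
burst_hour_flagged = is_anomalous(90, baseline)
```

A production system would learn from far richer signals and update its baseline continuously, but the principle is the same: define normal, then react quickly when something falls outside it.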

Social engineering attacks have gone nuclear, however. Generative AI needs only a scrap of a person’s voice to spoof real-time phone calls and even video calls, Morris says, such as a call in which a cloned voice begs a family member to send money for an emergency.

“This can happen to any person, any time,” he says. “Now let’s think about the CEO who gets a call, or the comptroller of a company. I need a wire sent right away! We have a big deal happening. The money needs to be sent right now. A good employee would get it done. I’ve seen a company lose $150,000 in under a minute doing that.”

Low-technology solutions to cyber attacks

To defend against these attacks, the short-term answer is going back to basics — it’s human-based rather than technology-based. How do you verify the person on the line? You have to ask them a question that only they would know, or even institute pass phrases.

“There’s no doubt that the human element is still important,” Bingham says. “That human element is what’s being exploited for whatever nefarious purpose. Given that, we cannot overlook the code word in a corporate environment or a family environment, something that neither AI nor any other human can outsmart. It really is this very elementary fix to so many things. We have to remain extra vigilant. Keeping the guards at the gate. Identification and authentication measures are still the things that are going to protect us.”

The importance of planning cannot be overstated, he adds.

“Just like the success of any tactical operation relied upon successful planning and preparation ahead of time,” he says. “In networking or the cyber world, as we prepare for this combat that we’re going to have with our adversaries, the success is not won or lost in the moment of the conflict. The success is going to be gained in the planning and the practicing of that plan well ahead of time.”

Tabletop exercises, which run simulations in real time to test and iterate on emergency plans, are crucial. They are especially valuable for testing the basics of security: making sure your data is protected and encrypted, knowing where your assets are, and knowing exactly who has permission to access them.

“One thing you see with AI, we give it too much power,” Morris says. “It would be like giving Alexa, as an AI agent, access to your bank account. You wouldn’t do that, right? You wouldn’t say, hey, Siri, move a million bucks for me. But that’s what companies are doing with some of their AI agents. They’re giving them way too much control. You can have unintended consequences if you give agents or APIs too much authority, too much access to protected data.”
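One way to picture the limit Morris is describing is a small permission gate that sits between an AI agent and the actions it requests. The sketch below is a minimal example under stated assumptions: the `AgentPolicy` class, the action names, and the $500 transfer cap are hypothetical and do not come from any particular agent platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical least-privilege policy for a single AI agent."""
    allowed_actions: frozenset
    max_transfer_usd: float


def authorize(policy, action, amount_usd=0.0, human_approved=False):
    """Return True only if the requested action fits within the policy."""
    if action not in policy.allowed_actions:
        # Anything not explicitly granted is denied.
        return False
    if action == "transfer_funds" and amount_usd > policy.max_transfer_usd:
        # Transfers above the cap require a person to sign off.
        return human_approved
    return True


# Illustrative policy: the agent may read balances and make small transfers.
policy = AgentPolicy(
    allowed_actions=frozenset({"read_balance", "transfer_funds"}),
    max_transfer_usd=500.0,
)

can_read = authorize(policy, "read_balance")
can_delete = authorize(policy, "delete_account")
can_move_million = authorize(policy, "transfer_funds", amount_usd=1_000_000)
can_move_with_signoff = authorize(
    policy, "transfer_funds", amount_usd=1_000_000, human_approved=True
)
```

The design choice is deny by default: the agent gets only the actions it was explicitly granted, and anything large goes back to a human.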

The tabletop exercise is where those issues turn up.

“If you have C-suite executives, run them cold. Don’t let them have a script ahead of time,” he adds. “Put them in the room, run through it, and watch the surprises happen. It’s much better to be surprised then, rather than when all of a sudden the power grid is down, banking goes down, and every federal agency is calling you.”

Taking a swing at safe AI

“From a business perspective, or an organization perspective, if you don’t bring in AI, your competitors will,” Morris says. “How do we bring it in safely? A lot of companies can’t afford to build it. They use a lot of the platforms that are out there. They already have trained models out there. The question becomes, how do you know how it was trained? Who’s testing that to make sure there aren’t any backdoors or malware? There have been multiple cases now with multiple platforms where hackers are putting malware in it.”

It goes back to that cybersecurity chestnut: “trust, but verify.” Know the purview of your tools and solutions, and verify that they’re safe and trustworthy. When you build a solution, make sure your AI functions correctly and test the data to ensure it’s secure and clean.
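One small, concrete form of “verify” is checking that a downloaded model file matches the checksum its publisher lists. The sketch below assumes the publisher provides a SHA-256 digest; the function names and the idea of a published digest are assumptions for illustration. A matching checksum shows only that the file was not altered or corrupted after publication. It says nothing about how the model was trained or whether a backdoor was present from the start.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_digest(path, expected_hex):
    """Compare the file's digest with the one the publisher lists."""
    actual = sha256_of_file(path)
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(actual, expected_hex.strip().lower())
```

A deployment script could call `matches_published_digest` and refuse to load any file that fails the check.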

The growing need for cybersecurity professionals

The world, and more specifically, the United States, needs more cybersecurity professionals, whether protecting our national digital infrastructure, or protecting the businesses that operate within our country, Bingham says.

“Anybody listening out there, please consider cybersecurity as a profession,” he adds. “There’s a ton of jobs available.”

At Western Governors University, programs are designed to future-proof professionals by instilling a solid understanding of foundational skill sets — the building blocks that allow graduates to adapt as technology evolves. That includes not only core technical training, but also instruction in how to responsibly use emerging tools like generative AI. Students are learning to use AI coding assistants as force multipliers, but only after they’ve built the technical fluency to understand, verify, and apply what these tools generate.

And as essential as iron-clad technical skills are, WGU is also prioritizing the development of people skills — like communication, collaboration, and critical thinking — to ensure graduates are not only job-ready but team-ready.

“Cyber professionals rarely live and work on an island by themselves,” Bingham explains. “They have to be able to work as a team, communicate well with each other, understand the business purposes that they’re there to help promote and protect.”

At the same time, degrees and certifications alone no longer meet expectations. Employers are looking for proof of practical skills, demonstrated in real-world scenarios.

“We’re implementing more and more hands-on keyboard skills,” Morris says. “At WGU, we have the largest cyber club in the United States. They’re competing nationally across the United States in events, so that when they go to an employer, they can show what they’ve done. They can not only say, I earned the degree, I earned these 15 industry certifications, but they can also hack right in. They can protect. Which side of the coin do you need me on? Red team, blue team, purple? How do you want me to do it?”

The most valuable lessons from Bingham and Morris come from real-world operations, not textbooks. They’ve seen how fast today’s threats evolve — and how high the stakes are when AI is in play. Now, they’re focused on preparing defenders who can respond just as fast, with the judgment, instincts, and tactical experience to match. Because the future of cybersecurity depends not just on tools, but on the next generation of security specialists trained to use them effectively and responsibly.

Sponsored articles are content produced by a company that is either paying for the post or has a business relationship with VentureBeat, and they’re always clearly marked.