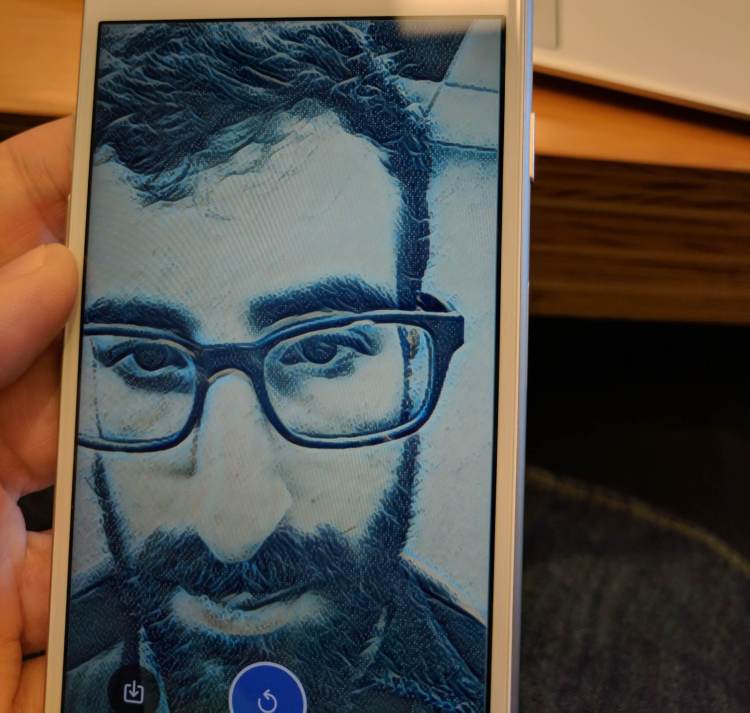

In conjunction with the Web Summit conference in Lisbon today, Facebook is unveiling artificial intelligence (AI) software that lets users apply and switch artistic styles on live video streams on Android and iOS. After demonstrating the technology at a conference last month, Facebook is now testing video style transfer on mobile in a few countries, and it will be deployed more widely in the near future.

The Caffe2go technology, which Facebook developed over the past three months, is an implementation of a hot type of AI called deep learning, which typically involves training neural networks on lots of data, like images, and then making inferences about new data. In this case, Facebook has developed pre-trained neural networks that can make inferences about new data on the fly on mobile. Google did something similar with a part of Google Translate last year, and Google also recently demonstrated neural style transfer technology of its own, although that has not yet been shown to run on mobile devices.

Training neural nets directly on a mobile device, without sending data to powerful remote computers, will “take a lot longer” because so much data and compute power is required, Facebook chief technology officer Mike Schroepfer told reporters during a briefing last week at Facebook headquarters in Menlo Park, California.

The work from Facebook and Google in this area follows the rise of the Prisma mobile app, which lets users add styles to photos and videos.

Facebook’s Caffe2go software is a full-blown deep learning framework based on the open-source Caffe2 software, which itself derives from the popular Caffe open-source deep learning framework. But, as its name suggests, Caffe2go is designed to be mobile-first, said Hussein Mehanna, engineering director of Facebook’s Applied Machine Learning group. “It really runs very fast on mobile,” Mehanna said. It can still run on a server chip or a graphics processing unit (GPU), though.

More specifically, Facebook has created an AI model that’s 100 times smaller than an existing one, Facebook research scientists Yangqing Jia and Peter Vajda wrote in a blog post.

Facebook intends to open-source parts of Caffe2go in the next few months, Jia and Vajda wrote.

Caffe2go is effectively a second platform for AI at Facebook. The first was Torch, the existing open-source deep learning framework to which Facebook has contributed extensively. Now, Facebook is positioning Caffe2go as strategically important to the company.

“Because of its size, speed, and flexibility, we’re rolling Caffe2go out across Facebook’s stack,” Jia and Vajda wrote.

Jia and Vajda also expound on how the team made the deep learning model small enough to fit onto a phone:

> We optimized the number of convolution layers (the most time-consuming part of processing) and the width of each layer, and we adjusted the spatial resolution during processing. The number of convolutional layers and their width can be used as separate levers for adjusting processing time, by adjusting how many aspects of the image are getting processed, or adjusting the number of times a separate processing action has taken place. For spatial resolution, we can adjust the actual size of what is being processed in the middle layers. By using early pooling (downscaling the size of the image being processed) and late deconvolution (upscaling the image after processes), we can speed the processing time up because the system is not processing as much information. We also found with these techniques that we could aggressively cut down on the width and depth of the network while maintaining reasonable quality.
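
To make those levers concrete, here is a rough back-of-the-envelope sketch. It is not Facebook's model or code: it simply counts multiply-accumulate operations (MACs) for a hypothetical stack of identical 3×3 convolution layers. The function names, the 256-pixel input, and the channel and layer counts are illustrative assumptions chosen only to show how depth, width, and spatial resolution each affect the amount of work.

```python
def conv_macs(height, width, in_channels, out_channels, kernel=3):
    """MACs for one stride-1, same-padded convolution layer."""
    return height * width * in_channels * out_channels * kernel * kernel


def network_macs(resolution, channels, depth, downscale=1, kernel=3):
    """Rough MAC count for `depth` identical middle layers (hypothetical network).

    `downscale` models early pooling: the middle layers process an image
    whose side length is resolution // downscale.
    """
    side = resolution // downscale
    return depth * conv_macs(side, side, channels, channels, kernel)


# Illustrative numbers only, not taken from the article or the blog post.
baseline = network_macs(resolution=256, channels=128, depth=10)
pooled = network_macs(resolution=256, channels=128, depth=10, downscale=2)
narrow = network_macs(resolution=256, channels=64, depth=10, downscale=2)
shallow = network_macs(resolution=256, channels=64, depth=5, downscale=2)

print(baseline // pooled)   # 4: halving the side length quarters the work
print(baseline // narrow)   # 16: halving the width quarters it again
print(baseline // shallow)  # 32: halving the depth halves it once more
```

Under these assumptions, spatial resolution and layer width each scale the cost quadratically while depth scales it linearly, which is consistent with the researchers' description of them as separate levers. The estimate says nothing about output quality, which the researchers evaluated separately.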

See the full blog post for more detail.
